It's one of the most frustrating feelings in SEO: you've poured time and effort into your new website, but when you search for it on Google... crickets. If you're stuck wondering, "Why is Google not indexing my site?", you're not alone. The problem usually traces back to one of a few culprits—technical hiccups, content that doesn't meet Google's quality bar, or even a simple setting telling search engines to stay away.

This first check is all about getting a quick, clear answer to frame your investigation.

Before you get lost in the weeds of complex tools, a super simple check can tell you almost everything you need to know. The goal here is to figure out the scale of the problem. Is your entire site invisible, or are just a few pages playing hide-and-seek with Google?

The fastest way to get a snapshot is with a site: search.

Just head over to Google and type site:yourdomain.com into the search bar, making sure to replace yourdomain.com with your website's address. This command asks Google to show you pages it has indexed for that specific domain. The list is a sample rather than a complete inventory, so treat the result count as a rough estimate, not an exact figure.

The results—or the lack of them—are your first real clue.

What you see after running that command points you down one of two very different paths.

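If nothing shows up at all, the problem is probably site-wide, such as an overly restrictive robots.txt file or a "noindex" tag accidentally placed across every page. If some pages appear but others are missing, you're most likely dealing with a page-level issue.

This first check saves you from chasing ghosts. You now know if you're hunting for a site-wide catastrophe or a page-level snag. For instance, I've seen plenty of sites where older pages are indexed perfectly, but new blog posts take weeks to show up. That's a classic sign of a crawl budget or sitemap issue, not a total site block.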

To help you quickly identify the issue, I've put together a simple table that summarizes the most common indexing problems and where to look first.

This checklist isn't exhaustive, but it covers the vast majority of cases I've encountered. It gives you a clear starting point for your investigation.

With that initial insight in hand, it's time to roll up your sleeves and dig into Google's own diagnostic tools. This is where Google Search Console (GSC) becomes your best friend. If you haven't set it up yet, stop what you're doing and get it verified now. It’s the only way to get direct, unfiltered feedback from Google about your site's health.

Getting your content indexed properly is the bedrock of any solid digital strategy. For more context on how this fits into a bigger picture, these proven SEO strategies for B2B companies show how a well-indexed site is the launchpad for growth.

And since a sitemap is your direct line to Google, make sure it’s set up correctly by following our guide on submitting a sitemap to Google. Getting these fundamentals right is critical as we move into more advanced troubleshooting.

If a site: search is your flashlight, then Google Search Console (GSC) is the full-blown floodlight for figuring out why Google isn’t indexing your site. This free platform is your direct line to Google, packed with data you simply can't get anywhere else.

Forget the main dashboard for now. We're going straight to the source of truth: the Pages report.

This report, which used to be called the Index Coverage report, is where Google lays all its cards on the table. It tells you exactly what it thinks of every single URL it has found on your domain, splitting them into two buckets: "Indexed" and "Not indexed."

Naturally, we're diving into the "Not indexed" section. Think of it less as a list of failures and more as a categorized set of clues, each one telling you exactly what went wrong.

Once you expand the "Not indexed" details, you’ll see a list of reasons. Each one points to a different problem and requires a different fix, so let's break down what they mean.

Here are the usual suspects you'll run into:

Excluded by 'noindex' tag: a noindex tag was accidentally left on important pages after a site migration or redesign.

Blocked by robots.txt: your robots.txt file has a rule that explicitly forbids Google from crawling this URL. While handy for keeping private sections of your site out of search, an overly aggressive rule can accidentally block entire categories of content you want people to find.

The real power of GSC is that it doesn't just give you vague error names. It gives you a list of every single URL affected by each specific problem, so you know exactly where to look.

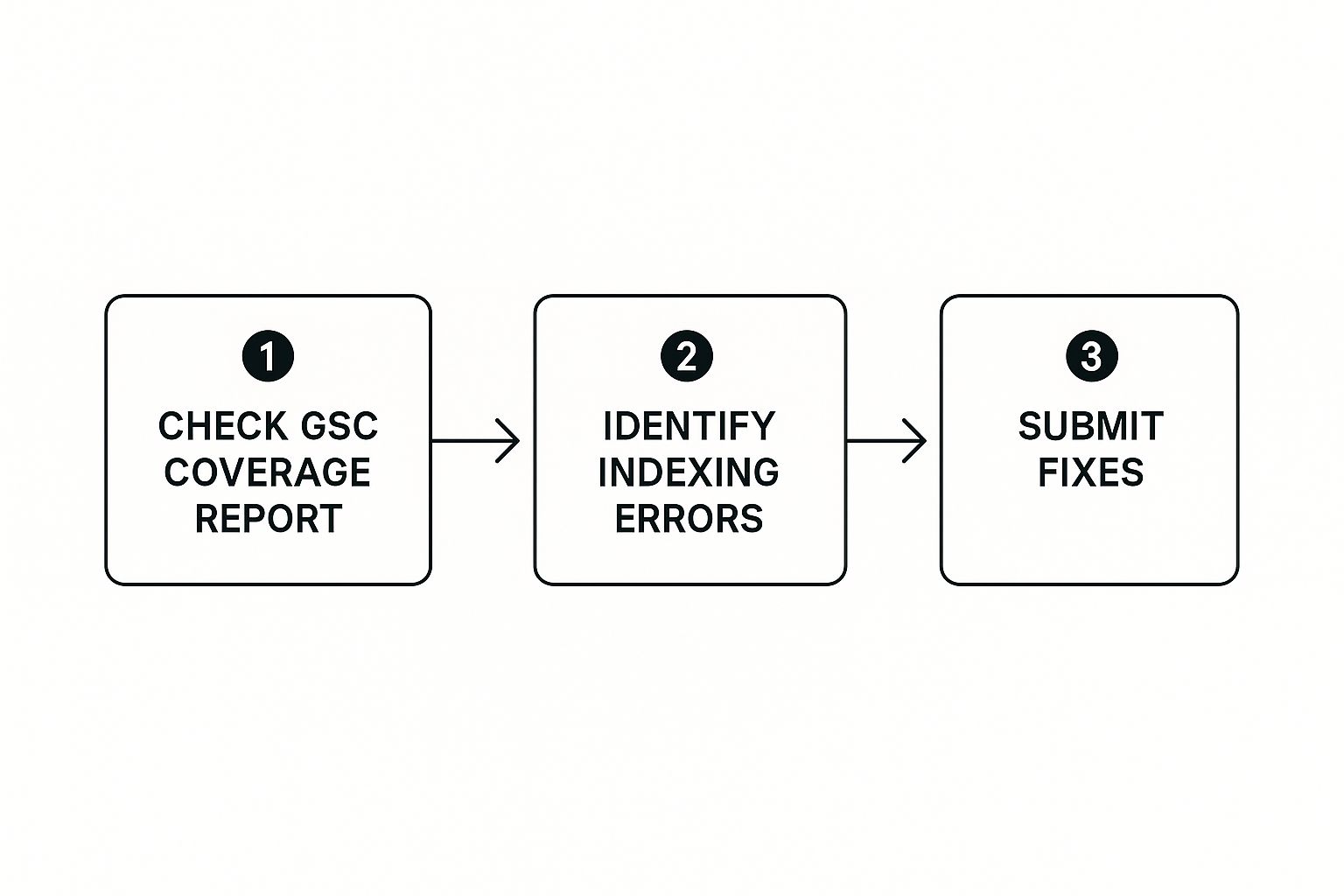

After you’ve diagnosed the root cause, it’s time to fix it. If a stray noindex tag is the culprit, you need to remove it from the page's HTML. If your robots.txt file is too restrictive, you'll need to edit it to allow crawling.
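Before you ask Google to re-check anything, it's worth confirming the tag is really gone. Here's a minimal sketch of that check using only Python's standard library. The function name `has_noindex` is my own, and it assumes you've already fetched the page's HTML and response headers yourself. It looks at the robots meta tag and the X-Robots-Tag header, which are the two places a noindex directive usually hides.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr_map = {name.lower(): (value or "") for name, value in attrs}
        if attr_map.get("name", "").lower() in ("robots", "googlebot"):
            # One content value can hold several comma-separated directives.
            for part in attr_map.get("content", "").split(","):
                self.directives.append(part.strip().lower())


def has_noindex(html, headers=None):
    """Return True if the HTML or the X-Robots-Tag header carries noindex."""
    parser = RobotsMetaParser()
    parser.feed(html)
    header_value = ""
    for key, value in (headers or {}).items():
        if key.lower() == "x-robots-tag":
            header_value = value.lower()
    return "noindex" in parser.directives or "noindex" in header_value
```

Run it against the live page after your fix ships. If it still returns True, a plugin, template, or server rule is probably re-adding the directive.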

Once you've pushed the fix live, head back to GSC and click the "Validate Fix" button for that error type.

This action is crucial. It signals to Google that you believe you've solved the problem and starts a validation process in which Google re-checks the affected URLs. It doesn't guarantee instant indexing, and validation can take days or even weeks, but it's the most direct way to ask Google to re-evaluate those pages and it can speed up the recovery process.

If you find that your entire website is not showing up on Google, the issue is probably a site-wide problem that GSC will highlight right away, often with a manual action or a major crawling anomaly.

By getting comfortable with the Pages report, you can turn GSC from an intimidating dashboard into your most powerful tool for winning the visibility game.

So you've checked every tag, validated your sitemap, and confirmed your server is running perfectly. But your pages are still not showing up. It's a tough pill to swallow, but sometimes the problem isn't on your end at all.

The issue might actually be with Google itself.

Google's infrastructure is a mind-bogglingly complex machine, and just like any massive piece of technology, it’s not immune to glitches. Honestly, just accepting this can save you from frantically changing site elements that aren’t even broken. When your site is technically sound but completely invisible, there's a real chance you're just caught in the crossfire of a wider system issue.

This isn't just a theory, either. It has happened before.

Back in August 2020, the SEO community went into a frenzy over widespread, bizarre fluctuations in search results. It wasn't another algorithm update. It was a major failure deep within Google's own indexing system.

The problem was traced back to Caffeine, the indexing infrastructure Google announced in 2009 and finished rolling out in 2010 to process and index web content at incredible speeds. A malfunction caused it to generate what was later called a "strange index," where search results were just plain wrong, even though the underlying data was intact. You can read a full breakdown of this indexing system failure on Search Engine Roundtable.

This is a perfect example of how even the most robust systems can have a bad day. When something like this happens, even perfectly optimized websites can see their visibility plummet or new content get stuck in a seemingly endless queue.

The key takeaway here is simple: if you've done everything right and your site still isn't indexed, sometimes the most effective strategy is to just wait. Keep an eye on SEO community news—if other people are reporting the same problems, it’s almost certainly a Google-side issue.

If you think Google is the culprit, patience is your best friend. Panicking and making drastic changes to your site can do far more harm than good. Instead, this is your game plan:

Recognizing when the problem is external helps you build a more resilient SEO strategy. It teaches you to tell the difference between a problem you need to fix and a broader issue you just have to wait out.

If you’re running a small site with just a few dozen pages, you probably don't need to lose sleep over Google crawling everything. It'll get there. But once you scale up—think e-commerce stores with thousands of products or news portals publishing content daily—the game completely changes. Getting every page seen isn't a given; it's a constant battle for Google's attention.

This is where the idea of crawl budget becomes so important. Think of it like this: Google only has so much time and so many resources to crawl the entire internet. Your crawl budget is the tiny slice of that pie it decides to give your website.

On a massive site, that budget can run out long before Googlebot ever finds its way to your less critical pages. The result? Huge chunks of your site get left in the dark, stuck with that frustrating "Discovered - currently not indexed" status. This is a classic reason people end up asking why Google is not indexing their site.

If Googlebot wastes its limited budget crawling low-value URLs—like faceted navigation filters, internal search results, or ancient tag pages—it simply won't have the bandwidth left to discover your new blog posts or key product pages. That directly hurts your bottom line.
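To put a number on that waste, you can measure what share of Googlebot's requests land on low-value URLs. The sketch below assumes you've already pulled a list of URLs that Googlebot requested out of your server logs. The path prefixes and parameter names are hypothetical placeholders, so swap in whatever your own site uses for search, tags, and filters.

```python
from urllib.parse import urlsplit, parse_qs

# Hypothetical patterns; adjust them to your own URL structure.
LOW_VALUE_PREFIXES = ("/search", "/tag/")
LOW_VALUE_PARAMS = {"color", "size", "sort"}


def is_low_value(url):
    """True for internal search, tag pages, or faceted-filter URLs."""
    parts = urlsplit(url)
    if parts.path.startswith(LOW_VALUE_PREFIXES):
        return True
    # Any filter parameter in the query string marks a faceted URL.
    return not LOW_VALUE_PARAMS.isdisjoint(parse_qs(parts.query))


def wasted_share(crawled_urls):
    """Fraction of crawled URLs that count as low-value."""
    if not crawled_urls:
        return 0.0
    wasted = sum(1 for url in crawled_urls if is_low_value(url))
    return wasted / len(crawled_urls)
```

If a large fraction of crawls comes back as low-value, that's a strong hint that cleaning up those URLs will free up budget for the pages you care about.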

Here's a painful reality: even a page with strong backlinks is completely useless if it isn't indexed. Those links pass zero authority because, as far as Google's search results are concerned, the page doesn't even exist.

And this isn't just a theory. It's a widespread problem. Industry research shows that around 16% of major websites globally aren't fully indexed by Google. Even Google's own experts have been clear they don't promise to index every single page, especially on huge sites where many URLs offer little unique value. For some domains, this can mean as little as 10% of their pages actually make it into the index. You can see how this impacts backlink effectiveness in detailed studies.

To make sure Google spends its time on the pages that actually matter, you need to start actively managing your crawl budget. It’s all about guiding Googlebot away from the digital clutter and pointing it toward your most valuable content.

Here are a few effective strategies I've seen work time and time again:

robots.txt: Use your robots.txt file to tell Google to ignore URLs that provide no real value. Think filtered search pages (/products?color=blue) or print-friendly versions.

By actively guiding Googlebot, you're not just tidying up your site; you're strategically directing Google's finite attention. This ensures your most important pages get crawled frequently and prioritized for indexing, preventing them from getting lost in the noise.
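Before you deploy new robots.txt rules, it helps to test them against a few real URLs so you don't block something important by accident. This is a rough sketch using Python's built-in `urllib.robotparser`. The rules and the example.com URLs are made up for illustration. One caveat: the standard-library parser does simple prefix matching and doesn't implement Google's wildcard extensions, so treat it as a sanity check rather than an exact replica of Googlebot's behavior.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: block internal search and print-friendly paths.
ROBOTS_TXT = """\
User-agent: *
Disallow: /search/
Disallow: /print/
"""


def is_crawlable(url, robots_txt=ROBOTS_TXT, agent="Googlebot"):
    """Return True if the given rules allow the agent to fetch the URL."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(agent, url)
```

Feed it a handful of URLs you want indexed and a handful you don't. If a money page comes back as not crawlable, your rule is too broad.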

For a deeper dive into these techniques, be sure to check out our complete guide on crawl budget optimization. Mastering this is absolutely key to solving indexing headaches on any large-scale website.

Let's be honest: manually asking Google to index every new page you publish is a losing game, especially if you run an active website. It's a reactive, time-consuming chore.

If you're serious about getting your content seen, you need to shift from one-off requests to a proactive, automated system. This is how you build a reliable indexing pipeline that works for you around the clock, not against you.

The cornerstone of any solid indexing strategy is a clean, dynamic XML sitemap. Think of it as the official map you hand-deliver to Google, showing it exactly where all your important pages are located. But just having a sitemap isn't enough—it needs to be alive.

This means your sitemap must automatically update the second you publish a new post or tweak an old one. While many modern CMS platforms handle this for you, it's something you absolutely have to verify. A static, outdated sitemap is one of the most common—and frustrating—reasons why Google is not indexing your site’s latest content.
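If your CMS doesn't do this for you, regenerating the sitemap on every publish is not much work. Here's a minimal sketch, assuming you can get a list of (URL, last-modified date) pairs out of your own database or CMS. The function name `build_sitemap` and the example URLs are mine. It produces a standard sitemap with a `lastmod` value for each page.

```python
import xml.etree.ElementTree as ET
from datetime import date

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Build sitemap XML (as bytes) from (url, last_modified_date) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url, last_modified in pages:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
        # lastmod uses the ISO date format, e.g. 2024-01-15.
        ET.SubElement(entry, "lastmod").text = last_modified.isoformat()
    return ET.tostring(urlset, encoding="utf-8", xml_declaration=True)


sitemap_xml = build_sitemap([
    ("https://example.com/", date(2024, 1, 15)),
    ("https://example.com/blog/new-post", date(2024, 2, 1)),
])
```

Hook a call like this into your publish and update events, write the result to your sitemap file, and the map stays current without anyone touching it.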

A perfect sitemap is non-negotiable, but it’s still a passive tool. You're basically just leaving the map out and waiting for Google to swing by and check it. For urgent or time-sensitive content, you need a more direct line of communication.

This is where Google's Indexing API enters the picture. It was built specifically for content that can't wait, like job postings or livestream videos. By using the API, you can ping Google the moment a page goes live or is taken down, often getting it crawled in minutes instead of days.

Key Insight: The Indexing API isn't a silver bullet for every type of content. It comes with strict usage guidelines. But when you use it for its intended purpose, it's one of the most powerful tools in your arsenal for getting timely information into the search results, fast.
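To give you a feel for what a notification looks like, here's a sketch that builds the request without sending it. It assumes you already have an OAuth 2.0 access token for a service account that's authorized for the Indexing API and verified as an owner of the site. Getting that token is a separate step I'm not showing here. The helper name `build_notification` is my own.

```python
import json
import urllib.request

ENDPOINT = "https://indexing.googleapis.com/v3/urlNotifications:publish"


def build_notification(page_url, access_token, removed=False):
    """Build (but do not send) an Indexing API notification request."""
    payload = {
        "url": page_url,
        # URL_UPDATED for new or changed pages, URL_DELETED for removed ones.
        "type": "URL_DELETED" if removed else "URL_UPDATED",
    }
    return urllib.request.Request(
        ENDPOINT,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {access_token}",
        },
        method="POST",
    )
```

Passing the returned request to `urllib.request.urlopen` would actually send it. Keep in mind the API has daily quotas, so batch your calls and only notify for pages that genuinely changed.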

Now, managing the Indexing API directly can get pretty technical. That's where third-party automation tools like IndexPilot come in. These platforms act as a bridge, handling all the technical heavy lifting between your site and Google's indexing systems.

Here’s how they typically work:

This approach transforms your indexing process from a manual headache into an automated, hands-off machine. It gets your content in front of eyeballs faster, which is absolutely critical for news sites, e-commerce stores, and active blogs.

To take it a step further, learning how to increase your Google crawl rate can perfectly complement these automation efforts by encouraging Googlebot to visit your site more often in the first place.

And don't forget, technical fixes aren't the only piece of the puzzle. A smart content syndication strategy can also give your indexing speed a serious boost. By getting your content onto other reputable platforms, you create more paths for search crawlers to find your work and recognize its authority.

Ultimately, building a proactive and scalable process is the true fix for achieving long-term visibility.

Even after you've poked around in Search Console and tried to clean up your sitemap, some questions just keep popping up. If you're still stuck wondering why Google is not indexing your site, you're not alone. I get these questions all the time.

Let's tackle some of the most common head-scratchers I hear from site owners who feel like they've tried everything.

How long does it take Google to index a new page? This is the classic "it depends" answer, but I can give you some real-world benchmarks. It can be anywhere from a few days to several weeks.

If you have a brand-new website but you've set up your internal links properly and have a clean sitemap, Google might find and index your core pages within a week. For a well-established site with high authority, I’ve seen new articles get indexed and start ranking in less than 24 hours.

But here's the red flag: if it’s been over a month and your page is still nowhere to be found, it’s time to stop waiting and start investigating. Something is almost certainly blocking Google.

Expert Tip: The age and authority of your domain matter—a lot. New domains often get stuck in what some SEOs call the "sandbox," where Google is more cautious about indexing new content until it builds some trust. Patience helps, but don't let it turn into inaction.

Can an indexing tool guarantee that Google will index your pages? Nope. And you should be incredibly skeptical of any service that makes that guarantee. A lot of these tools just ping Google's own Indexing API, which was never meant to be a magic bullet for forcing low-quality pages into the index. It's designed for specific content types, like job postings or livestreams.

While some services can speed up the discovery part of the process, they can't make Google index a page that has thin content, technical errors, or other quality issues. Your first move should always be to fix the root problem. After that, a tool can help get Google's attention faster.

Can a manual action stop your pages from being indexed? Yes, absolutely. A manual action is one of the most serious issues you can face and it can bring your indexing to a screeching halt. If a human reviewer at Google has flagged your site for spammy tactics or other major policy violations, they can de-index pages or even your entire site.

If you see a sudden, catastrophic drop in your indexed page count, the "Manual actions" report in Google Search Console should be your first stop. Looking for other ways to diagnose the problem? Our guide on how to check if your website is indexed walks through several simple checks you can run right now to get a clearer picture.

Tired of manually checking and submitting your pages? IndexPilot automates the entire process, ensuring your new content gets discovered by Google and Bing the moment it's published. Stop waiting and start indexing by visiting https://www.indexpilot.io to begin your free trial.