Crawl budget optimization is all about making it dead simple for search engines like Google to find, crawl, and index your most important pages. It's a mix of improving your site's technical health and steering crawlers away from low-value fluff. Get this right, and your key pages won't get lost in the shuffle, which has a direct and powerful impact on your overall SEO performance.

Think of Googlebot as a librarian with a very tight schedule. It's visiting your massive library—your website—but it absolutely cannot read every single book on every visit. Instead, it has a system for deciding which aisles to go down and which books to scan. That system is your crawl budget.

This isn't just a concern for massive e-commerce sites anymore. It’s a fundamental piece of modern, technical SEO.

If you let Googlebot waste its time on unimportant pages (like expired promotions, endless tag archives, or broken links), it might never get around to indexing the pages that actually drive your traffic and revenue. Your brand-new blog posts or critical product pages could become practically invisible.

Your site’s crawl budget isn’t a single, static number that Google assigns you. It's a dynamic relationship between two core components that dictate how Googlebot interacts with your domain.

This table breaks down the two primary components of crawl budget so you can quickly see what influences Googlebot's behavior on your site.

| Component | What It Means for Your Site | Key Influencing Factors |
| --- | --- | --- |
| Crawl Capacity | This is the technical ceiling: how many requests Googlebot can make without slowing your site down. | Server response time, site speed, overall site health, hosting stability. |
| Crawl Demand | This is about how "in-demand" or popular Google thinks your pages are. | Page popularity (links/traffic), content freshness, sitemap updates. |

A fast, stable site with quick server response times essentially tells Google, "Feel free to crawl more; I can handle it." That increases your Crawl Capacity. At the same time, fresh content, strong internal linking, and high-quality backlinks signal that your pages are worth visiting often, which boosts Crawl Demand.

It's this interplay that really matters. A healthy crawl budget ensures that when you publish or update your best content, search engines can find and process it quickly, not weeks later.

Key Takeaway: A healthy crawl budget doesn't just mean getting crawled more; it means getting the right pages crawled efficiently. It's about quality over quantity, ensuring Google spends its resources on content that matters to your business.

Even if your site doesn't have millions of pages, crawl efficiency is still critical. Common issues like long redirect chains, soft 404 errors, and mountains of low-quality indexed pages can drain your budget, no matter how big or small your site is.

Think of optimizing your crawl budget as a proactive tune-up for your site’s technical SEO health. It's a foundational skill that supports every other SEO effort you make.

For those looking to see how this fits into the bigger picture, exploring broader SEO topics can provide valuable context on how all these elements work together. Ultimately, guiding Googlebot effectively is what separates the sites that get found from the ones that get lost.

Before you can fix a leaky crawl budget, you have to play detective. The truth is, wasted crawl budget often hides in plain sight, masquerading as normal site activity. Pinpointing exactly where Googlebot is getting stuck is the only way to build an action plan that actually works.

Your investigation always starts with Google Search Console (GSC). It's your direct line of communication with Google, and the Crawl Stats report is where you'll find the first clues about how Googlebot really sees your site.

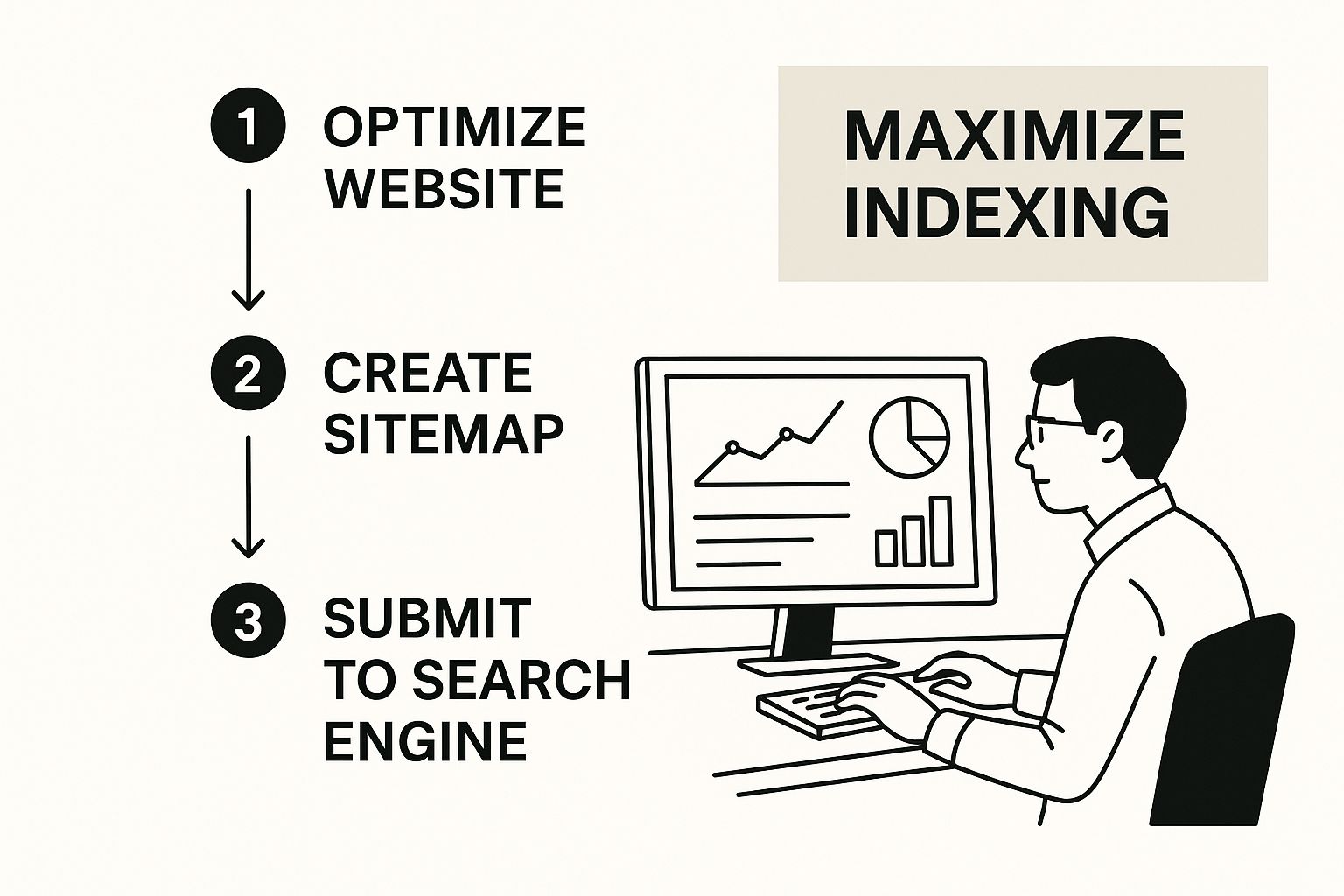

This image gives you a great high-level view of what we're trying to accomplish here: analyzing data to make sure our most important content gets found and indexed.

Ultimately, understanding and guiding crawler behavior is how we maximize indexing. It all starts with the data.

When you pop open the GSC Crawl Stats report, you're looking for trends, not just a single day's numbers. Pay close attention to its three core metrics to spot the red flags: total crawl requests, total download size, and average response time.

For instance, if you notice a gradual drop in crawl requests that lines up perfectly with a sharp rise in your server response time, you've found your smoking gun. That’s a classic crawl capacity problem—your server is struggling, and Google is pulling back to avoid overwhelming it.
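The pattern described above can be checked with a few lines of Python once you've copied the daily figures from the Crawl Stats charts into two lists. This is a minimal sketch under stated assumptions: the numbers are hypothetical, "trend" is simplified to comparing the first and second halves of the period, and the -0.7 correlation cutoff is an illustrative threshold, not a value Google publishes.

```python
from statistics import mean


def pearson(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation coefficient of two equal-length series."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two series of equal length (at least 2 points)")
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return 0.0  # a flat series has no meaningful correlation
    return cov / (var_x * var_y) ** 0.5


def half_means(series: list[float]) -> tuple[float, float]:
    """Average of the first half vs. the second half of a series."""
    mid = len(series) // 2
    return mean(series[:mid]), mean(series[mid:])


def capacity_red_flag(requests: list[float], response_ms: list[float],
                      threshold: float = -0.7) -> bool:
    """True when crawl requests fall, response time rises, and they move strongly together.

    The threshold is an illustrative assumption, not a Google number.
    """
    r = pearson(requests, response_ms)
    req_early, req_late = half_means(requests)
    ms_early, ms_late = half_means(response_ms)
    return req_late < req_early and ms_late > ms_early and r <= threshold


# Hypothetical daily values copied from the Crawl Stats charts.
daily_requests = [1000, 950, 900, 820, 760, 700]
avg_response_ms = [200, 230, 260, 320, 380, 450]
print(capacity_red_flag(daily_requests, avg_response_ms))  # True
```

A flag like this is only a prompt to investigate; the server logs discussed next tell you why the server is slowing down.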

While GSC gives you a fantastic overview, server log files are the ground truth. These raw files record every request made to your server, including each visit from Googlebot. Analyzing them shows you precisely where your crawl budget is being spent, URL by URL.

Log file analysis can feel intimidating, I get it. But tools like Screaming Frog Log File Analyser can make the whole process much more manageable. When you run an analysis, you’re looking for patterns of pure waste.

Expert Tip: The most powerful insight from log files is the gap between what Googlebot is crawling versus what you want it to crawl. If you discover Googlebot is spending 80% of its time on low-value filtered URLs, you’ve just found a massive budget drain.
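To measure that gap yourself, here's a minimal sketch that reads access-log lines in the common "combined" format, keeps only requests whose user agent claims to be Googlebot, and reports what share of those hits landed on URLs with a query string. The log format is an assumption (adjust the regex to your server's format), and the sample lines are made up. Matching on the user-agent string alone is also a simplification, since anyone can spoof it; Google recommends confirming real Googlebot traffic with a reverse DNS lookup.

```python
import re
from collections import Counter

# Combined Log Format (assumed): ip - user [time] "METHOD path PROTO" status size "referer" "agent"
LOG_LINE = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>[A-Z]+) (?P<path>\S+) [^"]*" (?P<status>\d{3}) \S+ '
    r'"[^"]*" "(?P<agent>[^"]*)"'
)


def googlebot_hits(lines):
    """Yield (path, status) for lines whose user agent claims to be Googlebot."""
    for line in lines:
        match = LOG_LINE.match(line)
        if match and "googlebot" in match["agent"].lower():
            yield match["path"], int(match["status"])


def parameter_share(lines) -> tuple[float, Counter]:
    """Share of Googlebot hits on parameterized URLs, plus hit counts per base path."""
    total = 0
    param_paths = Counter()
    for path, _status in googlebot_hits(lines):
        total += 1
        if "?" in path:
            # Group by the path before the query string, e.g. "/products".
            param_paths[path.split("?", 1)[0]] += 1
    share = sum(param_paths.values()) / total if total else 0.0
    return share, param_paths


GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
BINGBOT_UA = "Mozilla/5.0 (compatible; bingbot/2.0; +http://www.bing.com/bingbot.htm)"
# Hypothetical log lines for illustration.
sample_log = [
    f'66.249.66.1 - - [10/May/2024:10:00:00 +0000] "GET /products?color=blue&sort=price HTTP/1.1" 200 5120 "-" "{GOOGLEBOT_UA}"',
    f'66.249.66.1 - - [10/May/2024:10:00:05 +0000] "GET /products?size=m HTTP/1.1" 200 4980 "-" "{GOOGLEBOT_UA}"',
    f'66.249.66.1 - - [10/May/2024:10:00:09 +0000] "GET /products/blue-shirt HTTP/1.1" 200 7300 "-" "{GOOGLEBOT_UA}"',
    f'40.77.167.1 - - [10/May/2024:10:01:00 +0000] "GET /search/?q=shoes HTTP/1.1" 200 3100 "-" "{BINGBOT_UA}"',
]

share, offenders = parameter_share(sample_log)
print(f"{share:.0%} of Googlebot hits went to parameter URLs")  # 67% of Googlebot hits went to parameter URLs
print(offenders.most_common(5))  # [('/products', 2)]
```

Grouping by the base path is a deliberate choice: it tells you which template (here `/products`) is generating the waste, which is what you'll target in robots.txt or your navigation.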

As you comb through your logs and GSC data, keep an eye out for the usual suspects: parameter URLs from faceted navigation, long redirect chains, soft 404 errors, and piles of low-quality pages. They're notorious for chewing up crawl budget without adding any SEO value.

Spotting these issues is honestly the hardest part of the job. But once you know for a fact that Googlebot is wasting thousands of hits on your /products?color=blue&sort=price URLs, you can finally take targeted action.

For a deeper dive on boosting crawler activity, our guide on how to increase your Google crawl rate lays out the next steps. Finding the problem is step one; fixing it is where the real fun begins.

The whole conversation around crawl budget has flipped. It’s no longer just about blocking Google from crawling junk pages; it’s about empowering Googlebot to crawl more by being ridiculously fast. Pure, unadulterated website speed is now one of the biggest levers you can pull to expand your crawl capacity.

Think of it like this: when Googlebot shows up, it takes the temperature of your server. A slow, laggy server response is a huge red flag, telling Googlebot to back off to avoid overloading it. But a snappy, quick-to-respond server? That’s a green light. It gives Googlebot the confidence to ramp up, open more connections, and chew through more of your content in the same amount of time.

At the heart of website speed is a metric called Time to First Byte (TTFB). This is simply how long it takes for a browser—or Googlebot—to get the very first piece of data from your server after it makes a request. A high TTFB is a dead giveaway of a slow server, and it’s a major choke point for your crawl budget.
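For a rough TTFB spot check from your own machine, the sketch below times a GET request with Python's standard http.client module and stops the clock once the status line and headers have been read, a close proxy for the first byte. Treat it as an approximation: on a fresh connection the timing includes DNS, TCP, and TLS setup, it reflects your network location rather than Googlebot's, and the User-Agent string is a made-up placeholder.

```python
import http.client
import time


def open_connection(host: str, use_https: bool = True, timeout: float = 10.0):
    """Create an HTTP(S) connection object; no socket is opened yet."""
    conn_class = http.client.HTTPSConnection if use_https else http.client.HTTPConnection
    return conn_class(host, timeout=timeout)


def measure_ttfb(conn, path: str = "/") -> tuple[int, float]:
    """Return (status code, seconds until the status line and headers arrived).

    http.client opens the socket lazily inside request(), so on a fresh
    connection the timing includes DNS lookup, TCP connect, and TLS handshake.
    """
    start = time.perf_counter()
    # Placeholder User-Agent; substitute your own.
    conn.request("GET", path, headers={"User-Agent": "ttfb-spot-check/0.1"})
    response = conn.getresponse()  # blocks until the response headers are read
    elapsed = time.perf_counter() - start
    response.read()  # drain the body so the connection can be reused or closed cleanly
    return response.status, elapsed
```

Usage might look like `with closing(open_connection("www.example.com")) as conn: print(measure_ttfb(conn))`, with `closing` imported from contextlib. Passing the connection in, rather than creating it inside, keeps the function easy to test; take the median of several runs, since a single request is noisy.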

Lots of things can cause a sluggish TTFB, but the usual suspects are cheap hosting, bloated databases, and poor caching. Fixing these isn't just a win for your human visitors; it’s a direct invitation for Googlebot to come in and explore your site more thoroughly.

Let's pop the hood and look at the engine room of your website—the backend stuff that directly impacts your server's performance and, by extension, your crawl budget.

This shift in focus—valuing performance over just the sheer number of pages—is a big deal. We're hearing it directly from Google's own Search Relations team. It used to be that crawl budget was a headache reserved for massive sites with over a million pages. Now, the spotlight is on how efficiently any site, regardless of size, can serve its content. For WordPress users, getting a handle on WordPress speed optimization is one of the most valuable skills for boosting crawl capacity.

A website's crawl budget is no longer determined just by its size, but by the server's ability to respond quickly. Database efficiency and a low-latency infrastructure are now the critical factors in successful crawl budget optimization.

When you make your site faster and more resilient, you aren't just improving the user experience. You are actively increasing your crawl capacity, which allows Google to find and index your content faster and more completely.

And when your site is fast, you can be much more ambitious about getting new content indexed. Check out our guide on instant indexing APIs to see how a speedy site and proactive indexing go hand-in-hand.

Alright, so your site is lightning-fast and your server can handle whatever Googlebot throws at it. You've won half the battle. Now it’s time to shift your focus from raw capacity to smart demand. The goal is no longer just about being able to handle crawlers; it's about actively steering them toward your money pages.

Think of it this way: if you let Googlebot wander around your site without a map, it's going to get lost. It will inevitably burn through its allotted time exploring low-value corners, leaving your most important content to collect dust. This part is less about technical horsepower and more about strategic direction.

Your robots.txt file is your first and most powerful tool for this job. It’s like having a bouncer at the door of your website, telling search crawlers exactly which areas are off-limits. Its main purpose in crawl budget optimization is to stop bots from ever entering sections that offer zero SEO value.

What are the usual suspects? URL parameters are a big one, especially those generated by faceted navigation and internal site search. An e-commerce site, for instance, can create millions of unique URLs from simple filters like size, color, and brand. Letting Google crawl all of those is a disaster for your budget.
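To see how quickly this adds up, here's a back-of-the-envelope count in Python. The filter sizes and the 500-category figure are hypothetical. Each optional filter contributes its number of values plus one (for "not applied"), so the total is the product of those factors; the real number is usually even higher, because the same filters can appear in different parameter orders.

```python
from math import prod


def filter_url_variants(options_per_filter: dict[str, int]) -> int:
    """Distinct filter combinations for one category page.

    Each filter is optional, so it contributes (values + 1) choices:
    one of its values, or not applied at all.
    """
    return prod(count + 1 for count in options_per_filter.values())


# Hypothetical facets for a single category page.
facets = {"color": 10, "size": 8, "brand": 20, "sort": 4}
per_category = filter_url_variants(facets)
print(per_category)        # 10395
print(per_category * 500)  # 5197500 across 500 hypothetical categories
```

Even these modest numbers produce over five million crawlable URLs, nearly all of them near-duplicates of a few hundred category pages.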

A few simple Disallow directives can make a world of difference:

```text
Disallow: /*?color=
Disallow: /*?size=
Disallow: /search/
```

By adding rules like these, you’re telling Googlebot not to even bother requesting those pages. This saves that precious budget for the URLs that actually matter—your core category and product pages.
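Before deploying rules like these, it helps to test them against real URLs. The sketch below implements Google-style matching as documented for robots.txt: `*` matches any run of characters, a trailing `$` anchors the end of the URL, and when several rules match, the longest one wins, with Allow winning a tie. Python's built-in urllib.robotparser does plain prefix matching and doesn't understand these wildcards, which is why the matching is written by hand here. It's a simplified sketch that assumes a single user-agent group and skips percent-encoding normalization; note that Allow/Disallow lines only take effect inside a `User-agent` group, which is why the sample file starts with one.

```python
import re


def parse_rules(robots_txt: str) -> list[tuple[str, str]]:
    """Collect (directive, pattern) pairs for Allow/Disallow lines.

    Simplification: assumes the file has a single user-agent group.
    """
    rules = []
    for raw_line in robots_txt.splitlines():
        line = raw_line.split("#", 1)[0].strip()  # drop comments
        if ":" not in line:
            continue
        field, value = line.split(":", 1)
        field = field.strip().lower()
        if field in ("allow", "disallow"):
            rules.append((field, value.strip()))
    return rules


def pattern_to_regex(pattern: str) -> re.Pattern:
    """Translate a robots.txt path pattern into a regex matched from the start of the path."""
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    body = ".*".join(re.escape(piece) for piece in pattern.split("*"))
    return re.compile(body + ("$" if anchored else ""))


def is_allowed(rules: list[tuple[str, str]], url_path: str) -> bool:
    """Longest matching pattern wins; Allow wins when lengths tie."""
    best_length, allowed = -1, True  # no matching rule means crawling is allowed
    for directive, pattern in rules:
        if not pattern:
            continue  # an empty Disallow blocks nothing
        if pattern_to_regex(pattern).match(url_path):
            length = len(pattern)
            if length > best_length or (length == best_length and directive == "allow"):
                best_length, allowed = length, directive == "allow"
    return allowed


ROBOTS_TXT = """\
User-agent: *
Disallow: /*?color=
Disallow: /*?size=
Disallow: /search/
"""

rules = parse_rules(ROBOTS_TXT)
for path in ["/products?color=blue", "/products?sort=price&color=blue",
             "/search/shoes", "/products/blue-shirt"]:
    print(path, "allowed" if is_allowed(rules, path) else "blocked")
```

The second URL comes back allowed, which is a common gotcha: `/*?color=` only matches when `color` is the first query parameter, so a rule such as `Disallow: /*&color=` would be needed to catch it elsewhere in the query string.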

While robots.txt is a sledgehammer that blocks crawling entirely, the noindex and nofollow meta tags offer more surgical control. The noindex tag is your way of telling Google, "Hey, you can look at this page, but please don't add it to your search results."

This is perfect for pages that are necessary for users but have no place in the SERPs. Think login pages, post-purchase "thank you" pages, or thin content you can't get rid of.

The nofollow attribute (rel="nofollow" on a link), on the other hand, asks Google not to follow that specific link or pass authority through it. Google treats it as a hint rather than a strict command, but it's still useful for discouraging Googlebot from getting stuck in "infinite spaces," like a calendar with an endless "next month" link that goes on forever.

Important Distinction: A page blocked by robots.txt can't be crawled, so Google never sees any noindex tag on that page, and the bare URL can still end up indexed if other pages link to it. Use robots.txt to fence off entire sections you want crawlers to stay out of. Use noindex for individual pages you're okay with Google crawling, but not indexing.
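To see where a noindex actually lives, here's a small sketch that checks a fetched page for it in both places Google reads it: a robots meta tag (name "robots" or "googlebot") or the X-Robots-Tag HTTP header. It uses Python's standard html.parser; the page and header values in the usage are made up, and it deliberately ignores user-agent-scoped header forms such as `googlebot: noindex`. The check only matters for crawlable URLs: if robots.txt blocks a page, Googlebot never fetches this HTML at all.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        # html.parser lowercases attribute names; valueless attributes arrive as None.
        attributes = {name: (value or "") for name, value in attrs}
        if attributes.get("name", "").lower() in ("robots", "googlebot"):
            for token in attributes.get("content", "").split(","):
                self.directives.add(token.strip().lower())


def has_noindex(html: str, headers: dict[str, str]) -> bool:
    """True if the page opts out of indexing via a meta tag or the X-Robots-Tag header."""
    parser = RobotsMetaParser()
    parser.feed(html)
    header_tokens = set()
    for name, value in headers.items():
        if name.lower() == "x-robots-tag":  # HTTP header names are case-insensitive
            header_tokens.update(token.strip().lower() for token in value.split(","))
    return "noindex" in parser.directives or "noindex" in header_tokens


# Hypothetical pages for illustration.
thank_you_page = '<html><head><meta name="robots" content="noindex, follow"></head></html>'
print(has_noindex(thank_you_page, {"Content-Type": "text/html"}))  # True
print(has_noindex("<html></html>", {"X-Robots-Tag": "noindex"}))   # True
print(has_noindex("<html></html>", {}))                            # False
```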

Your XML sitemap should be a direct, curated roadmap you hand to search engines. It's a list of your best, most valuable, indexable URLs. It is absolutely not a place to dump every single URL your site has ever generated.

A bloated sitemap filled with redirects, non-canonical URLs, or pages you've blocked in robots.txt just sends mixed signals and wastes crawl budget.

Use the `<lastmod>` tag to signal when a page was last updated, and keep it honest: Google relies on `<lastmod>` only when it's consistently accurate. Accurate dates encourage Googlebot to come back and recrawl your fresh content.

Large websites, in particular, face huge challenges with crawl budget because search engines have to spread limited resources across potentially millions of URLs. For them, aggressive management, including content audits and blocking low-value pages with robots.txt, is non-negotiable. It's how you ensure your revenue-driving pages get crawled and indexed first.
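The sitemap curation described above can be sketched with Python's xml.etree.ElementTree. It assumes you already have a crawl export listing each URL's status code, canonical target, noindex flag, and last content change; the `PageRecord` fields and example URLs are hypothetical. Only pages that return 200, are self-canonical, and are indexable make it into the file, each with a `<lastmod>` date.

```python
import xml.etree.ElementTree as ET
from dataclasses import dataclass
from datetime import date

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


@dataclass
class PageRecord:
    """Hypothetical row from a site crawl export."""
    url: str
    status: int           # HTTP status code returned by the URL
    canonical: str        # target of the page's rel="canonical"
    noindex: bool         # True if the page carries a noindex directive
    last_modified: date   # date the content last meaningfully changed


def is_sitemap_worthy(page: PageRecord) -> bool:
    """Keep only live (200), self-canonical, indexable URLs."""
    return page.status == 200 and page.canonical == page.url and not page.noindex


def build_sitemap(pages: list[PageRecord]) -> str:
    urlset = ET.Element("urlset", {"xmlns": SITEMAP_NS})
    for page in filter(is_sitemap_worthy, pages):
        url_el = ET.SubElement(urlset, "url")
        ET.SubElement(url_el, "loc").text = page.url
        # Use the real content-change date, not the time the sitemap was generated.
        ET.SubElement(url_el, "lastmod").text = page.last_modified.isoformat()
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


pages = [
    PageRecord("https://example.com/shoes", 200, "https://example.com/shoes", False, date(2024, 5, 1)),
    PageRecord("https://example.com/old-promo", 301, "https://example.com/sale", False, date(2023, 1, 1)),
    PageRecord("https://example.com/shoes?sort=price", 200, "https://example.com/shoes", False, date(2024, 5, 1)),
    PageRecord("https://example.com/thank-you", 200, "https://example.com/thank-you", True, date(2024, 4, 2)),
]
print(build_sitemap(pages))  # only https://example.com/shoes survives the filter
```

The redirect, the non-canonical sort variant, and the noindexed thank-you page are all dropped, which is exactly the kind of mixed signal a bloated sitemap would otherwise send.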

Ultimately, your internal linking structure is the most powerful and organic way to signal a page's importance to Google. Pages that receive a lot of internal links from authoritative parts of your site are clearly flagged as important.

Think about it. If you launch a new product page and link to it from your homepage, a main category page, and a popular blog post, you're sending very strong signals that this page deserves attention.

On the flip side, a page buried five clicks deep with only a single, lonely link pointing to it is basically screaming, "ignore me." If you want to dive deeper into the nuts and bolts of getting Google's attention where it counts, you might want to learn how to request that Google crawl your site in our detailed guide.
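To find buried pages like that, you can run a breadth-first search over your internal link graph starting at the homepage: the level at which BFS first reaches a page is its click depth, and counting distinct linking pages gives its internal inbound links. The link graph below is a made-up example (in practice you'd build it from a site crawl), and the depth and link-count cutoffs are illustrative, not Google thresholds.

```python
from collections import Counter, deque


def click_depths(links: dict[str, list[str]], home: str = "/") -> dict[str, int]:
    """Breadth-first search from the homepage; depth = minimum number of clicks."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # BFS reaches each page first via a shortest path
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def inbound_counts(links: dict[str, list[str]]) -> Counter:
    """How many distinct pages link to each URL (self-links ignored)."""
    counts = Counter()
    for source, targets in links.items():
        for target in set(targets):
            if target != source:
                counts[target] += 1
    return counts


def buried_pages(links, home="/", max_depth=3, max_inbound=1):
    """Pages deeper than max_depth that also have at most max_inbound internal links."""
    depths = click_depths(links, home)
    inbound = inbound_counts(links)
    return [
        (page, depth, inbound[page])
        for page, depth in sorted(depths.items(), key=lambda item: item[1])
        if depth > max_depth and inbound[page] <= max_inbound
    ]


# Hypothetical internal link graph: page -> pages it links to.
site = {
    "/": ["/shoes", "/blog", "/new-product"],
    "/shoes": ["/shoes/running", "/new-product"],
    "/blog": ["/blog/post-1", "/new-product"],
    "/shoes/running": ["/shoes/trail"],
    "/shoes/trail": ["/shoes/trail-2019"],
    "/shoes/trail-2019": ["/shoes/trail-2019-specs"],
}
print(buried_pages(site))
# [('/shoes/trail-2019', 4, 1), ('/shoes/trail-2019-specs', 5, 1)]
```

Here `/new-product` sits one click from the homepage with three internal links, while the two flagged pages are deep and thinly linked. Pages that never appear in `click_depths` at all are orphans with no internal path from the homepage.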

If you're only looking at server logs and Google Search Console reports, you're playing defense. It's a great start, for sure, but you're fundamentally reacting to crawl budget issues after they've already happened. To truly get ahead, especially on a large or complex site, you need to shift from reacting to problems to proactively stopping them in their tracks.

This is where dedicated crawl management tools change the game.

Modern platforms are designed to automate all the tedious parts of crawl analysis. Instead of drowning in raw log data, you get a clean, real-time picture of what search engine bots are doing on your site. Think of it as a 24/7 watchtower that flags issues the second they pop up.

Imagine discovering a massive redirect chain weeks after a clumsy site update, knowing it has already wasted thousands of Googlebot's hits. Or, picture a spike in 404 errors from a minor code push that goes completely unnoticed until your rankings start to tank. These are precisely the scenarios that a proactive, tool-driven approach is built to prevent.
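Even without a dedicated platform, you can approximate that kind of alert with a scheduled script. This sketch compares today's share of Googlebot requests that returned 404 against a trailing baseline and flags a jump. The daily counts, the 2x multiplier, and the 1% floor are all hypothetical values you'd tune for your own site; the per-day status counts could come from a log summary like the one earlier in this guide.

```python
from statistics import mean


def error_share(status_counts: dict[int, int], status: int = 404) -> float:
    """Fraction of a day's Googlebot requests that returned the given status."""
    total = sum(status_counts.values())
    return status_counts.get(status, 0) / total if total else 0.0


def is_404_spike(history: list[dict[int, int]], today: dict[int, int],
                 multiplier: float = 2.0, floor: float = 0.01) -> bool:
    """Flag today if its 404 share is above the floor AND multiplier x the baseline."""
    baseline = mean(error_share(day) for day in history) if history else 0.0
    current = error_share(today)
    return current >= floor and current > multiplier * baseline


# Hypothetical daily status-code counts for Googlebot requests.
history = [
    {200: 9800, 301: 150, 404: 50},
    {200: 9700, 301: 200, 404: 100},
    {200: 9900, 301: 40, 404: 60},
]
after_bad_deploy = {200: 8000, 301: 100, 404: 1900}
normal_day = {200: 9850, 301: 80, 404: 70}
print(is_404_spike(history, after_bad_deploy))  # True
print(is_404_spike(history, normal_day))        # False
```

The floor keeps tiny sites from alerting on a handful of 404s, while the multiplier adapts to each site's normal error level.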

Instead of waiting for GSC to slowly update, these platforms monitor your site’s health and crawler activity continuously. That shift is absolutely crucial for protecting your crawl budget.

This dashboard from IndexPilot is a perfect example of how crawl data can be visualized for quick, actionable insights. You can see trends in your submitted URLs and their indexing status at a glance.

When data is laid out this clearly, you can immediately spot anomalies—like a sudden drop in successfully indexed pages—and dig into the root cause right away. No more waiting around.

One of the most impactful features of modern crawl management tools is automated sitemap handling. Let's be honest, a static XML sitemap that you update manually is almost always stale on an active website. It's sending outdated signals to search engines, which is the last thing you want.

Tools like IndexPilot solve this by connecting directly to your site and watching for changes in real-time.

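When you publish a new blog post, update a product page, or change a URL, the platform can automatically update your sitemap and notify participating search engines, such as Bing, through the IndexNow protocol. Google doesn't currently support IndexNow, so for Google the up-to-date sitemap is what carries that signal. Either way, search engines get a fresh, accurate map of your most important content instead of a stale one.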
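For reference, an IndexNow notification is just an HTTP POST with a small JSON body. This sketch builds one with Python's standard library, following the protocol's published format: a host, your key, an optional keyLocation, and a urlList. It assumes you've already generated a key and host it as a text file on your site as the protocol requires; the key and URLs below are placeholders, and the request is built but not sent so you can inspect it first.

```python
import json
import re
import urllib.request
from typing import Optional
from urllib.parse import urlparse

INDEXNOW_ENDPOINT = "https://api.indexnow.org/indexnow"
KEY_PATTERN = re.compile(r"^[A-Za-z0-9-]{8,128}$")  # key format described by the protocol


def build_indexnow_request(urls: list[str], key: str,
                           key_location: Optional[str] = None,
                           endpoint: str = INDEXNOW_ENDPOINT) -> urllib.request.Request:
    """Build (but don't send) a bulk IndexNow POST for URLs on a single host."""
    if not urls:
        raise ValueError("urls must not be empty")
    if not KEY_PATTERN.match(key):
        raise ValueError("key must be 8-128 characters: letters, digits, or dashes")
    host = urlparse(urls[0]).netloc
    if any(urlparse(url).netloc != host for url in urls):
        raise ValueError("all URLs in one submission must share the same host")
    payload = {"host": host, "key": key, "urlList": list(urls)}
    if key_location:
        payload["keyLocation"] = key_location
    return urllib.request.Request(
        endpoint,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json; charset=utf-8"},
        method="POST",
    )


request = build_indexnow_request(
    ["https://www.example.com/blog/new-post", "https://www.example.com/products/widget"],
    key="0123456789abcdef",  # placeholder; use your own key
)
print(request.get_method(), request.full_url)  # POST https://api.indexnow.org/indexnow
print(json.loads(request.data)["host"])        # www.example.com
```

Sending it would be `urllib.request.urlopen(request, timeout=10)`. A 200 or 202 response means the submission was received, which is not the same as the pages being crawled or indexed.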

This simple change transforms your sitemap from a passive, forgotten file into an active tool for guiding crawl demand where you want it to go. For a deeper dive, check out our guide on sitemap optimization best practices.

Not all crawl management platforms are the same. When you're looking at different options, you need to think about the specific challenges your website faces.

| Feature to Consider | Ideal for... | Key Questions to Ask |
| --- | --- | --- |
| Log File Analysis | Large, complex sites where you suspect crawl traps or inefficiencies. | How easily can it process huge log files? Does it let you segment crawl data by bot type? |
| Real-Time Monitoring | News sites, e-commerce stores, and job boards where content changes fast. | What kinds of issues trigger alerts? Can I customize the notifications? |
| Automated Sitemap & Indexing | Any site that adds or updates content often. | Does it support IndexNow for instant indexing? How does it find new or updated pages? |

By adopting one of these advanced platforms, you can elevate your crawl budget optimization from a painful, periodic task to a smooth, continuous process that runs on its own. This proactive stance gives you the power to manage even the most complicated websites efficiently, ensuring your most valuable pages are always ready for search engines. It's about spending less time digging for problems and more time capitalizing on opportunities.

Even with a solid game plan, you're bound to run into questions when you start digging into crawl budget. We hear a lot of the same ones, so I've gathered the most common queries right here. The goal is to give you direct, no-fluff answers to help you get past these hurdles and start applying these strategies with confidence.

This is a classic question. While it’s true that massive sites—think over a million pages—are the poster children for crawl budget headaches, Google's focus has shifted in a big way. These days, a small but slow website riddled with server errors can have far worse crawl problems than a huge, yet lightning-fast and efficient one.

Simply put, efficiency is the new scale.

The real challenge for large sites isn't just the page count; it's the sheer volume of low-value URLs they can accidentally generate. Think of all the combinations from faceted navigation or endless tag pages. That's what dilutes the budget. This makes it absolutely critical for larger domains to actively steer Googlebot with a clean robots.txt file, perfect sitemaps, and a rock-solid internal linking strategy.

Time and again, the biggest culprits draining your crawl budget are the same technical missteps. The good news? Plugging these leaks is often the fastest way to see a positive change.

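The usual suspects I find during audits are faceted-navigation parameter URLs, long redirect chains, soft 404 errors, and endless tag archives or other low-value pages.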

The only way to know for sure where your budget is being wasted is by regularly auditing your server logs. The data doesn't lie, and it will point you directly to the source of the problem.
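Long redirect chains are among the easiest of these leaks to detect programmatically. Given a redirect map (for example, source URL to target URL pairs exported from a site crawl), the sketch below follows each chain to its final destination and flags chains with more than one hop, as well as loops. The redirect map is hypothetical; the usual fix is to point every source straight at its final URL.

```python
def resolve_chain(redirects: dict[str, str], start: str) -> tuple[list[str], bool]:
    """Follow redirects from start; return (URLs visited in order, whether it loops)."""
    chain = [start]
    seen = {start}
    current = start
    while current in redirects:
        current = redirects[current]
        chain.append(current)
        if current in seen:
            return chain, True  # we came back to a URL already visited: a loop
        seen.add(current)
    return chain, False


def audit_redirects(redirects: dict[str, str]) -> dict[str, str]:
    """Map each problem source URL to a short description of the issue."""
    problems = {}
    for source in redirects:
        chain, loops = resolve_chain(redirects, source)
        hops = len(chain) - 1
        if loops:
            problems[source] = "loop: " + " -> ".join(chain)
        elif hops > 1:
            problems[source] = f"{hops} hops; redirect straight to {chain[-1]}"
    return problems


# Hypothetical redirect map: source -> target.
redirects = {
    "/old-shoes": "/shoes-2022",
    "/shoes-2022": "/shoes-2023",
    "/shoes-2023": "/shoes",
    "/promo": "/sale",
    "/a": "/b",
    "/b": "/a",
}
for source, issue in audit_redirects(redirects).items():
    print(source, "=>", issue)
```

Single-hop redirects such as `/promo` are left alone; only multi-hop chains and loops are reported, since those are what burn extra requests on every crawl.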

There’s no single right answer here—it really depends on your site's size and how often you're publishing or changing content. But we can follow some solid rules of thumb.

For most websites, a monthly check-in is plenty. A quick look at the Crawl Stats report in Google Search Console, maybe paired with a brief server log analysis, will keep you on top of things.

However, if you're running a large, dynamic site like an e-commerce store or a news publisher, you need to be more vigilant. A weekly or even daily review is much wiser, especially right after a major site migration, redesign, or feature launch.

Absolutely. In fact, your internal linking structure is one of the most powerful—and most underrated—levers you can pull to manage crawl demand.

Pages that get a lot of internal links, especially from other important pages on your site, send a strong signal to Google: "This page matters." This encourages more frequent crawls. For more on how digital strategies connect, the meowtxt blog often has some great discussions.

Think of a logical internal linking architecture as a map you're handing to Googlebot, guiding it straight to your most valuable content. On the flip side, if you bury a critical page deep within your site with hardly any links pointing to it, you're telling Google it's not a priority. Strategic linking ensures your crawl budget gets spent on the pages you actually want to rank.

This fundamental link between crawling and indexing is everything. To get a clearer picture of how crawlers discover and process your content, our guide on how search engine indexing works breaks it all down.

Ready to stop reacting to crawl issues and start proactively managing your site's indexing? IndexPilot automates the entire process, from real-time sitemap monitoring to instant indexing pings. Take control of how search engines see your site and ensure your most important content is always found first. Start your free 14-day trial of IndexPilot today.