So, you’ve launched your new website. You’re excited, ready for the traffic to roll in… and then, crickets. When you search for your site on Google, it’s nowhere to be found. It’s a frustrating moment, but don't panic—this is an incredibly common issue, especially for new sites, and it's almost always fixable.

The root of the problem is usually indexing. Think of Google’s index as a gigantic, planet-sized library. If your website isn’t cataloged in that library, no one can find it, no matter how hard they search. Before we jump into complex technical fixes, the first step is to figure out if Google even knows your site exists.

Right now, we're just in diagnostic mode. We’re not fixing anything yet. We’re simply gathering the essential information to understand what’s going on. This is about establishing a clear picture of your current status so we can make smart decisions later.

The quickest and easiest way to check if your site is in Google's index is with a special search command called an operator.

Just go to Google and type site:yourdomain.com into the search bar. Make sure to replace "yourdomain.com" with your actual website address.

This simple test is your starting point. Seeing no results confirms a fundamental indexing problem that needs your immediate attention. After all, you can't get a piece of the 5+ trillion searches Google handles every year if your site isn't even in the game. You can dig deeper into Google's massive search ecosystem to understand just how much visibility you're missing out on.

Before we go any further, it's time to run a few more quick checks. These initial steps can often reveal the most common culprits behind your site's invisibility.

Perform these initial checks to diagnose why your website isn't visible on Google.

| Initial Check | How to Perform It | What the Result Means |
|---|---|---|
| Site Search | Go to Google and search for `site:yourdomain.com`. | No results? Your site isn't indexed. Seeing results means it is indexed, but may not be ranking. |
| Robots.txt File | Go to `yourdomain.com/robots.txt`. | Look for `Disallow: /`. If you see this, you're telling Google not to crawl your entire site. |
| Noindex Tag Check | View the source code of your homepage (Ctrl+U or Cmd+Option+U). | Search for `<meta name="robots" content="noindex">`. If it's there, you're blocking indexing. |
| Manual Actions | Check the "Manual Actions" report in Google Search Console. | If you see an action, Google has penalized your site, which can cause deindexing. |
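If you'd rather not eyeball the page source by hand, the noindex check from this checklist can be scripted. The sketch below uses only Python's standard library and assumes you've already saved the page's HTML as a string; the class and function names are hypothetical. Beyond the exact tag in the checklist, it also treats a `googlebot`-specific meta tag and the `none` directive (shorthand for `noindex, nofollow`) as blocking.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects the content of <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        # HTMLParser lowercases tag and attribute names; attribute values can be None
        attr_map = {name: (value or "") for name, value in attrs}
        if attr_map.get("name", "").lower() in ("robots", "googlebot"):
            self.directives.append(attr_map.get("content", "").lower())


def has_noindex(html):
    """Return True if a robots or googlebot meta tag blocks indexing."""
    parser = RobotsMetaParser()
    parser.feed(html)
    for content in parser.directives:
        tokens = {token.strip() for token in content.split(",")}
        # "none" is shorthand for "noindex, nofollow"
        if tokens & {"noindex", "none"}:
            return True
    return False


homepage = '<html><head><meta name="robots" content="noindex, follow"></head></html>'
print(has_noindex(homepage))  # True
```

This only inspects the HTML you give it, so run it against the version of the page your server actually delivers.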

Completing this checklist gives you a solid foundation. If you've found a "Disallow" rule or a "noindex" tag, you've likely found your smoking gun. If not, it's time to dig deeper with the right tool.

Whether your site showed up in the "site:" search or not, your very next step is to set up Google Search Console. This isn’t just a recommendation; it’s an absolute necessity for anyone who's serious about their website’s performance in search.

Think of it as the official communication channel between you and Google. It provides priceless data, sends you alerts when things go wrong, and gives you the tools to understand—and improve—your site's visibility. Setting up Google Search Console is the bridge from guessing to knowing. It gives you direct access to the reports that will pinpoint exactly why your website isn't showing up.

Key Takeaway: Don't panic if your site is invisible. The "site:" search gives you an instant answer, and Google Search Console provides the tools to diagnose the root cause. This initial diagnostic step is the foundation for every fix we'll cover next.

Now that you have Google Search Console set up, you've unlocked the command center for your website's health. This is where you stop guessing about indexing issues and start getting concrete answers directly from Google.

Forget about all the noise and overwhelming data for a minute. Your first stop should be the Pages report, tucked under the "Indexing" section of the left-hand menu. This report is gold. It shows you every page Google knows about and, more importantly, all the ones it decided not to index.

That "Not indexed" list? That's your new to-do list.

Diving into the "Not indexed" tab, you'll see a bunch of technical-sounding reasons. Don't let them intimidate you. They're just Google's way of telling you what's wrong. Let's break down the two you'll see most often.

1. Discovered - currently not indexed

This one is a classic. It’s frustrating, but it's also incredibly common. It means Google knows your page exists (it found the URL, usually through a link or your sitemap) but just hasn't gotten around to actually crawling it yet.

Think of it like this: Google saw your page, put it on a massive to-do list, and walked away.

2. Crawled - currently not indexed

Okay, this one stings a bit more. It means Google actually took the time to visit your page, read your content, and then made a conscious decision to pass on it. It looked at what you had to offer and said, "Nope."

Expert Insight: If you see a huge number of pages flagged as "Crawled - currently not indexed," treat it as a red flag. It often means Google isn't just judging one page; it may have quality concerns about your site as a whole. The fix isn't about tweaking a single article; it's about raising your overall content game.

The Pages report gives you the 10,000-foot view, but the URL Inspection Tool is your magnifying glass for getting up close. You can find it right in the top search bar of GSC. Just grab the full URL of a page that isn't indexed and paste it in.

This tool gives you a full diagnostic report for that one specific page, showing you exactly how Google sees it. You'll get straight answers to critical questions:

- Is there a noindex tag in the page’s HTML? That's a direct order telling Google to stay away.

Imagine you find out your most important service page isn't indexed. You could spend weeks rewriting it, but a quick check with the URL Inspection Tool might reveal that a developer accidentally left a noindex tag on it during a site update. Boom. Problem solved in minutes, not months.

By combining the big-picture view from the Pages report with the surgical precision of the URL Inspection Tool, you can finally stop guessing. You now have a clear path to figuring out exactly why your pages aren't getting indexed and what you need to do about it. If you want a more detailed breakdown, you can learn how to check if a website is indexed with our comprehensive guide.

When your site isn't showing up on Google, it's easy to jump straight to worrying about content or keywords. But from my experience, the root of the problem is often something much more fundamental—technical roadblocks that stop Google's crawlers dead in their tracks.

Think of it like this: if Googlebot can't even get through your digital front door, your brilliant content might as well be invisible.

Before you can fix these issues, you need to know how to spot them. It all starts with putting on your technical SEO hat and understanding how Google really sees your site. The first stop? Your robots.txt file.

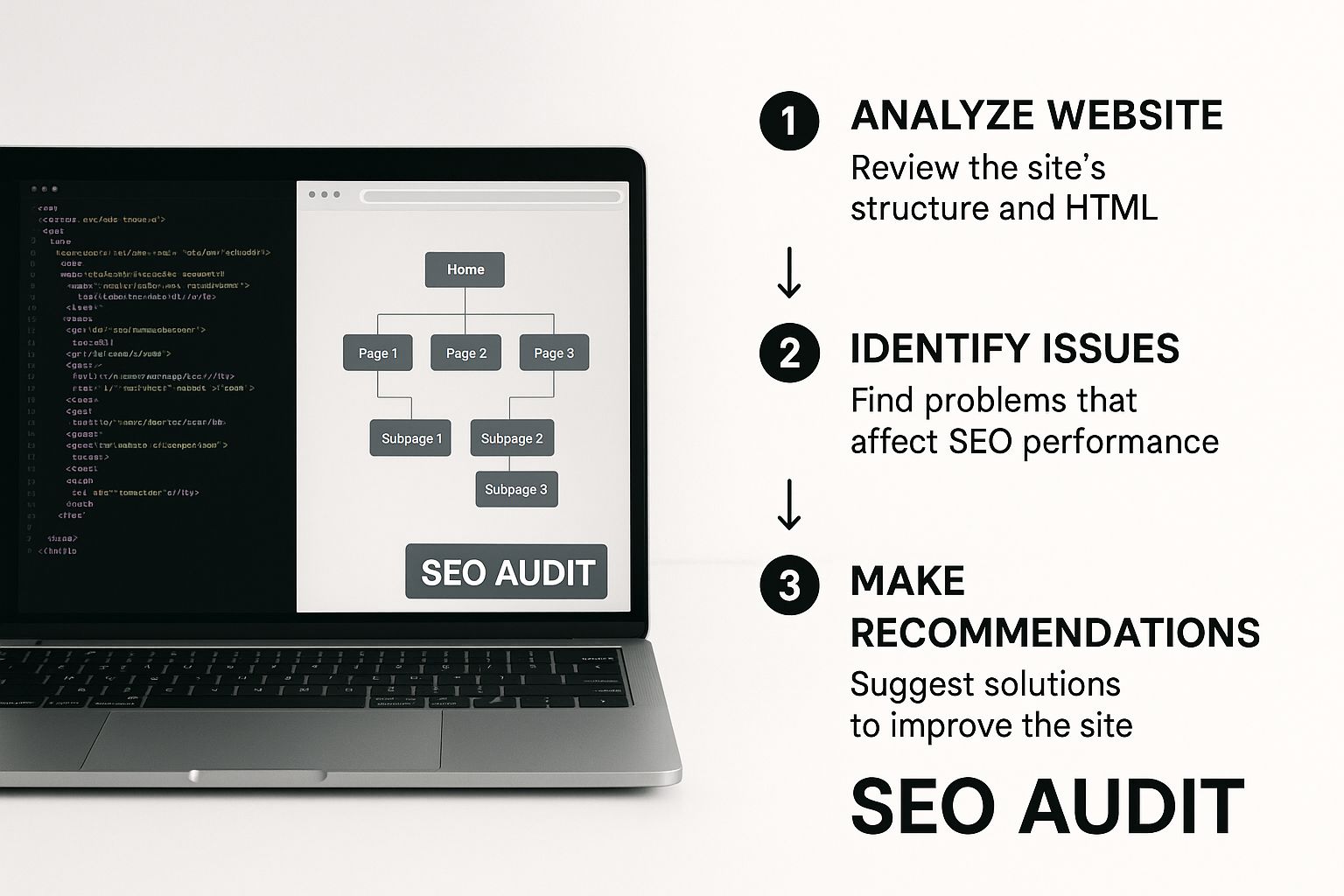

This image gives you a solid visual of what a proper technical audit looks like. It’s all about clearing a path for the crawlers by checking the code, sitemaps, and structure.

Let's break down exactly what you need to check.

The robots.txt file is a tiny but mighty text file that lives at the root of your domain (like yourwebsite.com/robots.txt). Its job is to give search crawlers simple instructions on which parts of your site they should and shouldn't access. While it's incredibly useful, it's also incredibly easy to mess up.

One of the most devastating mistakes I see is an overly aggressive "Disallow" rule. Placed under User-agent: *, a single, simple line like Disallow: / tells every crawler to stay out of your entire website. It's the digital equivalent of putting a "Condemned" sign on your front door.

Go check this file right now. Are you accidentally blocking important directories like /blog/ or /products/? I've seen simple typos in this file render huge, critical sections of a site completely invisible to Google.
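To check whether your robots.txt blocks the pages you care about, Python's built-in `urllib.robotparser` can evaluate the rules offline. This is a minimal sketch: the robots.txt text and URL list are made-up examples, and `blocked_urls` is a hypothetical helper. One caveat: Python's parser applies the first matching rule using simple prefix matching, while Google follows the most specific (longest) matching rule and supports `*` and `$` wildcards, so treat the result as a quick sanity check rather than a definitive verdict.

```python
from urllib.robotparser import RobotFileParser

# Example robots.txt content; in practice, paste in the file from yourwebsite.com/robots.txt
ROBOTS_TXT = """\
User-agent: *
Disallow: /blog/
"""

IMPORTANT_URLS = [
    "https://yourwebsite.com/",
    "https://yourwebsite.com/blog/my-first-post",
    "https://yourwebsite.com/products/widget",
]


def blocked_urls(robots_txt, urls, user_agent="Googlebot"):
    """Return the URLs that the given robots.txt rules disallow for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


print(blocked_urls(ROBOTS_TXT, IMPORTANT_URLS))
# ['https://yourwebsite.com/blog/my-first-post']
```

Swapping the rule for `Disallow: /` would report every URL in the list as blocked, which is exactly the site-wide mistake described above.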

While your robots.txt file tells Google where not to go, an XML sitemap does the exact opposite. It’s a clean, organized roadmap of every single page you want Google to find and index. It's basically a formal invitation for Googlebot.

Without a sitemap, Google has to find your pages by following one link to the next, which is slow and messy, especially for new or large websites.
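Most CMS platforms and SEO plugins generate the sitemap for you, but seeing the format helps when you're debugging one. Here's a minimal sketch that builds a sitemap following the sitemaps.org protocol with Python's standard library; `build_sitemap` and the example pages are hypothetical. Each `<url>` entry needs an absolute `<loc>`, while `<lastmod>` is optional.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Build sitemap XML from (absolute_url, lastmod_date) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc  # special characters like & are escaped automatically
        ET.SubElement(url, "lastmod").text = lastmod  # W3C date format, e.g. 2024-05-01
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


print(build_sitemap([
    ("https://yourwebsite.com/", "2024-05-01"),
    ("https://yourwebsite.com/blog/new-post", "2024-05-03"),
]))
```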

Key Takeaway: A clean, submitted, and error-free XML sitemap is one of the fastest ways to tell Google, "Hey, all my important stuff is right here. Come and get it."

A logical site structure isn't just for your human visitors; it's absolutely critical for search engine crawlers, too. A flat, well-organized site architecture lets Googlebot move efficiently from page to page, discovering and indexing your content as it goes.

Picture your homepage as the trunk of a tree. Your main categories are the big branches, and your individual posts or product pages are the leaves. A crawler should be able to get to any leaf in just a few "hops" from the trunk.

This is where internal linking becomes your secret weapon. Every time you link from one page on your site to another, you're creating a clear path for crawlers and passing along authority. Linking from a powerful page (like your homepage) to a newer page tells Google that the new page is important and worth paying attention to.
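The "few hops" idea is easy to measure if you have a map of your internal links. The sketch below runs a breadth-first search from the homepage to find each page's click depth, and flags orphan pages that no internal link reaches. The link graph is a hypothetical dictionary; in practice you might build it from a crawl of your own site and take the full page list from your sitemap.

```python
from collections import deque


def click_depths(links, start="/"):
    """Breadth-first search: minimum number of clicks from start to each reachable page."""
    depths = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # first visit in BFS is the shortest path
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


# Hypothetical internal-link graph: page -> pages it links to
links = {
    "/": ["/blog/", "/products/"],
    "/blog/": ["/blog/post-1", "/blog/post-2"],
    "/blog/post-2": ["/blog/post-3"],
    "/products/": ["/products/widget"],
}
# Every page you want indexed, e.g. taken from your sitemap
known_pages = {"/", "/blog/", "/blog/post-1", "/blog/post-2", "/blog/post-3",
               "/products/", "/products/widget", "/old-landing-page"}

depths = click_depths(links)
orphans = sorted(known_pages - set(depths))
print(max(depths.values()))  # 3 (deepest page is /blog/post-3)
print(orphans)  # ['/old-landing-page']
```

An orphan like `/old-landing-page` is a good candidate for a new internal link from a strong page such as the homepage.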

Crawler traps are nasty structural problems that can cause a search bot to get stuck in an endless loop, completely wasting your site's precious crawl budget. Your crawl budget is the finite amount of time and resources Google will spend crawling your site. If it gets wasted on dead ends, your most important pages might never get seen.

Some of the most common traps include:

- Faceted navigation or filter URLs that spawn endless parameter combinations (e.g., ?color=red&size=large&brand=x).

Fixing these usually requires a bit of technical work, like using canonical tags to point all the duplicate URLs to a single master version, or tweaking your robots.txt to block crawlers from URLs with certain parameters. Optimizing your crawl budget is so vital that we've written an entire guide on how to increase your Google crawl rate.
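To get a feel for how many URLs faceted filters can spawn, and which single version a canonical tag should point to, you can normalize crawled URLs by stripping filter parameters. This is a sketch under a big assumption: `FACET_PARAMS` is a hypothetical list, and which parameters are safe to drop depends entirely on your site (a `page` parameter, for instance, usually changes the content, so it is kept here).

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical: parameters that only filter or sort a listing, not change its core content
FACET_PARAMS = {"color", "size", "brand", "sort"}


def canonical_url(url):
    """Drop facet parameters and sort the rest so equivalent URLs collapse to one."""
    parts = urlsplit(url)
    kept = [(key, value) for key, value in parse_qsl(parts.query, keep_blank_values=True)
            if key not in FACET_PARAMS]
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(sorted(kept)), ""))


crawled = [
    "https://yourwebsite.com/shoes?color=red&size=large&brand=x",
    "https://yourwebsite.com/shoes?brand=x&color=red",
    "https://yourwebsite.com/shoes?size=large",
    "https://yourwebsite.com/shoes?page=2&sort=price",
]

# Group every crawled variant under the URL it collapses to
groups = {}
for url in crawled:
    groups.setdefault(canonical_url(url), []).append(url)

for canonical, variants in groups.items():
    print(f"{canonical} <- {len(variants)} variant(s)")
```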

Technical SEO can feel intimidating, but getting these fundamentals right—robots.txt, sitemaps, and site structure—solves a huge chunk of the problems that keep sites from getting indexed. By clearing these technical hurdles, you're essentially rolling out the welcome mat for Google's crawlers.

If you’ve wrestled with all the technical gremlins and your pages still aren’t getting indexed, it’s time for a reality check. Sometimes, the reason your site isn't showing up on Google has nothing to do with code or crawlers.

It’s the content itself.

Google has zero incentive to index pages it considers thin, duplicative, or just plain unhelpful. Its entire business model relies on giving users the best possible answer, and if your content doesn't meet that standard, it's not getting a spot in the index. Simple as that.

Once you’ve ruled out technical problems, the real work begins: improving content quality and understanding the role of content in SEO.

Let's break down the most common content-related roadblocks. I see these trip up even experienced marketing teams, but the fixes are usually straightforward once you know what to look for.

| Content Problem | Why It Happens | How to Fix It |
|---|---|---|
| Thin Content | The page offers little to no unique value. It might be short, or just rehash information found elsewhere without adding new insights, data, or examples. | Bulk up the content with original research, customer testimonials, unique images, or expert commentary. Go deeper on the topic than competitors. |
| Duplicate Content | Identical or nearly identical content exists on multiple URLs, either on your site (e.g., e-commerce filters) or copied from another website. This confuses search engines. | Use a canonical tag (`rel="canonical"`) to point all variations to a single "master" page. For syndicated content, ensure the original source is credited properly. |
| Poor Search Intent Match | The content doesn't align with what a user actually wants when they type in a specific query. For example, offering a historical article for a "best of" search. | Analyze the top-ranking pages for your target keyword. What format are they? Are they informational, commercial, or transactional? Adjust your content to match that intent. |
| Low-Quality or AI-Generated Content | The text is generic, lacks a human voice, contains factual errors, or reads like it was spun by a robot. Google's helpful content systems are designed to sniff this out. | Rewrite the content from a human-first perspective. Add personal anecdotes, specific examples, and ensure it's edited for clarity, accuracy, and tone. |
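Spotting near-duplicates across dozens of pages by eye is tedious. One common technique (not Google's own method, which isn't public) is to compare overlapping word sequences, or shingles, using Jaccard similarity. This sketch assumes you've already extracted each page's main text; the function names and sample sentences are hypothetical.

```python
import re


def shingles(text, size=3):
    """Return the set of overlapping `size`-word sequences in the text."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}


def similarity(text_a, text_b, size=3):
    """Jaccard similarity of two texts' shingle sets: 0.0 = no overlap, 1.0 = identical."""
    a, b = shingles(text_a, size), shingles(text_b, size)
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


page_a = "Our red running shoes are light, durable and built for long distances."
page_b = "Our blue running shoes are light, durable and built for long distances."
print(round(similarity(page_a, page_b), 2))  # 0.67
```

A pair scoring close to 1.0 is a candidate for consolidation or a canonical tag; the exact cutoff you act on is a judgment call.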

By systematically identifying and fixing these issues, you're not just trying to please an algorithm; you're creating a better experience for your users, which is exactly what Google wants to reward.

Of all the content issues, failing to match search intent is the most critical. You can have a perfectly written, 10,000-word masterpiece, but if it doesn't answer the user's underlying question, it's dead in the water.

Think about it. Someone searching for "best running shoes" is in a buying mindset. They want reviews, comparisons, and lists. If your page is a deep dive into the history of a single shoe brand, you've missed the intent completely. Google knows this and won't rank your page for that query.

The competition is brutal. An estimated 58.5% of Google searches in the U.S. end without a click, because users get their answer directly on the search results page. This makes it absolutely essential that your content is so well-aligned with user needs that it becomes a candidate for a featured snippet or an answer in an AI overview.

Ultimately, fixing content issues means shifting your mindset from, "What can I publish today?" to, "What unique value can I provide to a searcher?"

When you address thinness, eliminate duplication, and master search intent, you create content that Google isn't just willing to index—it's eager to.

And once you've published that fantastic new or updated content, don't just wait around. Make sure Google sees it right away. Our guide on instant indexing (https://www.indexpilot.io/blog/instant-indexing) shows you how to close the loop and get your valuable pages noticed fast.

Fixing indexing errors is a great start, but it's fundamentally a reactive game. The real win comes from shifting your mindset from "fix-it" mode to a proactive "maintain and grow" strategy. This is all about actively managing how and when Google discovers your content, instead of just waiting for problems to pop up in Search Console.

Think of it this way: a proactive approach is about consistently sending positive signals that tell Google your site is active, valuable, and deserves attention. When a website isn't showing up on Google, it's often because it has simply fallen off the search engine's radar. A proactive strategy makes sure you stay on it.

When you publish a killer new blog post or overhaul a critical landing page, don't just cross your fingers and hope Google finds it. Give it a direct nudge. This is exactly what the URL Inspection Tool in Google Search Console is for.

Just paste the URL of your new or updated page into the inspection tool. After it runs a quick analysis, you'll see a button to "Request Indexing." Clicking this essentially puts your page into a priority queue for Google's crawlers. It’s no magic bullet for instant indexing, but it’s a powerful signal that tells Google, "Hey, look over here—something new and important is ready."

Backlinks are so much more than a ranking factor; they are discovery paths. Every time a reputable, relevant website links to your content, it acts as a strong endorsement. Google's crawlers follow these links, and a link from a high-authority site is like a trusted referral telling them your page is worth visiting and indexing.


This external validation encourages more frequent crawling, which is a must-have for getting new content found quickly. Your goal should be earning links from sites within your niche to build a contextually relevant backlink profile.

Proactive Tip: Don't just publish and pray for links. Actively share your best work with industry peers, contribute to expert roundups, and build resources that other sites will naturally want to reference. Each new backlink is a brand new path for Google to find you.

Google's crawlers learn from your behavior. If your publishing schedule is all over the place—one article this month, five the next, then radio silence for two months—Googlebot has no incentive to visit your site regularly. Why would it?

But if you get into a consistent rhythm, whether it's one new article a week or two a month, you can train Google to come back more often. Predictable updates can raise your crawl rate because the bot learns to expect fresh content, and a steady cadence signals that your site is alive and actively maintained.

Your XML sitemap is the official roadmap of your website. A proactive strategy involves more than just submitting it once and forgetting it. Thankfully, most modern CMS platforms like WordPress can update your sitemap automatically whenever you publish new content. If you haven't enabled this feature, do it now. It's critical.

Once it's automated, your job shifts to monitoring its health inside Google Search Console. Make a habit of checking the "Sitemaps" report for errors. A clean, error-free sitemap ensures Google can always find an updated list of all the pages you want it to see.

For anyone who wants to go deeper, you can explore guides on how to submit your website to search engines to make sure you're using every tool at your disposal. This mix of manual requests, consistent publishing, and automated monitoring is the backbone of a strong, proactive indexing strategy that will keep you visible and ahead of the competition.

Even after you've run all the right technical checks and audited your content, a few questions about Google visibility almost always pop up. It's frustrating when you've done the work but still feel in the dark. Let's clear up those lingering uncertainties once and for all.

Think of this as filling in the last few gaps in your knowledge so you can move forward with total confidence.

How long does indexing take? Honestly, there's no single magic number. For a brand-new website, getting indexed can take anywhere from a few days to several weeks. Patience is a big part of the SEO game, but that doesn't mean you're powerless.

You can give Google a major hint by submitting a clean XML sitemap directly through Google Search Console. For your most important pages—think your homepage or key service pages—using the "Request Indexing" feature in the URL Inspection Tool is like a direct nudge, telling Google, "Hey, look at this now!"

Why would a site suddenly disappear from Google? It’s a gut-wrenching feeling: one day your site is indexed and getting traffic, the next it’s gone. This isn't just random bad luck; something specific almost certainly changed.

Start your investigation by checking these usual suspects:

- A site change that accidentally added a noindex tag, effectively telling Google to drop your pages.

Your first move should be to use the URL Inspection Tool on your homepage. It will tell you exactly what Google sees right now. For a more thorough breakdown of these issues, our guide on common website indexing issues is a great resource.

Can your site get indexed without backlinks? The technical answer is yes. Google can discover and index a website without a single backlink, especially if you’ve submitted a sitemap. But focusing on that misses the entire point.

Backlinks are essentially votes of confidence from other websites. They signal to Google that your content is authoritative and important, which encourages it to crawl and index you more quickly and more often. A site with zero backlinks is going to have an incredibly tough time getting visibility for any keyword worth ranking for.

Key Takeaway: Indexing is possible without backlinks, but ranking and achieving real, meaningful visibility is far harder. Once you've fixed your foundational indexing problems, learning how to rank higher on Google through a solid backlink strategy is the critical next step.

Can a manual action make your site disappear? Absolutely. A manual action is one of the most severe reasons a site might vanish from Google's search results. This isn't an algorithm update; it's a direct penalty applied by a human reviewer at Google who believes your site has violated Google's quality guidelines.

The fallout can be anything from a sharp drop in rankings to the complete removal of your pages—or your entire site—from the index. If you even remotely suspect this is the problem, head straight to the "Manual actions" report in Google Search Console. It’s the only place to get a definitive answer.

Stop waiting for Google to find your content. IndexPilot automates the entire indexing process by monitoring your sitemap and instantly notifying search engines of new or updated pages. Ensure your hard work gets seen faster and drive more organic traffic. Start your free 14-day trial of IndexPilot and put your indexing on autopilot.