It’s a common misconception that Google finds and indexes your site’s changes the moment you hit "publish." The reality is a lot less instant. Googlebot runs on its own complex schedule, which means your most important updates—a critical price change, a new service page, or a major content overhaul—can sit unnoticed for days or even weeks.

Knowing when to proactively nudge Google for a recrawl is a fundamental part of smart SEO.

This is what separates reactive webmasters from proactive SEOs. It’s not about spamming the "request indexing" button every day. It’s about using it strategically when the stakes are high, like a priority pass to get Google’s attention when it matters most.

Understanding when and how to request a recrawl is a crucial component of effective Search Engine Optimization (SEO) and ensures your hard work actually gets seen and ranked.

Certain situations just can't wait for Google's regular crawl cycle. You need to act. Here are the big ones I see all the time:

A stray noindex tag was blocking your most important service pages from showing up in search. After you remove it, a manual recrawl request tells Google, "Hey, I fixed the problem, please come re-evaluate this page and get it back in the index ASAP!"

Key Insight: The entire point of a manual recrawl request is to shorten the delay between you making a change and that change being reflected in Google's search results.

In competitive niches, this speed is a massive advantage. I’ve seen proactive URL submissions cut indexing delays by up to 50% compared to just sitting back and waiting for Googlebot to show up on its own schedule. It’s a simple action with a tangible impact on how quickly you see results.

When you need to get a single, high-priority page indexed fast, your best bet is the URL Inspection Tool right inside Google Search Console. This is my go-to method for that brand new cornerstone article, an updated service page, or a product listing I’ve just finished optimizing.

It’s incredibly straightforward. Just head over to your Search Console property, paste the full URL into the search bar at the top, and hit Enter. Google then looks the URL up in its index and reports the status of the version it last crawled.

Think of this as the first diagnostic step for any manual recrawl request.

The tool will tell you flat out whether the URL is on Google or not. If it is, you’ll see all the details—its current indexed status, mobile usability, and any schema markup it found. If it’s not on Google, it usually gives you a clue why, like a noindex tag or some other crawl anomaly.

Here’s what you want to see—a report with all green checkmarks.

Those green checks are your confirmation that the page is in Google's index and doesn't have any major technical issues holding it back from search results.

This tells you the page is "live," but it doesn't mean Google has seen your most recent changes. That's where the actual request comes in.

Pro Tip: Don't just submit and walk away. Always use the "Test Live URL" feature first. This forces Google to check the page as it exists right now, helping you catch technical errors or rendering problems before you ask for an official recrawl.

If you've updated the page, or if it wasn't on Google in the first place, you'll see a button labeled "Request Indexing." Clicking this is like handing your URL to a bouncer to get into a priority queue.

It's crucial to understand what this button does and what it doesn't do. It signals to Google that something important has changed and is ready for another look, but it’s not a golden ticket for instant indexing. The actual recrawl is still influenced by things like your site’s overall authority and how frequently you publish fresh content. It can take anywhere from a few days to a couple of weeks. For a deeper dive, Conductor.com explains how Google's recrawl process works.

And please, don't repeatedly mash the button. It won't speed anything up and just burns through your daily submission quota for no reason.

Let's be realistic. When you've just overhauled dozens or even hundreds of pages, manually inspecting every single URL is a non-starter. It’s just not practical.

This is exactly where sitemaps shift from a simple SEO best practice to your most powerful tool for requesting a recrawl in bulk.

Instead of poking Google one URL at a time, a sitemap lets you bundle all your updated pages into a single, efficient package. The real magic, though, is in the <lastmod> tag. This little timestamp in your sitemap file tells Google the exact date and time you last touched a page.

When Googlebot recrawls your sitemap and sees a fresh <lastmod> date, it’s a massive signal that there’s new content worth its attention. Keeping this tag accurate is the key to making this whole process work. If you're a bit fuzzy on the technicals, our guide on how to create a sitemap walks you through it.
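To make the role of <lastmod> concrete, here is a minimal Python sketch that writes a sitemap with one timestamp per URL. The function name `build_sitemap`, the example URLs, and the dates are illustrative assumptions; in practice the timestamps would come from your CMS or deployment pipeline.

```python
from datetime import datetime, timezone
from xml.etree import ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Return sitemap XML for an iterable of (url, last_modified) pairs.

    `pages` is a hypothetical input shape: each item is a URL string and
    a timezone-aware datetime of the page's last real content change.
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url, modified in pages:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
        # W3C datetime format, for example 2024-05-01T09:30:00+00:00
        ET.SubElement(entry, "lastmod").text = modified.isoformat(timespec="seconds")
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


if __name__ == "__main__":
    # Example data only; replace with your own URLs and timestamps.
    example_pages = [
        ("https://example.com/pricing", datetime(2024, 5, 1, 9, 30, tzinfo=timezone.utc)),
        ("https://example.com/services", datetime(2024, 4, 20, 14, 0, tzinfo=timezone.utc)),
    ]
    print(build_sitemap(example_pages))
```

The important design choice is to change a page's <lastmod> only when its content really changed. Stamping every URL with today's date on each build makes the signal meaningless.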

Once your sitemap is up-to-date and correctly reflects all your recent changes, you just need to give Google a nudge. Head over to Google Search Console.

In the left-hand menu, find the "Sitemaps" section. All you have to do is enter your sitemap's URL and hit "Submit." That’s it. Google will then re-process the file, paying special attention to any URLs with new modification dates.

Key Takeaway: Resubmitting your sitemap isn't just a generic request for Google to crawl your site. It's like handing the crawler a map with bright red circles around every single page you've changed, making its job faster and more efficient.

This method works hand-in-glove with the URL Inspection tool. Manual inspection is perfect for one or two high-priority pages you need indexed right now. But for large-scale updates, a site migration, or launching a new language version of your site, sitemaps are the only sane way to do it.

For an even savvier approach, think about using separate sitemaps for different parts of your site. You could have one for blog posts, another for product pages, and a third for your documentation. This segmentation gives you more granular control, allowing you to signal updates for a specific section without resubmitting everything at once.
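One way to tie separate sitemaps together is a sitemap index file, which is part of the sitemaps.org protocol. The sketch below is only an illustration: the function name `build_sitemap_index` and the section file names are assumptions, not something this article prescribes.

```python
from xml.etree import ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap_index(section_sitemaps):
    """Return a sitemap index for (sitemap_url, lastmod_string) pairs.

    Each pair points at one section sitemap (blog, products, docs, ...)
    and records when that section's sitemap file last changed.
    """
    index = ET.Element("sitemapindex", xmlns=SITEMAP_NS)
    for sitemap_url, lastmod in section_sitemaps:
        sitemap = ET.SubElement(index, "sitemap")
        ET.SubElement(sitemap, "loc").text = sitemap_url
        ET.SubElement(sitemap, "lastmod").text = lastmod
    body = ET.tostring(index, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


if __name__ == "__main__":
    # Hypothetical section sitemaps; only the blog changed recently.
    print(build_sitemap_index([
        ("https://example.com/sitemap-blog.xml", "2024-05-01"),
        ("https://example.com/sitemap-products.xml", "2024-03-12"),
        ("https://example.com/sitemap-docs.xml", "2024-01-30"),
    ]))
```

With this layout you can resubmit just the section sitemap that changed, or submit the index once and let the per-section dates show where the updates are.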

Knowing which tools to use is one thing, but learning how to combine them into a smart, coordinated strategy is what gives you a real edge.

The secret is understanding that different situations call for different tactics. There's no single "best" way to request a recrawl from Google; there's only the best way for your specific, immediate goal.

For example, let's say you just fixed a glaring factual error or a broken link on a single, high-traffic page. You need speed. This is the perfect job for the URL Inspection Tool. Its direct, one-off nature ensures Google sees your most critical fix as fast as humanly possible.

But what if you've just updated the pricing across your entire 500-product e-commerce catalog? Manually inspecting every single URL would be a nightmare. That's a clear-cut case for a sitemap resubmission. Updating the <lastmod> tag for all affected products and resubmitting the sitemap is the only practical way to handle bulk changes like that.

The savviest SEOs I know almost always use a hybrid approach. Picture this: you've just wrapped up a major content overhaul on your site's top 10 most important pages. These are your money-makers, your core service pages, the ones that drive the most conversions.

Here’s how you can combine methods for the fastest, most effective results:

Start by requesting indexing for each of those top pages individually with the URL Inspection Tool. Then update your sitemap with fresh <lastmod> dates for all the pages you changed and resubmit it through Search Console. This acts as your safety net, catching everything the individual requests might miss and telling Google about the broader update.

This tiered strategy respects both your time and Google's resources. You're giving special attention where it matters most while ensuring everything else is accounted for efficiently. Mastering this balance is key for effective crawl budget optimization. If you want to dive deeper, we have a whole guide on crawl budget optimization strategies you should check out.

By matching your recrawl request method to the scale of your content update, you maximize your efficiency. A single page gets immediate, focused attention, while a site-wide change gets broad, comprehensive coverage.
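The hybrid approach can be sketched as a small planning function. This is a rough illustration under stated assumptions: `plan_recrawl` is a hypothetical helper, and the default `daily_inspection_budget` of 10 is a placeholder rather than a documented Google quota.

```python
def plan_recrawl(changed_urls, priority_urls, daily_inspection_budget=10):
    """Split changed URLs into manual requests and sitemap coverage.

    - Priority pages that changed are requested one by one, up to the
      (assumed) daily budget for manual requests.
    - Every changed page gets a fresh <lastmod> in the sitemap, which
      acts as the safety net for anything not requested manually.
    """
    # Remove duplicates while keeping the original order.
    changed = list(dict.fromkeys(changed_urls))
    priority = set(priority_urls)
    manual = [url for url in changed if url in priority]
    return {
        "request_indexing": manual[:daily_inspection_budget],
        "sitemap_lastmod": changed,
    }


if __name__ == "__main__":
    plan = plan_recrawl(
        changed_urls=["/pricing", "/blog/post-1", "/services", "/pricing"],
        priority_urls=["/pricing", "/services"],
    )
    print(plan["request_indexing"])
    print(plan["sitemap_lastmod"])
```

A single urgent fix ends up with one manual request, while a catalog-wide change is carried almost entirely by the sitemap.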

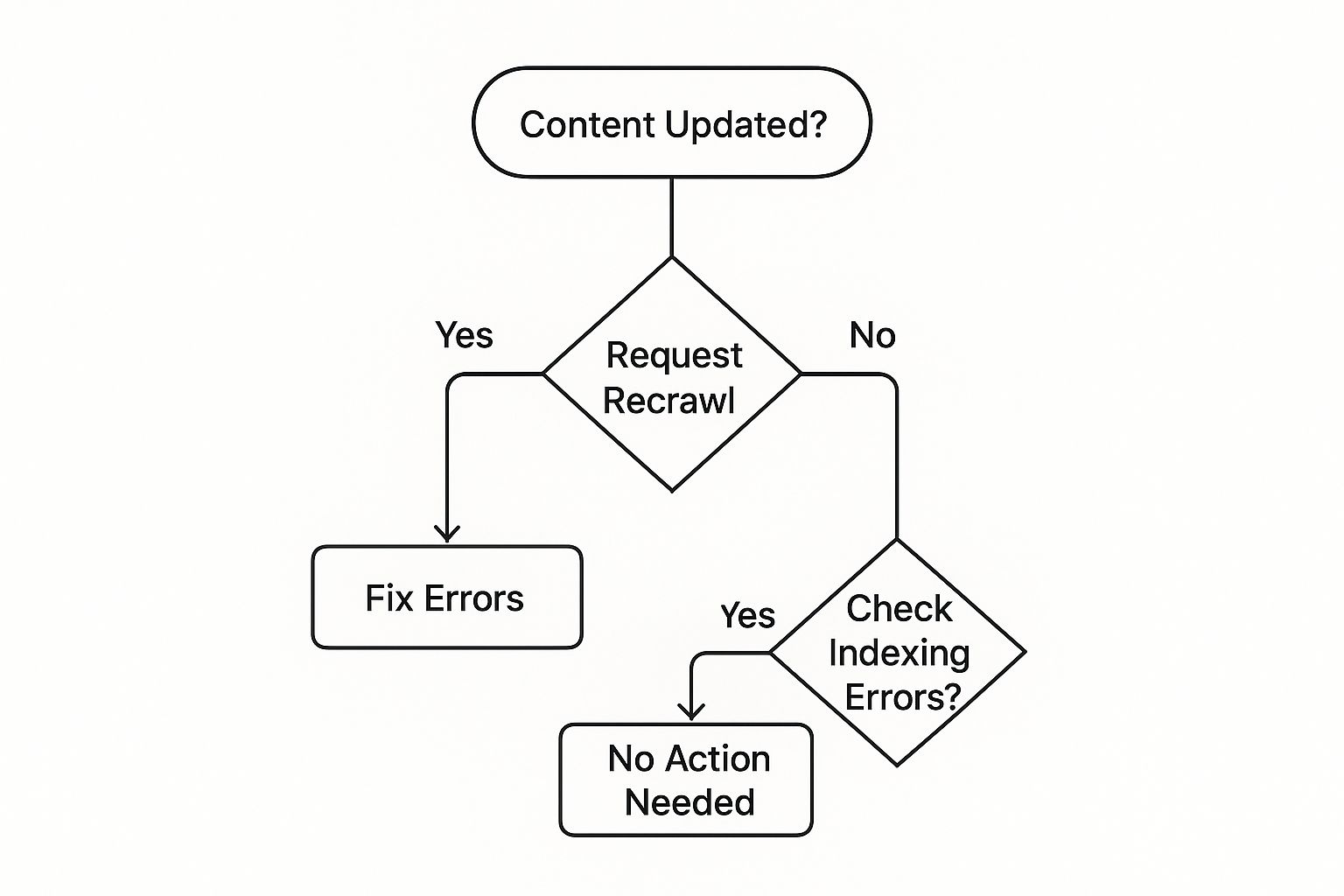

To help you decide which path to take, it’s helpful to have a quick reference guide.

Ultimately, the goal is to make a smart choice that gets your changes recognized without wasting time or crawl budget. This decision tree visualizes the simple logic behind when to act.

As the graphic shows, a recrawl request should be your go-to move right after any significant content update. It’s a core part of proactive, hands-on site management.

Requesting a recrawl is completely pointless if you're just asking Google to look at a broken or inaccessible page. Think of it like a pre-flight checklist; you have to verify everything is in order before you ask for takeoff. A failed request not only wastes your time but also burns through Google’s resources.

Submitting a recrawl request only works if the page is truly ready for prime time. The most common pitfall I see is some kind of technical roadblock that stops Googlebot dead in its tracks. These are often simple, unintentional mistakes that make your request totally useless.

Before you even think about clicking that "Request Indexing" button, you need to run through these critical checks. Seriously, spending a few seconds here can save you weeks of frustration down the road.

First, check your robots.txt file for blocking rules. A single Disallow: /blog/ rule will prevent every single post in that folder from being crawled.

Next, look for a noindex tag in the page’s <head> section. It’s incredibly easy to forget to remove this after pushing a page from a staging environment to the live site. We've all been there.

Key Insight: Google won't index a page if you're simultaneously sending it signals not to. A recrawl request can't override a noindex directive or a block in your robots.txt file.
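Both checks can be roughed out with Python's standard library. The helper names `blocked_by_robots` and `has_noindex` are illustrative, and the sketch works on text you have already downloaded. It only looks at robots.txt rules and meta tags, so a noindex sent through an X-Robots-Tag HTTP header would not be caught.

```python
from html.parser import HTMLParser
from urllib.robotparser import RobotFileParser


def blocked_by_robots(robots_txt, url, user_agent="Googlebot"):
    """Return True if the given robots.txt text disallows `url`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return not parser.can_fetch(user_agent, url)


class _RobotsMetaFinder(HTMLParser):
    """Records whether a robots meta tag contains 'noindex'."""

    def __init__(self):
        super().__init__()
        self.noindex = False

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = dict(attrs)
        name = (attributes.get("name") or "").lower()
        content = (attributes.get("content") or "").lower()
        if name in ("robots", "googlebot") and "noindex" in content:
            self.noindex = True


def has_noindex(html):
    """Return True if the HTML contains a noindex robots meta tag."""
    finder = _RobotsMetaFinder()
    finder.feed(html)
    return finder.noindex


if __name__ == "__main__":
    robots = "User-agent: *\nDisallow: /blog/\n"
    page = '<html><head><meta name="robots" content="noindex, follow"></head></html>'
    print(blocked_by_robots(robots, "https://example.com/blog/my-post"))
    print(has_noindex(page))
```

If either function returns True for a page you care about, fix that first. Requesting indexing before then will not help.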

Beyond the technical stuff, content quality is a huge factor. Submitting thin, low-value, or duplicate content is just a waste of a request. Google's algorithms are more than smart enough to recognize poor content, and asking for a recrawl won't magically change its mind.

If you find yourself wrestling with these kinds of problems, it’s worth taking a step back to figure out the root cause. You can learn more about how to diagnose these tricky situations in our detailed guide on common website indexing issues.

Even with the right tools in hand, a few questions always seem to pop up around the recrawl request process. Let's walk through the most common ones I hear, so you can manage your site’s visibility with a bit more clarity and confidence.

This is the million-dollar question, and the honest answer is: it depends. I’ve seen requests get processed in a few hours, and I've seen others take a few weeks. It's a real mix.

When you submit a URL through Search Console, you're essentially telling Google, "Hey, look at this!" which does give it a priority bump. But other factors are still at play, like your site's overall authority, how quickly your server responds, and the quality of the content itself. There's just no guaranteed timeline.

If it's been a week or two and your page is still nowhere to be found, don't just mash the resubmit button. Your first move should be to run it through the URL Inspection tool one more time. It's easy to miss a technical snag, like an accidental "noindex" tag.

If everything looks technically clean, the problem might be how important Google thinks your page is. You need to send stronger signals about its value. A couple of ways to do that:

These actions help Google understand that the page is valuable and well-connected within the wider web ecosystem.

Expert Takeaway: A recrawl request gets you in line, but solid SEO fundamentals are what move you to the front. Indexing and ranking are deeply tied to a page's authority and quality.

While you won't get slapped with a penalty for repeatedly requesting the same URL, it’s also completely ineffective. It's a waste of your time and your daily quota.

Google has been clear that hitting the button over and over for the same page won't speed things up. It’s much smarter to save your limited daily submissions for other pages that genuinely need a nudge. A big part of this is making sure your site has an efficient crawl rate to begin with; you can dig into some tips on how to increase your Google crawl rate to help with that.

At the end of the day, remember that mastering technical SEO is the foundation for all of this. The best way to support faster indexing is to keep your site primed for search engines at all times.

Ready to stop manually chasing Google? IndexPilot automates the entire process. By monitoring your sitemap in real-time and automatically pinging search engines after every update, we ensure your new content gets indexed faster, without the manual work. Start your 14-day free trial of IndexPilot today!