Just because you hit "publish" on a new webpage doesn't mean Google will find it. To get your content seen, you often need to proactively ask Google to crawl your site. This tells Google's bots to come and discover your new or updated pages, and it's the first real step toward getting indexed and showing up in search results.

Before you can effectively ask Google to visit your site, you have to get a handle on how it discovers content in the first place. Think of the web as a colossal library. Googlebot is its tireless librarian, constantly roaming the aisles, finding new books, and updating its catalog.

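This whole operation boils down to two distinct actions: crawling, where Googlebot discovers and fetches a page, and indexing, where Google analyzes that page and stores it in its searchable index.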

Getting this distinction is crucial. I've seen plenty of sites where pages get crawled but never indexed. This often happens if Google thinks the content is low-quality, a duplicate of something else, or blocked by a technical command. You can dive deeper into these nuances in our detailed guide on search engine indexing.

Here's a reality check: Google doesn't have unlimited resources. It assigns a finite amount of attention to every website, a concept we call the "crawl budget." This budget determines how many of your pages Googlebot will crawl and how often it comes back.

Your site’s crawl budget isn’t a fixed number. It’s a dynamic allocation that Google adjusts based on your site's health, size, and authority. A site with frequent, high-quality updates will naturally earn a bigger budget and more frequent visits from Googlebot.

Basically, Google has to decide if your site is worth the effort. For big sites or those that update constantly, managing this budget is everything. Things like crawl demand (how popular or fresh your content is) and crawl capacity (how fast your server responds) directly impact how Google prioritizes your pages. For the technical deep-dive, you can check out Google's official documentation on managing large site crawl budgets.

Just launching a site and hoping for the best is a painfully slow path to getting traffic. A brand-new site with no links pointing to it is like an island with no bridges—Googlebot has no obvious way to find it.

This is exactly why you have to actively request Google to crawl your site. By providing clear signals through tools like sitemaps and direct requests, you’re essentially building those bridges. This helps Google find, crawl, and ultimately index your valuable content much, much faster. It's the foundation for every other SEO strategy you'll ever implement.

When you need to get a specific page in front of Google right now, your best bet is to go straight to the source: Google Search Console (GSC). Think of it as your direct line to the search engine. While submitting a sitemap is great for telling Google about your entire site, the URL Inspection tool is what you use for surgical precision on a single page.

Let’s say you just dropped a massive, cornerstone blog post or updated a critical product page. You can’t just sit around and wait for Google to stumble upon it. That could take days, sometimes even weeks. Instead, you can use the URL Inspection tool to give Google a firm, high-priority nudge, letting it know there’s something new and important to check out.

Getting this done is surprisingly simple. Once you’re logged into your Google Search Console property, look for the search bar at the very top of the dashboard. It’s the one that says, "Inspect any URL in..." That's where the magic happens.

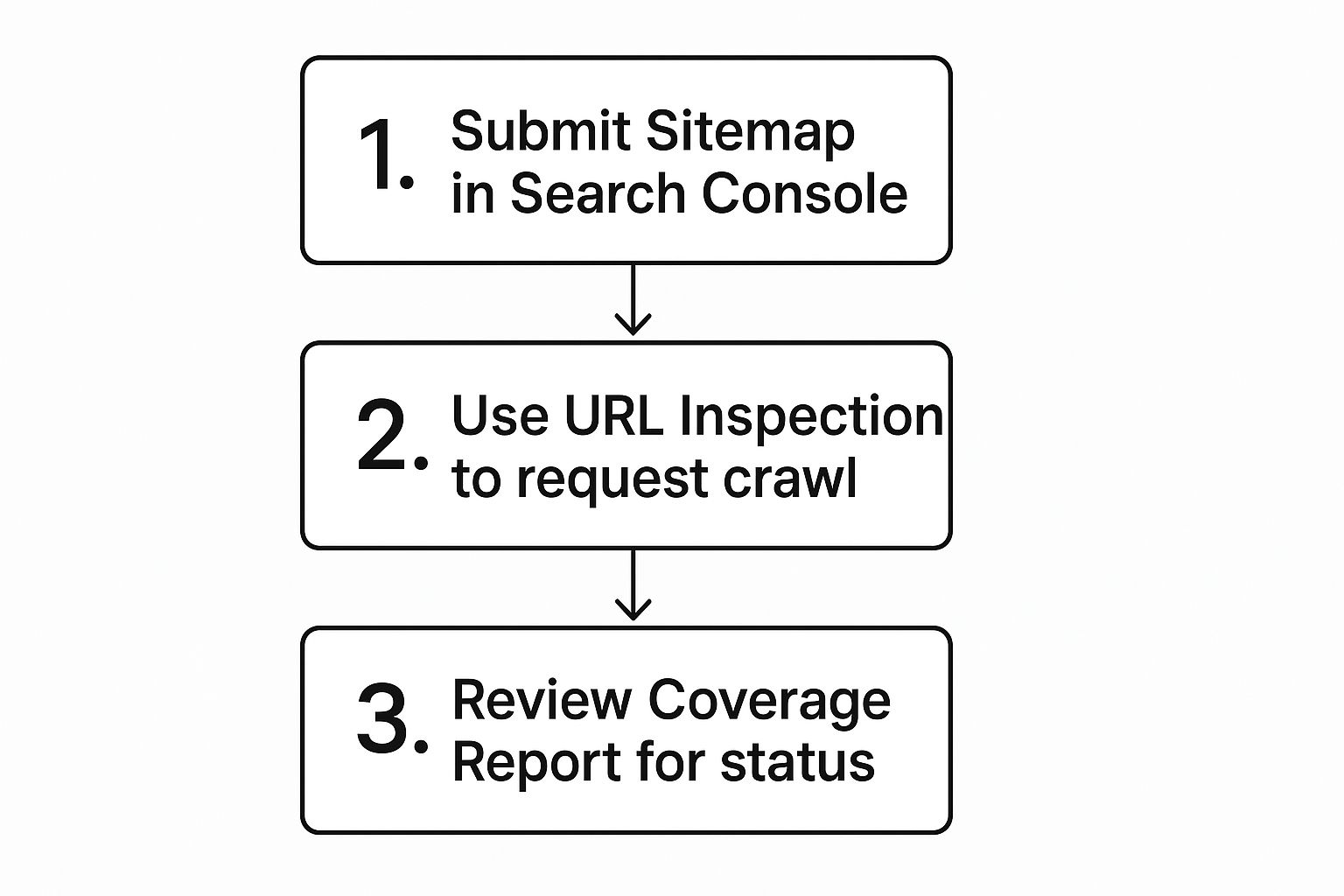

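Here’s the process in a nutshell: paste the full URL of the page into that inspection bar and press Enter, wait for Search Console to retrieve the page's current index status, then click "Request Indexing."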

That's it. You've sent the signal. Just remember, this is a request, not a command. Google’s algorithms still make the final call on what gets indexed based on factors like quality and overall site authority.

The image below shows the main dashboard of Google Search Console, which is your command center for improving your site's search performance.

This interface is where you can see how Google views your website and take direct steps to boost its visibility.

The real value of the URL Inspection tool isn’t just in hitting the "Request Indexing" button—it’s in understanding the feedback Google gives you. If a page isn't getting indexed, GSC often tells you exactly why.

A successful crawl does not guarantee indexing. The URL Inspection tool is your diagnostic partner, helping you pinpoint issues like "Crawled - currently not indexed" or "Discovered - currently not indexed" so you can fix the root cause.

For example, you might find out a page is accidentally blocked by a noindex tag in the code or a rule in your robots.txt file. Once you fix that technical error, you can come right back to the tool, run a "Test Live URL" to make sure Google can now access it, and then request indexing again. This completes the feedback loop and is an essential step after any technical SEO fix.
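Before re-running "Test Live URL," a quick local check of your robots.txt rules can confirm whether a path is blocked. The following is a minimal sketch using Python's standard `urllib.robotparser`; the sample rules and `example.com` URLs are made up for illustration, and Python's parser does not replicate every detail of Google's own matching (wildcards, for instance), so treat it as a first pass rather than the final word.

```python
from urllib.robotparser import RobotFileParser


def is_crawl_allowed(robots_txt: str, url: str, user_agent: str = "Googlebot") -> bool:
    """Return True if the given robots.txt text allows user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# Illustrative robots.txt content (an assumption, not taken from a real site).
SAMPLE_ROBOTS = """\
User-agent: *
Disallow: /private/
"""

for url in (
    "https://example.com/blog/new-post",
    "https://example.com/private/draft",
):
    status = "allowed" if is_crawl_allowed(SAMPLE_ROBOTS, url) else "blocked"
    print(f"{url}: {status}")
```

If a page you want indexed shows up as blocked, fix the rule first, then return to Search Console to test the live URL.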

If you're looking for more ways to get your site noticed, our guide on how to submit your website to search engines covers other essential methods.

When you've got more than just a handful of pages to get in front of Google, the URL Inspection tool simply won't cut it. Imagine trying to individually submit every single product page after a major e-commerce site launch—it’s just not practical. This is exactly where XML sitemaps come in.

A sitemap is your website's roadmap, handed directly to Googlebot. It efficiently guides the crawler to all your important content in one go, making it the best way to request Google to crawl your site at scale.

Whether you've just launched a brand-new site or finished a massive content overhaul, submitting a sitemap is the most reliable method for telling Google, "Hey, I've got a lot of new and updated pages for you to check out." It’s a direct, efficient signal that helps Google discover and process URLs in bulk, which is far more effective than a page-by-page approach.

These days, most modern CMS platforms like WordPress make sitemap creation almost automatic. Plugins like Yoast SEO or Rank Math will generate and maintain a sitemap for you right out of the box. If you're not on a CMS that handles this, there are plenty of third-party sitemap generator tools available.
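If you'd rather see what a generator produces, here is a rough sketch that builds a minimal sitemap with Python's standard library. The page URLs and dates are placeholders, and the function name `build_sitemap` is purely illustrative; a plugin or dedicated tool will usually handle more cases than this.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages: list[tuple[str, str]]) -> str:
    """Build sitemap XML from (absolute_url, last_modified_date) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod  # W3C date, e.g. 2025-01-15
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Placeholder pages for illustration only.
sitemap_xml = build_sitemap([
    ("https://example.com/", "2025-01-15"),
    ("https://example.com/blog/new-post", "2025-01-20"),
])
print(sitemap_xml)
```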

Once you have your sitemap's URL (it's usually something like yourdomain.com/sitemap_index.xml), you're ready to submit it.

Just head over to your Google Search Console account and find the "Sitemaps" report in the left-hand menu. You’ll see a field to add your new sitemap. Paste the URL in and hit "Submit."

Pro Tip: Your job isn’t done after you click submit. I always make it a point to check back in Search Console after a day or two. You need to look at the sitemap’s status to confirm Google successfully processed it and see how many of the URLs were actually discovered.

This report is your first line of defense. It will tell you if Google ran into any errors trying to read your file. Catching and fixing these issues quickly is crucial for making sure your pages get crawled. For those who want to really nail this, diving into advanced sitemap optimization techniques can give you a significant edge in crawlability.
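You can also catch some problems before Google does. The sketch below parses a sitemap locally, counts its URLs, and flags entries that are not absolute http(s) URLs. The sample XML and the `check_sitemap` helper are assumptions for illustration; this is not how Search Console validates files internally, just a simple pre-flight check.

```python
import xml.etree.ElementTree as ET
from urllib.parse import urlparse

NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}
MAX_URLS_PER_SITEMAP = 50_000


def check_sitemap(xml_text: str) -> dict:
    """Count URLs in a sitemap and list entries that are not absolute http(s) URLs."""
    root = ET.fromstring(xml_text.encode("utf-8"))
    locs = [el.text.strip() for el in root.findall("sm:url/sm:loc", NS) if el.text]
    invalid = [u for u in locs if urlparse(u).scheme not in ("http", "https")]
    return {
        "url_count": len(locs),
        "over_limit": len(locs) > MAX_URLS_PER_SITEMAP,
        "invalid_urls": invalid,
    }


# Illustrative sitemap with one deliberately bad (relative) entry.
SAMPLE_SITEMAP = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/</loc></url>
  <url><loc>/relative/path</loc></url>
</urlset>"""

report = check_sitemap(SAMPLE_SITEMAP)
print(report)
```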

This whole process can feel a bit abstract, but it boils down to a few key actions.

As the visual shows, submitting a sitemap and using the URL Inspection tool are the two main ways you can kick off a crawl request. After that, it's all about monitoring the results in your Coverage (now Pages) report.

To help you decide which method is right for your situation, here's a quick breakdown of the primary ways to request a Google crawl.

This table offers a quick comparison of the main methods, highlighting where each one shines and what its limitations are.

| Method | Best For | Scale | Speed of Request |
| --- | --- | --- | --- |
| Sitemap Submission | New sites, site-wide updates, large-scale content changes | High (up to 50,000 URLs per sitemap) | Slower (Google processes it on its own schedule) |
| URL Inspection Tool | A few high-priority URLs, a new page, or a critical update | Low (one URL at a time) | Faster (puts the URL in a high-priority queue) |
| IndexPilot | Automated, large-scale indexing for new & existing content | High (manages thousands of URLs automatically) | Fast (uses the Indexing API for near-instant requests) |

Choosing the right tool for the job is key. For a single new blog post, the URL Inspection tool is perfect. For a thousand new product pages, a sitemap is your go-to. And for ongoing, automated management, a tool like IndexPilot bridges the gap.
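The 50,000-URL limit per sitemap means very large sites need to split their URLs across several files. Here is a small sketch of that split; the product URLs and the `sitemap-N.xml` file names are hypothetical, and in practice each file would then be listed in a sitemap index.

```python
def split_into_sitemaps(urls: list[str], limit: int = 50_000) -> list[list[str]]:
    """Split a URL list into chunks no larger than the per-sitemap limit."""
    return [urls[i:i + limit] for i in range(0, len(urls), limit)]


# Hypothetical catalog of 120,000 product URLs.
urls = [f"https://example.com/products/{n}" for n in range(120_000)]
chunks = split_into_sitemaps(urls)

for index, chunk in enumerate(chunks, start=1):
    # File names are illustrative only.
    print(f"sitemap-{index}.xml: {len(chunk)} URLs")
```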

Just having a sitemap isn't enough; you need to keep it in good shape. A messy or outdated sitemap can send mixed signals to Google and actually hurt your crawlability.

Keep these essential best practices in mind from day one:

So, you've submitted your sitemap or requested indexing through the URL Inspection tool. Great. But your job isn't done. Now you need to see if Google actually listened.

This is where the Crawl Stats report in Google Search Console becomes your best friend. It’s a direct window into Googlebot's behavior, showing you exactly how it’s interacting with your website. Think of it as a health checkup for your site’s visibility.

This report gives you real, tangible data on how often Googlebot is visiting, what it's finding, and how your server is handling the attention. Understanding this is absolutely crucial for diagnosing those sneaky technical issues that can quietly sabotage your SEO performance.

When you first open the Crawl Stats report, you'll see a few charts. They might look a bit intimidating, but they really boil down to a handful of critical metrics that tell a powerful story about your site's crawlability.

These are the numbers that matter most: total crawl requests, total download size, and average response time.

The real magic of this report is in watching the trends. A sharp spike in crawl requests right after you publish a new content hub? That's fantastic. It means Google sees your new stuff and is eager to check it out.

But what if you launch a new section and the crawl activity stays flat? That’s your signal that something is wrong—Google might not be finding your new URLs.

This report gives you a 90-day window into Googlebot’s activity. With Google's index containing roughly 400 billion documents as of 2025, getting crawled efficiently is everything. You can learn more about what influences Google's crawl frequency on boostability.com.

Don’t just glance at the overview. Dig deeper. The report breaks down crawl requests by response type. This lets you quickly spot an increase in server errors (5xx) or "not found" errors (404s) that are chewing through your crawl budget for no reason.
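If you have access to your server logs, you can cross-check the same breakdown yourself. This is a rough sketch that assumes logs in the common "combined" format and identifies Googlebot by user agent string alone; user agents can be spoofed, so it is an approximation, and the sample log lines are invented for illustration.

```python
import re
from collections import Counter

# Matches the request, status code, and final quoted field (user agent)
# of a combined-format access log line.
LOG_PATTERN = re.compile(
    r'"\S+ (?P<path>\S+) [^"]*" (?P<status>\d{3}) .*"(?P<agent>[^"]*)"$'
)


def googlebot_status_counts(lines) -> Counter:
    """Count Googlebot requests by status class (2xx, 3xx, 4xx, 5xx)."""
    counts = Counter()
    for line in lines:
        match = LOG_PATTERN.search(line.strip())
        if match and "Googlebot" in match.group("agent"):
            counts[match.group("status")[0] + "xx"] += 1
    return counts


GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
# Invented sample lines; real logs would be read from a file.
SAMPLE_LOG = [
    f'66.249.66.1 - - [10/Oct/2025:13:55:36 +0000] "GET /blog/post HTTP/1.1" 200 5123 "-" "{GOOGLEBOT_UA}"',
    f'66.249.66.1 - - [10/Oct/2025:13:56:01 +0000] "GET /old-page HTTP/1.1" 404 312 "-" "{GOOGLEBOT_UA}"',
    f'66.249.66.1 - - [10/Oct/2025:13:57:12 +0000] "GET /checkout HTTP/1.1" 503 0 "-" "{GOOGLEBOT_UA}"',
    '203.0.113.9 - - [10/Oct/2025:13:58:40 +0000] "GET / HTTP/1.1" 200 8000 "-" "Mozilla/5.0 (Windows NT 10.0)"',
]

status_counts = googlebot_status_counts(SAMPLE_LOG)
print(dict(status_counts))
```

A rising share of 4xx or 5xx responses in this tally is the same warning sign the Crawl Stats report surfaces.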

And always, always check the breakdown by Googlebot type. In a mobile-first indexing world, you need to see that the Googlebot Smartphone agent is crawling your site often and successfully. If the desktop bot is still doing most of the work, it might point to problems with your site's mobile experience that you need to resolve.
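The same idea can be applied to user agent strings from your own logs. The sketch below separates smartphone and desktop Googlebot hits using a simple heuristic (the smartphone agent includes "Mobile" in its string); the sample strings follow the pattern Google documents, but the version numbers are placeholders and the helper names are illustrative.

```python
from collections import Counter


def googlebot_type(user_agent: str) -> str:
    """Classify a user agent as Googlebot smartphone, Googlebot desktop, or other."""
    if "Googlebot/" not in user_agent:
        return "other"  # also excludes variants such as Googlebot-Image
    return "smartphone" if "Mobile" in user_agent else "desktop"


def crawler_share(user_agents) -> dict:
    """Return the share of Googlebot hits per crawler type."""
    counts = Counter(googlebot_type(ua) for ua in user_agents)
    counts.pop("other", None)
    total = sum(counts.values())
    return {kind: n / total for kind, n in counts.items()} if total else {}


# Placeholder user agent strings for illustration.
SMARTPHONE_UA = (
    "Mozilla/5.0 (Linux; Android 6.0.1; Nexus 5X Build/MMB29P) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/120.0.0.0 Mobile Safari/537.36 "
    "(compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
)
DESKTOP_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
BROWSER_UA = "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"

shares = crawler_share([SMARTPHONE_UA] * 3 + [DESKTOP_UA, BROWSER_UA])
print(shares)
```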

This data moves you from guessing to knowing, allowing you to make precise, data-driven improvements.

While asking Google to crawl your site is a good short-term tactic, the real win is building a site that Google wants to visit on its own. Instead of constantly knocking on Google’s door, your goal should be to create a site that has a permanent open invitation.

This long-term strategy is all about proving your site's value and activity. When you do that, you naturally climb higher in Google's massive crawl queue. The best way to signal that your site is a dynamic, important, and trustworthy resource is to make it incredibly easy for Googlebot to find fresh, valuable content. Once it learns your site is a reliable source of new information, it will come back more often without you having to ask.

Think of your website's internal links as a road map for Googlebot. A sharp internal linking strategy creates clear pathways from your high-authority pages—like your homepage or a popular cornerstone article—directly to your newest content. This is one of the most powerful (and most overlooked) ways to speed up discovery.

For example, say you just published a brand-new blog post. If you immediately add a link to it from a relevant, high-traffic page that Google already crawls every day, you’ve just built an express lane for Googlebot. A simple action like this can get a page found and indexed in hours, not days or weeks.

A single, well-placed internal link from a powerful page can be far more effective than manually submitting a URL. It doesn't just pass authority (or "link equity"); it directly shapes how Google crawls your entire site.
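One way to make the "express lane" idea concrete is to measure click depth: how many links a crawler has to follow from the homepage to reach a page. This is a simplified sketch using a breadth-first search over a hand-written link graph; the site structure is invented, and click depth is only a proxy for how quickly a page gets discovered, not a metric Google publishes.

```python
from collections import deque


def click_depths(links: dict[str, list[str]], start: str = "/") -> dict[str, int]:
    """Breadth-first search: minimum number of clicks from start to each page."""
    depths = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


# Invented site structure: the new post is only linked from an older post.
site = {
    "/": ["/blog", "/products"],
    "/blog": ["/blog/old-post"],
    "/blog/old-post": ["/blog/new-post"],
}
before = click_depths(site)["/blog/new-post"]

# Add a direct link from the homepage to the new post.
site["/"].append("/blog/new-post")
after = click_depths(site)["/blog/new-post"]

print(f"Click depth before: {before}, after: {after}")
```

In this toy example, one link from the homepage moves the new post from three clicks deep to one.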

Google loves freshness. A site that regularly pushes out new, high-quality content is essentially training Googlebot to check back often. It’s like a daily newspaper subscription—if a new edition comes out every morning, you learn to look for it. The same logic applies to your website.

This doesn't mean you have to churn out multiple articles every single day. The secret ingredient is consistency. Whether your rhythm is once a week or three times a week, sticking to a predictable schedule signals to Google that there will always be something new to find. This habit helps build a healthier crawl budget over time. And for those times when you need even faster results, you can look into options for instant indexing to complement your content schedule.

If internal links are the roads you build yourself, backlinks are the highways that other respected sites build to you. When a reputable, high-authority website links to your content, it sends a massive signal to Google that says, "Hey, this page over here is important and trustworthy."

Every quality backlink serves as another discovery path for Googlebot. Even better, it lifts your site’s overall authority, which directly impacts your crawl budget and priority. The more trusted your site becomes, the more resources Google will dedicate to crawling it. This creates a fantastic positive feedback loop: higher authority leads to more frequent crawls, which leads to faster indexing.

This is more important than ever. The web is seeing a huge spike in crawling activity. Recent data from Cloudflare shows that Googlebot crawl traffic shot up by nearly 96% in just one year, a trend that lines up with the rollout of new AI search features. You can dig into the specifics in this analysis of bot traffic trends on cloudflare.com. This explosion in crawling makes it critical for your site to be as authoritative and easy to navigate as possible.

Navigating the world of crawl requests can bring up a lot of "what ifs" and "why isn't this working?" moments. Even after you’ve done everything right to get Google to crawl your site, you might have some lingering questions.

Let’s clear up some of the most common ones I hear from site owners. Understanding these finer points can make all the difference between frustration and success.

This is the million-dollar question, and the honest answer is: it varies. After you hit that request button, a crawl could happen in a few hours, or it might take several weeks. There’s just no fixed, guaranteed timeframe.

Generally, using the URL Inspection tool for a single, high-priority page is your fastest bet. It essentially pushes your URL into a priority queue. But even then, the ultimate speed depends on factors outside your direct control, like your site's overall authority, its assigned crawl budget, and how busy Google's own systems are at that moment.

A successful crawl is just the first step—it doesn't automatically equal indexing. This is a common frustration I see all the time. If Google has crawled your page but refuses to add it to its index, it’s almost always because of an underlying quality or technical issue.

Here are the most frequent culprits I run into:

- A noindex tag may be hiding in your page's HTML <head> section or in the HTTP headers. This explicitly tells Google not to index the page.
- A rule in your robots.txt file could be preventing Google from accessing the page. That blocks crawling, so Google can't read the content it would need to index.

Your best friend here is the URL Inspection tool. It will often report the specific reason, like "Crawled - currently not indexed," and give you crucial clues about what you need to fix to get the page into search results.
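To hunt for a stray noindex yourself, you can inspect both the page's HTML and its response headers. The following is a simplified sketch using Python's built-in HTML parser; it looks for "noindex" in robots meta tags and in an `X-Robots-Tag` header. The sample HTML and headers are made up, and the check ignores less common directives, so use it as a starting point only.

```python
from html.parser import HTMLParser
from typing import Optional


class RobotsMetaParser(HTMLParser):
    """Collect the content of <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = {key.lower(): (value or "") for key, value in attrs}
        if attr.get("name", "").lower() in ("robots", "googlebot"):
            self.directives.append(attr.get("content", "").lower())


def has_noindex(html: str, headers: Optional[dict] = None) -> bool:
    """Return True if a noindex directive appears in the HTML or the headers."""
    parser = RobotsMetaParser()
    parser.feed(html)
    directives = list(parser.directives)
    for name, value in (headers or {}).items():
        if name.lower() == "x-robots-tag":
            directives.append(value.lower())
    return any("noindex" in directive for directive in directives)


# Illustrative inputs, not taken from a real page.
PAGE_WITH_TAG = '<html><head><meta name="robots" content="noindex, follow"></head></html>'
CLEAN_PAGE = '<html><head><meta name="description" content="Hello"></head></html>'

print(has_noindex(PAGE_WITH_TAG))                               # meta tag case
print(has_noindex(CLEAN_PAGE, {"X-Robots-Tag": "noindex"}))     # header case
print(has_noindex(CLEAN_PAGE))                                  # nothing blocking
```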

Absolutely not, and you shouldn't. It's so important to use manual crawl requests strategically. Save them for the big stuff.

Think of it this way—use them only for significant events:

For small updates like fixing a typo, swapping an image, or rewording a sentence, it’s much better to let Google find the changes during its next scheduled crawl. Overusing the "Request Indexing" feature gives you no extra benefit, and Google even has quotas on how often you can use it.

For other methods beyond manual requests, check out our guide on finding a reliable and free pinging service to notify search engines of your updates.

Stop waiting for Google to find your content. IndexPilot automates the entire process by monitoring your sitemap and instantly notifying search engines of new or updated pages. Ensure your content gets discovered and indexed faster, driving more traffic to your site. Start your 14-day free trial of IndexPilot today.