If your pages aren't showing up on Google, it’s usually because of specific roadblocks preventing its crawlers from finding, understanding, or valuing your content. The most common culprits are technical glitches like 'noindex' tags, crawlability barriers in your robots.txt file, a messy internal linking structure, or content that Google simply deems low-quality.

Think of it this way: Google is getting much more selective and no longer indexes everything it finds. It has a job to do, and if you make that job harder, your pages get left behind.

Discovering your website is invisible on Google is a deeply frustrating experience, especially after pouring time and money into creating content. But indexing issues are rarely random; they're symptoms of underlying problems that break the search engine's simple process: crawl, index, and rank. If you're failing at step two, something is definitely broken.

And it's not just about simple technical errors anymore. While a misplaced noindex tag or a bad robots.txt rule are classic troublemakers, the reasons for indexing failures have gotten more nuanced. Google now aggressively prioritizes quality and efficiency, which means it carefully manages its crawl budget—the number of pages it’s willing to crawl on your site in a given period.

If your site is new or has low authority, Google might not crawl it often enough to find your new pages. Even for established sites, certain problems can bring indexing to a screeching halt.

To get to the root of the problem, you need to understand the most frequent reasons a page fails to get indexed. I've seen these issues pop up time and time again.

Here's a breakdown of the usual suspects:

| Issue Type | What It Means | Impact on Your SEO |
| --- | --- | --- |
| Crawl Blocks | Your robots.txt file is explicitly telling Googlebot not to visit certain pages or directories. | Google cannot read these pages, so their content cannot be indexed or ranked (at most, a bare URL may appear if other sites link to it). It's like putting a "Do Not Enter" sign on your door. |
| 'Noindex' Directives | A meta tag or X-Robots-Tag on the page instructs search engines not to add it to their index. | Google will crawl the page but will respect the directive and exclude it from search results. A common, and costly, mistake. |
| Low-Quality Content | The page offers little unique value, is too thin, or is a near-duplicate of another page on your site or elsewhere. | Google may choose not to index it to avoid cluttering its results with unhelpful content. It's a waste of their resources. |
| Poor Site Structure | A lack of internal links makes it nearly impossible for crawlers to discover the page from other parts of your site. | So-called "orphan pages" often get missed because there's no clear path for Googlebot to find them. They're lost islands. |

These are the foundational roadblocks you need to rule out first. A huge part of getting indexed is understanding how search engines crawl and interpret links. For a great deep dive, Craig Murray's guide on link indexing explained is an excellent resource. It really helps you start thinking about your site from Google's perspective.
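If you want to rule out the 'noindex' roadblock yourself, here is a minimal sketch that scans a page's HTML and response headers for the directive. The class and function names (`RobotsMetaParser`, `has_noindex`) are made up for illustration, and the check is deliberately simplified: it ignores bot-specific header prefixes and the `none` shorthand.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = {name: (value or "") for name, value in attrs}
        if attr.get("name", "").lower() in ("robots", "googlebot"):
            content = attr.get("content", "")
            self.directives += [d.strip().lower() for d in content.split(",")]


def has_noindex(html, headers=None):
    """Return True if the HTML or an X-Robots-Tag header carries 'noindex'.

    Simplified on purpose: no handling of 'googlebot: noindex' style
    header prefixes or of the 'none' directive.
    """
    parser = RobotsMetaParser()
    parser.feed(html)

    header_directives = []
    for name, value in (headers or {}).items():
        if name.lower() == "x-robots-tag":
            header_directives += [d.strip().lower() for d in value.split(",")]

    return "noindex" in parser.directives or "noindex" in header_directives


page = '<html><head><meta name="robots" content="noindex, follow"></head></html>'
print(has_noindex(page))  # True
```

Feed it the raw HTML and headers from whatever HTTP client you already use; the function itself never touches the network.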

One of the most confusing statuses you'll see in Google Search Console is "Discovered - currently not indexed." This means Google knows your page exists but has decided not to crawl and index it… yet. This can happen for all sorts of reasons, from a perceived lack of page quality to those pesky crawl budget limitations.

Key Takeaway: Being "discovered" is not a guarantee of being indexed. Google is actively deciding if your page is worth the resources to crawl and store. Your job is to convince it that the answer is yes.

Improving your site's overall health, authority, and internal linking is what moves pages from this limbo into the actual index. But before you start tearing your site apart, you need to confirm the basics. You can learn exactly how to check if your website is indexed with a few simple methods. This simple check ensures you're working with accurate information before you start troubleshooting.

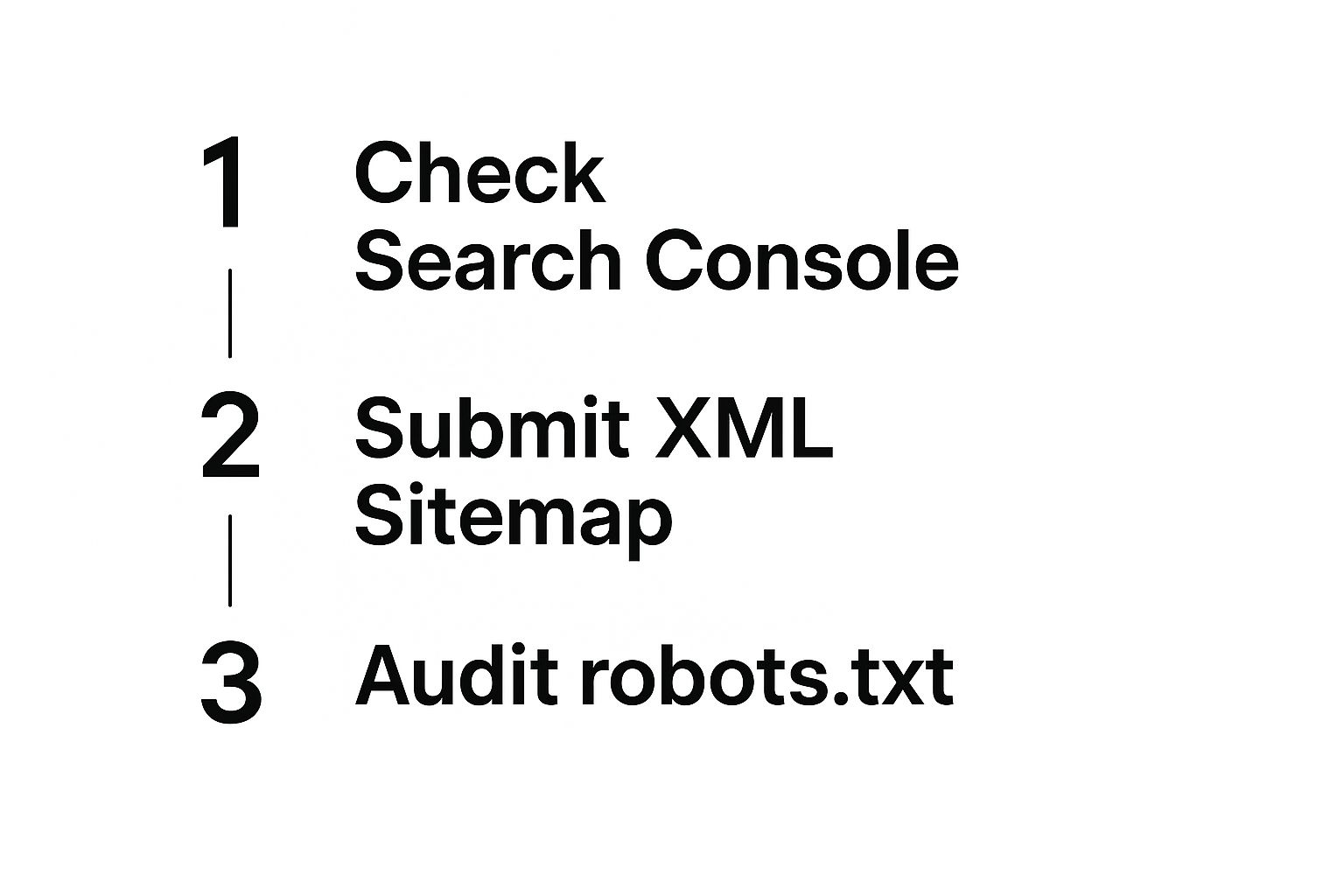

Before you can fix what's broken, you have to know why it’s broken. When it comes to website indexing, guesswork is your worst enemy. Your first and best source of truth is Google Search Console (GSC)—it’s packed with data, as long as you know where to dig.

Forget the main dashboard for a minute. Your real investigation begins in the Page Indexing report, which you’ll find under Indexing > Pages. This is where Google gives you the unvarnished truth about how it sees your site, splitting everything into two buckets: indexed and not indexed.

We’re heading straight for the "Not indexed" tab. This isn't just a list of failures; it's a diagnostic report from Google telling you exactly why certain pages aren't making the cut. Ignoring this is like a mechanic ignoring the check engine light.

Inside that report, you’ll find specific reasons for non-indexing. Two of the most common—and most frequently confused—are "Crawled - currently not indexed" and "Discovered - currently not indexed." They sound similar, but they tell completely different stories about your site's health.

Key Takeaway: "Crawled" issues are about what's on the page. "Discovered" issues are about how Google gets to the page. Nail this distinction, and you’re already halfway to a solid action plan.

Let’s say you just refreshed a bunch of old articles and suddenly see a spike in "Crawled - currently not indexed" pages. That's a huge red flag that Google thinks your new content is low-quality. On the other hand, if you launch a new section of your site and those pages pile up under "Discovered," it probably means they’re orphaned and you haven't built enough internal links to signal their importance.

While the Page Indexing report gives you the 30,000-foot view, the URL Inspection Tool is your microscope. When you’ve identified a specific, high-value page that's not indexed, this is your next click. Just copy the URL and paste it into the search bar at the very top of GSC.

This tool gives you a detailed breakdown for that single URL, covering:

Imagine you just launched a new e-commerce category, but it’s getting zero organic traffic. The Page Indexing report flags these pages as "Not indexed" because of a "Redirect error." By plugging one of the product URLs into the Inspection Tool, you might discover an old, forgotten redirect rule is mistakenly sending all the new pages back to the homepage. Without this tool, you’d be chasing ghosts.
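To make that redirect scenario concrete, here is a rough sketch that walks a chain of redirect rules and reports where a URL ends up. The `redirects` dictionary is a hypothetical stand-in for the rules on your server or CMS, and `trace_redirects` is an illustrative name, not part of any tool.

```python
def trace_redirects(start_url, redirects, max_hops=10):
    """Follow redirect rules from start_url and return (chain, status).

    redirects maps a source path to its target path (hypothetical data).
    status is "ok", "loop", or "too many hops".
    """
    chain = [start_url]
    current = start_url
    while current in redirects:
        if len(chain) > max_hops:
            return chain, "too many hops"
        current = redirects[current]
        if current in chain:
            return chain + [current], "loop"
        chain.append(current)
    return chain, "ok"


# A forgotten rule sends every new category page back to the homepage.
rules = {"/new-category/red-shoes": "/"}
print(trace_redirects("/new-category/red-shoes", rules))
# (['/new-category/red-shoes', '/'], 'ok')
```

A chain that ends on the homepage instead of the product page is exactly the kind of ghost the Inspection Tool helps you catch.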

Of course, any good diagnosis needs a baseline. A crucial first step is to accurately count the pages on your website and see how that number stacks up against what GSC reports as indexed. If there’s a massive gap between the two, you’ve just found your first major clue that something is wrong on a site-wide scale.
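As a quick sketch of that baseline comparison, the snippet below takes two lists of URLs and reports the gap between them. It assumes you have already exported your site's URLs (from a crawl or sitemap) and the indexed URLs from GSC; `indexing_gap` is a hypothetical helper name.

```python
def indexing_gap(site_urls, indexed_urls):
    """Return (missing_urls, coverage) for a site.

    missing_urls: URLs on the site that are not in the indexed list.
    coverage: share of site URLs that are indexed, between 0.0 and 1.0.
    """
    site = set(site_urls)
    indexed = set(indexed_urls)
    missing = sorted(site - indexed)
    coverage = len(site & indexed) / len(site) if site else 0.0
    return missing, coverage


site = ["/", "/pricing", "/blog/post-a", "/blog/post-b"]
indexed = ["/", "/pricing"]
missing, coverage = indexing_gap(site, indexed)
print(missing)   # ['/blog/post-a', '/blog/post-b']
print(coverage)  # 0.5
```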

Once you've used Search Console to figure out what's broken, it's time to roll up your sleeves and fix the technical gremlins causing the problem. These issues are the invisible walls blocking Google’s crawlers from reaching your content. If you want sustainable SEO results, tackling them isn't optional—it's essential.

The good news is that most indexing problems come from a small handful of common, high-impact technical errors. Once you know where to look, they're usually straightforward to fix. Let's walk through the usual suspects I see time and time again.

The first place Googlebot ever looks on your site is your robots.txt file. It’s a simple text file, but it holds a lot of power. It gives search engines direct instructions on which parts of your website they are allowed to visit. Just one misplaced character in this file can accidentally tell Google to ignore your entire site.

A classic mistake is a line that reads Disallow: /. This single command tells all crawlers to stay away from every page. I once worked with an e-commerce client who was tearing their hair out because a new product line wasn't getting indexed. It turned out a developer had added that exact rule during a site update and forgot to remove it. The site was effectively invisible to Google for weeks.

Always, always double-check that your robots.txt file is correctly configured. Make sure it allows access to all your important pages and assets, like your CSS and JavaScript files.
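One way to double-check is to test your important URLs against the file before it ships. The sketch below uses Python's standard `urllib.robotparser`; the sample rules and URLs are invented for illustration. Keep in mind that this parser applies rules in file order, while Google uses the most specific matching rule, so treat it as a sanity check rather than an exact replica of Googlebot.

```python
from urllib.robotparser import RobotFileParser


def is_crawlable(robots_txt, url, user_agent="Googlebot"):
    """Return True if the given robots.txt text allows user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# The accidental "block everything" rule described above.
BLOCK_ALL = """User-agent: *
Disallow: /
"""

# A narrower rule that only hides the admin area.
BLOCK_ADMIN_ONLY = """User-agent: *
Disallow: /admin/
"""

print(is_crawlable(BLOCK_ALL, "https://example.com/products/new-line"))         # False
print(is_crawlable(BLOCK_ADMIN_ONLY, "https://example.com/products/new-line"))  # True
print(is_crawlable(BLOCK_ADMIN_ONLY, "https://example.com/admin/login"))        # False
```

Running a check like this against a short list of must-index URLs after every deployment would have caught the stray `Disallow: /` in minutes instead of weeks.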

This initial check is the foundation of any good technical audit. It helps you quickly confirm the basics are in order before you dive into more complex problems.

Canonical tags (rel="canonical") are your best friend for managing duplicate content. They tell search engines which version of a page is the "master copy" that should get all the credit and be indexed. But when they're misconfigured, they create absolute chaos for crawlers.

Imagine you have a product page with two URLs—one for desktop and an AMP version. If they both incorrectly point to each other as the canonical, or if they point to a completely different page, Google gets confused. It doesn't know which one to index, so it often just gives up and ignores both.

I also see this happen a lot on international sites that use hreflang tags. If your US page (en-us) and your UK page (en-gb) both have a canonical tag pointing only to the US version, you can kiss your UK rankings goodbye. Your UK page will likely never get indexed, a classic case of self-sabotage that directly hurts your global reach.
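Here is a small sketch for spotting that kind of canonical mix-up. It reads the `rel="canonical"` link out of a page's HTML and tells you whether it points to the page itself or somewhere else. The names `CanonicalParser` and `canonical_status` and the example URLs are illustrative assumptions, and the URL comparison is intentionally naive (it only ignores a trailing slash).

```python
from html.parser import HTMLParser


class CanonicalParser(HTMLParser):
    """Collects the href of every <link rel="canonical"> tag."""

    def __init__(self):
        super().__init__()
        self.canonicals = []

    def handle_starttag(self, tag, attrs):
        attr = dict(attrs)
        rels = (attr.get("rel") or "").lower().split()
        if tag == "link" and "canonical" in rels and attr.get("href"):
            self.canonicals.append(attr["href"])


def canonical_status(page_url, html):
    """Classify a page's canonical as 'missing', 'conflicting', 'self', or 'points elsewhere'."""
    parser = CanonicalParser()
    parser.feed(html)
    if not parser.canonicals:
        return "missing"
    if len(set(parser.canonicals)) > 1:
        return "conflicting"
    target = parser.canonicals[0].rstrip("/")
    return "self" if target == page_url.rstrip("/") else "points elsewhere"


uk_html = '<head><link rel="canonical" href="https://example.com/en-us/widgets/"></head>'
print(canonical_status("https://example.com/en-gb/widgets/", uk_html))  # points elsewhere
```

For localized pages that should each be indexed, "self" is usually the answer you want to see; "points elsewhere" on a UK page is the self-sabotage described above.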

Your crawl budget is the finite amount of resources Google is willing to spend crawling your site. If your site is a mess, you'll waste that budget on unimportant pages, leaving your most valuable content sitting in the dark, undiscovered.

Pro Tip: Think of crawl budget like a daily allowance. If you spend it all on low-value pages—like endless tag archives or faceted navigation URLs—you won't have any left for the pages that actually drive business.

Here are two key areas to focus on to make every crawl count:

By cleaning up these technical signals and building a logical site structure, you're essentially giving Google a guided tour of your most important content. For those ready to take it a step further, our guide on how to increase your Google crawl rate offers advanced techniques to get your pages discovered even faster.

Getting a page indexed used to be the finish line. Now, it's just the starting block. The real challenge is keeping it there.

Years ago, you could publish almost anything, and Google would eventually find a spot for it. That reality has completely flipped. Today, search engines are aggressively pruning their indexes, kicking out pages they see as unhelpful, thin, or just plain redundant.

This means even if your page got indexed and ranked, it’s not permanently safe. If Google decides your content's value has dropped, its indexing status can drop right along with it. Getting a handle on this is the key to avoiding painful deindexing headaches later on.

When we talk about "unhelpful content," it’s not just about typos or bad grammar. It’s about failing to meet the searcher's needs or provide any real, unique value. Google has gotten incredibly good at sniffing out content that exists just for the sake of existing.

Here are a few real-world examples of content that's on the chopping block:

A sudden wave of deindexing hit the web in late May 2025, with many site owners reporting huge drops in their indexed page counts. One famous case involved a recipe site where dozens of "definition" style posts—easily replicated by AI—were purged from Google's index. It was a clear signal of the search engine's focus on unique value. You can dig into a detailed analysis of which pages Google started deindexing during this period.

The best defense against deindexing is a good offense. Don't sit around waiting for your traffic to flatline. Regularly auditing your existing content helps you spot and strengthen pages that might be at risk.

A great place to start is with pages showing low engagement metrics. Think high bounce rates, short time on page, and zero conversions. These are red flags telling you the content isn't hitting the mark with your visitors.

Once you have a list of potential problem pages, you need to ask some tough questions:

If the answer to any of these is "no," that page needs work. The goal is to turn every piece of content into an asset that Google wants to keep indexed. To better understand the mechanics behind this, check out our complete overview of how search engine indexing works. Boosting your content quality is a direct investment in its long-term survival.

Waiting around for Google to discover your new content is a recipe for falling behind. If you just publish and hope for the best, you’re leaving money on the table. The key is to take control of the indexing process yourself.

Being proactive isn't just about fixing existing indexing problems—it's about building a system that prevents them in the first place. This ensures your latest pages get seen, crawled, and ranked much faster. It's about creating a framework that screams value to Google from the second you hit "publish," guiding its crawlers directly to your most important content and dramatically shortening the time it takes to start driving traffic.

Think of an XML sitemap as a roadmap you hand-deliver to Google. It shows the search engine exactly where to find every important page on your site. Without one, Googlebot is left to wander around, discovering your pages by following links one by one—a slow and often incomplete process, especially for brand-new content.

But just having a sitemap isn't enough. It needs to be clean. A pristine sitemap only includes your valuable, indexable URLs—pages that return a 200 OK status code. Including redirected pages, URLs blocked by robots.txt, or pages marked noindex just sends mixed signals and wastes Google's precious crawl budget.

A classic mistake I see all the time is leaving old, redirected URLs in a sitemap after a site migration. This tells Google to waste its time crawling a page that doesn't even exist anymore. Get in the habit of auditing your sitemap regularly to make sure it’s a perfect reflection of your live, valuable content. Once it's clean, submitting it via Google Search Console is like sending a direct invitation for Googlebot to come crawl your best stuff.
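A sitemap audit along those lines can be sketched in a few lines. The example below assumes you already have crawl results for each URL; the dictionary keys (`url`, `status`, `noindex`, `blocked`) are a hypothetical format, not the output of any particular crawler. It keeps only 200 OK, indexable, unblocked pages and writes them into a standard sitemap `urlset`.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_clean_sitemap(pages):
    """Return sitemap XML containing only pages worth sending to Google.

    pages: list of dicts with the (assumed) keys
    'url', 'status', 'noindex', and 'blocked'.
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for page in pages:
        if page["status"] != 200 or page["noindex"] or page["blocked"]:
            continue  # redirects, errors, noindex, and robots-blocked URLs stay out
        url_el = ET.SubElement(urlset, "url")
        ET.SubElement(url_el, "loc").text = page["url"]
    return ET.tostring(urlset, encoding="unicode")


crawl = [
    {"url": "https://example.com/", "status": 200, "noindex": False, "blocked": False},
    {"url": "https://example.com/old-page", "status": 301, "noindex": False, "blocked": False},
    {"url": "https://example.com/thank-you", "status": 200, "noindex": True, "blocked": False},
]
print(build_clean_sitemap(crawl))
```

Only the homepage survives in this example: the redirected URL and the noindex page are filtered out before the file is ever submitted.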

For a full walkthrough on getting this foundational step right, check out our guide on how to submit your website to search engines.

Internal links are the superhighways of your website. They connect your pages, pass authority (or "link juice"), and signal to Google which content you consider most important. A new blog post with zero internal links pointing to it is what we call an orphan page—an isolated island that crawlers might struggle to find, or never find at all.

Every time you publish a new page, your first thought should be: how does this connect to the rest of my site?

Think of it this way: linking from one of your most powerful pages to a new article is like giving that new article a strong personal recommendation. You're essentially telling Google, "Hey, this new page is important and highly relevant to this other piece of content you already know and love." This one practice can be one of the most powerful things you do to speed up indexing.
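To find pages that have no such recommendation at all, you can compare your full page list against the internal links you actually have. This is a minimal sketch assuming you can export a simple map of "page → pages it links to" from your crawler; `find_orphans` and the sample paths are illustrative.

```python
def find_orphans(all_pages, links, home="/"):
    """Return pages that no other page links to.

    all_pages: every URL you expect to be indexed.
    links: dict mapping a source page to the list of pages it links to.
    home: the homepage, which is reachable without internal links.
    """
    linked_to = set()
    for source, targets in links.items():
        # A page linking to itself does not make it discoverable.
        linked_to.update(t for t in targets if t != source)
    return sorted(p for p in all_pages if p != home and p not in linked_to)


pages = ["/", "/blog", "/blog/old-post", "/blog/new-post"]
internal_links = {
    "/": ["/blog"],
    "/blog": ["/blog/old-post"],
    "/blog/new-post": ["/blog"],
}
print(find_orphans(pages, internal_links))  # ['/blog/new-post']
```

Here the new post links out to the blog index, but nothing links back to it, so it shows up as an orphan until you add a link from an existing page.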

While a solid sitemap and smart internal linking will handle most of your indexing needs automatically, sometimes you need to give Google a little nudge. The "Request Indexing" feature in Google Search Console's URL Inspection tool is your direct line to the mothership for high-priority pages.

This is especially handy for:

After you remove a noindex tag or correct a major technical error, requesting a re-crawl tells Google to come back and re-evaluate the page with the fix in place.

While Google is improving its processes, getting indexed is far from guaranteed. A recent study of over 16 million pages found that even with improvements, a staggering 61.94% of pages remained unindexed. However, for the pages that did get indexed, 93.2% were added within six months, showing that when Google decides to index, it often acts relatively quickly. Read the full research about these indexing rate findings on Search Engine Journal.

This data really drives home the need for a proactive approach. Manual requests are useful, but they're rate-limited and just not scalable for a large site. For truly time-sensitive content like job postings or live event streams, Google's Indexing API is a much more powerful and automated solution that tells Google about new or updated URLs almost instantly, and the IndexNow protocol does the same job for Bing. This is precisely what tools like IndexPilot automate, ensuring your most urgent content never gets left in the waiting room.
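For a feel of what an automated submission looks like, here is a hedged sketch that prepares an IndexNow request without sending it. The endpoint and JSON field names reflect my reading of the public IndexNow documentation, so verify them before relying on this; the key, host, and URLs are placeholders, and `build_indexnow_request` is a made-up helper. IndexNow is read by participating engines such as Bing, and this sketch says nothing about how any particular tool implements it.

```python
import json
import urllib.request
from urllib.parse import urlparse

# Shared endpoint as described in the public IndexNow docs (verify before use).
INDEXNOW_ENDPOINT = "https://api.indexnow.org/indexnow"


def build_indexnow_request(urls, key, key_location=None):
    """Build (but do not send) a POST request announcing new or updated URLs."""
    hosts = {urlparse(u).netloc for u in urls}
    if len(hosts) != 1:
        raise ValueError("all URLs in one submission must share a single host")

    payload = {"host": hosts.pop(), "key": key, "urlList": list(urls)}
    if key_location:
        payload["keyLocation"] = key_location

    return urllib.request.Request(
        INDEXNOW_ENDPOINT,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json; charset=utf-8"},
        method="POST",
    )


request = build_indexnow_request(
    ["https://www.example.com/jobs/123", "https://www.example.com/jobs/124"],
    key="your-indexnow-key",  # placeholder, not a real key
)
print(request.get_method(), request.full_url)
# To actually submit: urllib.request.urlopen(request)
```

Keeping the build step separate from the send step makes it easy to log or review exactly which URLs you are about to announce.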

Even when you feel like you have a solid grasp of technical SEO and content strategy, specific indexing questions always seem to pop up. The SEO world is notorious for its "it depends" answers, but sometimes you just need a straight-up explanation. Let's tackle some of the most common ones I hear all the time.

Getting clear on these queries helps turn that frustrating confusion into a clear path forward. We'll cut through the jargon and get right to what you actually need to know.

This is the million-dollar question, isn't it? Unfortunately, there's no magic number. The time it takes for Google to index a new page can swing wildly from a few hours to several weeks—or even longer in some frustrating cases. A few key factors are at play here.

For example, a new article on a major news site like The New York Times might get indexed within minutes because Google trusts the domain and crawls it constantly. On the flip side, a page on a brand-new website with zero authority or backlinks could languish in the "Discovered - currently not indexed" status for what feels like an eternity.

Your site's overall health and reputation are the biggest influences. The timeline really comes down to:

Key Insight: Think of indexing speed as a reflection of trust. The more Google trusts your site to consistently produce high-quality, valuable content, the more often it will send its crawlers and the faster it will index new pages. Patience helps, but proactive strategies make the real difference.

I see people use "crawling" and "indexing" interchangeably all the time, but they are two completely different steps in how a search engine handles your content. Getting this right is fundamental to fixing any website indexing issues.

Crawling is simply the discovery process. Googlebot, the web crawler, follows links from one page to another across the internet to find new and updated content. Think of it like a librarian roaming the stacks to see if any new books have been added.

Indexing, on the other hand, is the analysis and storage part. After a page is crawled, Google analyzes everything on it—the text, images, videos—and stores that information in its massive database, the index. A page is only eligible to appear in search results after it has been indexed.

Here’s the catch: a page can be crawled but never indexed. This happens when Google fetches the page but decides not to store it. Common culprits are low-quality content, duplicate pages, or a "noindex" tag telling it to keep the page out of the index. Your goal is always to get every important page both crawled and indexed. For a deeper dive, our guide on website indexing breaks down the entire process.

It’s one of the most common and maddening scenarios in SEO: your site is mostly healthy, but one important page just refuses to show up in Google. When this happens, it's time to put on your detective hat and do some targeted digging.

Your first stop, without question, should be the URL Inspection Tool in Google Search Console. Just paste the stubborn URL into the search bar at the top. This tool is your direct line to what Google knows about that specific page. It will tell you:

Whether anything is blocking indexing, such as noindex tags, robots.txt blocks, or HTTP errors (like a 404).

If the tool gives you the all-clear on the technical front, the problem is almost certainly related to quality or perceived importance. Is the content a bit thin or unoriginal? More importantly, are there any internal links pointing to it? A page with zero internal links is an "orphan," and Google will almost always assume it's unimportant.

The fix is often simple: go to a few of your relevant, high-authority pages and add contextual internal links pointing to the problem page. That one action can provide the signal of importance Google needs to finally prioritize a crawl and get it indexed.

Don't let indexing delays hold back your content's potential. IndexPilot automates the entire submission process, using real-time sitemap monitoring and the IndexNow protocol to get your new and updated pages seen by Google and Bing faster. Stop waiting and start ranking.