Sitemap optimization is all about strategically creating and managing your XML sitemap to point search engine crawlers toward your most important content. It’s way more than just generating a file and forgetting about it. Real optimization means curating a lean, focused list of your best URLs to boost crawl efficiency and get your pages indexed faster.

Think of it as a direct line of communication with Google, making sure your best pages don't get lost in the shuffle.

This screenshot right from Google's own documentation shows the basic XML structure. You can see the loc (URL), lastmod (last modified date), changefreq, and priority tags. While Google has openly said they mostly ignore changefreq and priority these days, the loc and lastmod tags are still absolutely critical for getting your site crawled effectively.
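As a rough illustration of that structure, here is a minimal Python sketch that builds a sitemap containing only <loc> and <lastmod>. The function name build_urlset and the (url, date) input format are assumptions made for this example. They are not part of any tool mentioned in this article.

```python
import xml.etree.ElementTree as ET
from datetime import date

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_urlset(pages):
    """Build a sitemap string from (url, last_modified_date) pairs.

    Only <loc> and <lastmod> are written; <changefreq> and <priority>
    are left out because Google says it largely ignores them.
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url, modified in pages:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url  # ElementTree escapes &, <, >
        # W3C Datetime format; a plain YYYY-MM-DD date is valid.
        ET.SubElement(entry, "lastmod").text = modified.isoformat()
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


if __name__ == "__main__":
    print(build_urlset([("https://www.example.com/", date(2024, 7, 30))]))
```

ElementTree automatically escapes characters such as & in URLs. The sitemap protocol requires this escaping, and hand-built string templates often forget it.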

I like to think of a website as a massive library and Googlebot as a busy librarian with no time to waste. Without a good catalog, that librarian is just wandering the aisles, hoping to stumble upon the best books. Your sitemap is that catalog. It points them directly to your most important, recently updated content.

This isn't just some technical box to check; it’s a core part of a strong SEO foundation. When you take the time to optimize your sitemap, you’re directly shaping how search engines see and interact with your website.

A clean, strategic sitemap has a real, tangible effect on your SEO. It’s not about getting every single page crawled—it’s about getting the right pages crawled and indexed without delay. This smart approach conserves your crawl budget, which is the finite number of pages Google is willing to crawl on your site in a given period.

A common mistake I see is the "bigger is better" mindset with sitemaps. The truth is, a focused sitemap with only your high-value, indexable URLs is far more powerful. It tells search engines to stop wasting time on fluff and focus on the content that actually drives rankings and traffic.

The benefits of trimming the fat are pretty clear:

This process seriously improves your SEO by making sure your important pages get indexed, which can be the difference-maker in competitive niches. Submitting a sitemap gives crawlers a guide, and using lastmod tags signals when content has been updated, helping them prioritize what to look at first.

To give you a clearer picture, here’s a breakdown of how a well-tuned sitemap directly influences key SEO metrics.

As you can see, the benefits go far beyond simple page discovery. A smart sitemap strategy is a foundational element that supports your entire SEO program.

At the end of the day, sitemap optimization is what connects your content creation efforts to actual search visibility. If a page isn't indexed, it simply can't rank—it doesn't matter how amazing the content is. By giving search engines a clear, clean roadmap, you take the guesswork out of the equation.

This is a non-negotiable first step in any solid strategy for improving your search engine indexing performance. A healthy sitemap ensures your best work gets the visibility it deserves, giving it the best possible shot at being seen, indexed, and ultimately, ranked for the keywords that grow your business.

You can't fix what you can't see. Before you dive into optimizing your sitemap, you need a clear, honest baseline of where it stands right now. This isn't about getting lost in code; it's a practical audit to find the crawl-wasting errors and hidden opportunities that are holding you back.

First things first: you need to find your sitemap. The easiest place to look is usually your robots.txt file, which lives at yourdomain.com/robots.txt. Most sites include a line there that points directly to the sitemap’s location, making it a quick find for both you and the search engines.
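If you want to script this lookup, Python's standard library can read the Sitemap: lines for you. This is a small sketch. The names sitemaps_from_robots and fetch_declared_sitemaps are hypothetical helpers, and RobotFileParser.site_maps() requires Python 3.8 or later.

```python
from urllib.robotparser import RobotFileParser


def sitemaps_from_robots(robots_txt: str) -> list[str]:
    """Return the Sitemap: URLs declared in a robots.txt body."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    # site_maps() returns None (not an empty list) when no Sitemap lines exist.
    return parser.site_maps() or []


def fetch_declared_sitemaps(robots_url: str) -> list[str]:
    """Download robots.txt (e.g. https://yourdomain.com/robots.txt) and parse it."""
    parser = RobotFileParser(robots_url)
    parser.read()
    return parser.site_maps() or []
```

The Sitemap directive is independent of any User-agent group, so it can appear anywhere in the file and still be picked up.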

Once you have that URL, it's time to dig in.

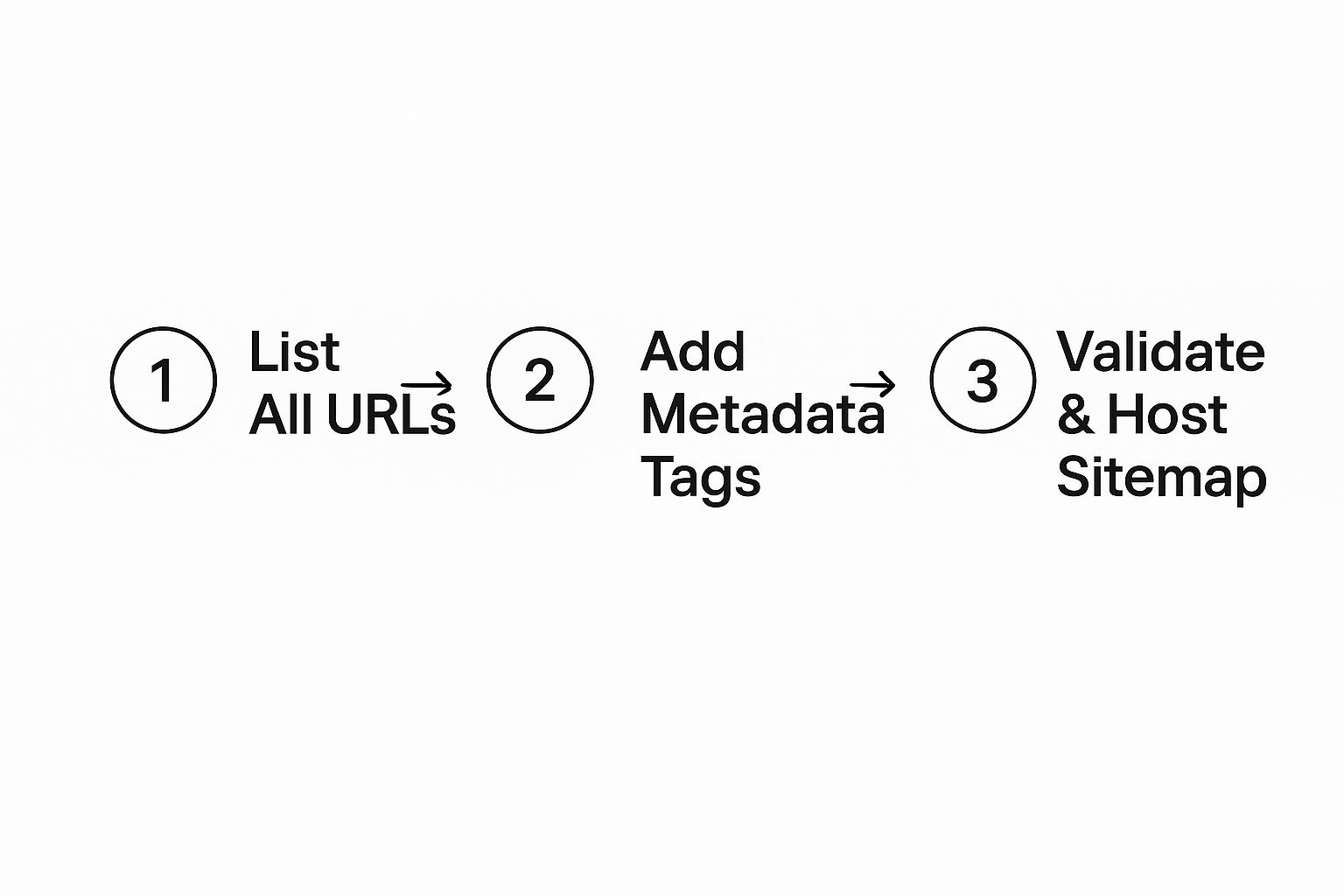

The diagram below breaks down the basic flow for creating a clean sitemap, from gathering your URLs all the way to final validation.

As you can see, a good sitemap isn’t just a raw export of every page on your site. It's a deliberately curated list, enriched with data and properly quality-checked.
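For the final validation step, a script can catch the structural problems that make a sitemap unreliable. This sketch makes a few assumptions. The file is an uncompressed <urlset> sitemap, and expected_host is the host your sitemap lives on. The limits it checks are the sitemaps.org protocol's 50,000 URLs and 50 MB (uncompressed) per file. validate_sitemap is a hypothetical name.

```python
import xml.etree.ElementTree as ET
from urllib.parse import urlparse

NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
MAX_URLS = 50_000               # per-file URL limit in the sitemaps.org protocol
MAX_BYTES = 50 * 1024 * 1024    # 50 MB, measured uncompressed


def validate_sitemap(xml_bytes: bytes, expected_host: str) -> list[str]:
    """Return human-readable problems; an empty list means the checks passed."""
    problems = []
    if len(xml_bytes) > MAX_BYTES:
        problems.append("file exceeds 50 MB uncompressed")
    try:
        root = ET.fromstring(xml_bytes)
    except ET.ParseError as exc:
        return problems + [f"not well-formed XML: {exc}"]
    if root.tag != f"{{{NS}}}urlset":
        problems.append("root element is not a namespaced <urlset>")

    locs = [el.text.strip() for el in root.iter(f"{{{NS}}}loc") if el.text]
    if len(locs) > MAX_URLS:
        problems.append(f"{len(locs)} URLs exceeds the 50,000 limit")

    seen = set()
    for loc in locs:
        parts = urlparse(loc)
        if parts.scheme not in ("http", "https") or not parts.netloc:
            problems.append(f"not an absolute URL: {loc}")
        elif parts.netloc != expected_host:
            problems.append(f"different host: {loc}")
        if loc in seen:
            problems.append(f"duplicate URL: {loc}")
        seen.add(loc)
    return problems
```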

A bloated sitemap is one of the most common—and damaging—issues I see. It actively wastes your crawl budget by sending Googlebot on a wild goose chase to pages that offer zero SEO value. Your job is to trim the fat and give Google a list of only your best, most important URLs.

Start by hunting down these usual suspects:

- Non-canonical URLs: if a page has a rel="canonical" tag pointing somewhere else, it has no business being in your sitemap. Including it just creates confusion.

A "clean" sitemap is an exercise in exclusion. The pages you choose not to include are just as important as the ones you do. Think of it as giving Google a curated tour of your best assets, not an overwhelming phonebook of every URL.

Just by removing this junk, you make your sitemap leaner, more efficient, and far more helpful to search engines.
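Here is one way to express that exclusion logic in Python. PageRecord and its fields are hypothetical. They stand in for whatever your crawler or CMS export gives you: a status code, a noindex flag, and a canonical target.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PageRecord:
    # Hypothetical crawl record; field names are illustrative only.
    url: str
    status: int                      # HTTP status code from your crawl
    noindex: bool                    # meta robots or X-Robots-Tag noindex
    canonical: Optional[str] = None  # rel="canonical" target, if any


def sitemap_eligible(page: PageRecord) -> bool:
    """Keep only pages that return 200, are indexable, and self-canonicalize."""
    if page.status != 200:
        return False  # redirects (3xx) and errors (4xx/5xx) are out
    if page.noindex:
        return False
    if page.canonical and page.canonical != page.url:
        return False  # canonicalized to a different URL
    return True


def curate(pages):
    """Return the URLs that belong in the sitemap, in their original order."""
    return [p.url for p in pages if sitemap_eligible(p)]
```

The canonical check compares strings exactly. In practice, you may want to normalize trailing slashes and letter case before comparing.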

Manual checks are a good starting point, but for a truly thorough audit, you’ll need some specialized tools. They can crawl your sitemap and cross-reference every URL with its live status, saving you hours of tedious work.

Google Search Console (GSC) is your best friend here. The "Sitemaps" report is your command center. It shows you exactly how many URLs Google found in your file versus how many it actually indexed. A big gap between those two numbers is a major red flag that something is wrong.

From there, head straight to the Page Indexing report. GSC breaks down "Why pages aren't indexed" with specific reasons like "Page with redirect," "Not found (404)," or "Excluded by ‘noindex’ tag." This isn't just data; it's a direct to-do list from Google. See a bunch of pages excluded by 'noindex'? Time to investigate if those tags were added by mistake.

Screaming Frog is another absolute powerhouse for this. You can feed it your XML sitemap file and tell it to crawl just those URLs.

Once the crawl finishes, it's incredibly easy to filter for non-200 status codes (like 404s and 301s) or check the "Indexability Status" column to find every non-indexable page. This lets you quickly pinpoint every problematic URL in your sitemap and export a list for removal. Combining what you find in GSC and Screaming Frog gives you a complete picture of your sitemap's health.
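If you'd like a scriptable complement to Screaming Frog, the sketch below pulls every <loc> from a sitemap and reports the URLs that don't return a 200. It assumes the server answers HEAD requests. Some servers return 405 instead, in which case you'd switch to GET. The names http_status, sitemap_urls, and audit are hypothetical. Redirect-following is turned off on purpose, so a 301 is reported as a 301 rather than as the final page's 200.

```python
import urllib.error
import urllib.request
import xml.etree.ElementTree as ET

NS = "{http://www.sitemaps.org/schemas/sitemap/0.9}"


class _NoRedirect(urllib.request.HTTPRedirectHandler):
    """Returning None makes urllib report 3xx codes instead of following them."""

    def redirect_request(self, req, fp, code, msg, headers, newurl):
        return None


_OPENER = urllib.request.build_opener(_NoRedirect)


def http_status(url: str, timeout: float = 10.0) -> int:
    """HEAD the URL and return its status code (0 if it couldn't be reached)."""
    request = urllib.request.Request(url, method="HEAD")
    try:
        with _OPENER.open(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as err:  # 3xx (not followed), 4xx, 5xx
        return err.code
    except OSError:  # DNS failures, refused connections, timeouts
        return 0


def sitemap_urls(xml_text: str) -> list[str]:
    root = ET.fromstring(xml_text)
    return [el.text.strip() for el in root.iter(NS + "loc") if el.text]


def audit(xml_text: str, status_of=http_status) -> dict[str, int]:
    """Map every sitemap URL that doesn't return 200 to its status code."""
    problems = {}
    for url in sitemap_urls(xml_text):
        code = status_of(url)
        if code != 200:
            problems[url] = code
    return problems
```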

For more hands-on tutorials and advanced SEO strategies, you can find a wealth of information by checking out the guides on the IndexPilot blog. Performing a regular sitemap health check, maybe once a quarter, ensures your optimization efforts pay off and your site stays in peak condition for crawlers.

Throwing all your URLs into one giant sitemap is one of the most common mistakes I see. Sure, it technically lists your pages, but it offers zero strategic value. For any site with a bit of complexity, this one-size-fits-all approach is a recipe for missed indexing opportunities and a real headache when you need to diagnose problems.

The real power comes from being smart about how you structure your sitemaps. Instead of a single file, the best practice is to break your site down into smaller, logical chunks using multiple sitemaps. This isn't just for massive sites—even a medium-sized e-commerce store or a growing publication can benefit hugely from this kind of organization.

The key to managing all these individual sitemaps is the sitemap index file. Think of it as a sitemap for your sitemaps. It’s a simple file that doesn't list any page URLs itself. Instead, it just points to the locations of your other, more specific sitemaps.

You submit this single index file (often sitemap_index.xml) to Google Search Console, and Google takes it from there, discovering and crawling each individual sitemap you've listed.

This approach gives you two massive wins:

Suddenly, you can isolate indexing performance for different sections of your site. This structure lets you answer critical questions that are simply impossible to tackle with a single, messy sitemap.

How you decide to split your sitemaps should be driven entirely by your site's architecture and your business goals. The whole idea is to group URLs in a way that gives you meaningful, actionable data.

Here are a few practical ways I’ve seen this work wonders:

- products.xml, categories.xml, blog.xml, static-pages.xml (for your About Us, Contact, etc.)
- articles-news.xml, articles-evergreen.xml, authors.xml, video.xml
- features.xml, solutions.xml, blog.xml, resources.xml (for case studies, whitepapers)

Once you set up your sitemaps this way, the "Sitemaps" report in GSC transforms from a basic validation check into a powerful dashboard. You can click right into products.xml and see its specific indexing coverage, then compare it directly to blog.xml.

All of a sudden, you can see if your new product pages are being indexed much slower than your blog posts. This kind of insight allows you to investigate potential issues—like thin content on product pages or a technical hiccup—with surgical precision. This is proactive SEO, not reactive guesswork.
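As an illustration, splitting a flat URL list into section sitemaps can be as simple as keying on the first path segment. The SECTIONS mapping and the file names below are hypothetical and mirror the products/categories/blog split above. The sketch also starts a new numbered file once a section passes the protocol's 50,000-URL limit per file.

```python
from collections import defaultdict
from urllib.parse import urlparse

MAX_URLS_PER_FILE = 50_000  # per-file limit in the sitemaps.org protocol

# Hypothetical mapping: first path segment -> sitemap file stem.
SECTIONS = {"products": "products", "category": "categories", "blog": "blog"}


def group_by_section(urls, sections=SECTIONS, default="static-pages"):
    """Return {file_name: [urls]} with one file (or more, if large) per section."""
    groups = defaultdict(list)
    for url in urls:
        segments = [s for s in urlparse(url).path.split("/") if s]
        first = segments[0] if segments else ""
        groups[sections.get(first, default)].append(url)

    files = {}
    for stem, members in groups.items():
        # Oversized sections become products.xml, products-2.xml, ...
        for start in range(0, len(members), MAX_URLS_PER_FILE):
            part = start // MAX_URLS_PER_FILE + 1
            name = f"{stem}.xml" if part == 1 else f"{stem}-{part}.xml"
            files[name] = members[start:start + MAX_URLS_PER_FILE]
    return files
```

Keying on URL paths only works if your URL structure mirrors your site sections. If it doesn't, group by a content-type field from your CMS instead.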

Actually creating the sitemap index file is pretty straightforward. The XML format looks a lot like a standard sitemap, but instead of using <url> and <loc> tags for pages, it uses <sitemap> and <loc> to point to other sitemaps.

Here’s a barebones example of what a sitemap_index.xml file looks like:

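```xml
<?xml version="1.0" encoding="UTF-8"?>
<sitemapindex xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <sitemap>
    <loc>https://www.example.com/sitemap-products.xml</loc>
    <lastmod>2024-07-28</lastmod>
  </sitemap>
  <sitemap>
    <loc>https://www.example.com/sitemap-blog.xml</loc>
    <lastmod>2024-07-30</lastmod>
  </sitemap>
</sitemapindex>
```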

Each <sitemap> entry points to one of your individual sitemap files. The <lastmod> tag here should reflect when that specific sitemap file was last modified, giving search engines a hint about what's fresh.
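To generate an index like this from code, a sketch along these lines works. It assumes you already know the lastmod dates of the pages in each child sitemap. Each <sitemap> entry's <lastmod> is set to the newest of those dates, since a child file changes whenever one of its pages does. build_sitemap_index is a hypothetical name.

```python
import xml.etree.ElementTree as ET
from datetime import date

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap_index(children: dict[str, list[date]]) -> str:
    """children maps each child sitemap URL to the lastmod dates of its pages."""
    index = ET.Element("sitemapindex", xmlns=SITEMAP_NS)
    for sitemap_url, page_dates in children.items():
        node = ET.SubElement(index, "sitemap")
        ET.SubElement(node, "loc").text = sitemap_url
        if page_dates:
            # The child file changed when its most recently updated page did.
            ET.SubElement(node, "lastmod").text = max(page_dates).isoformat()
    body = ET.tostring(index, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


if __name__ == "__main__":
    print(build_sitemap_index({
        "https://www.example.com/sitemap-products.xml": [date(2024, 7, 2), date(2024, 7, 28)],
        "https://www.example.com/sitemap-blog.xml": [date(2024, 7, 30)],
    }))
```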

This organized structure is a foundational piece of advanced SEO. It scales as your site grows, provides invaluable data, and helps you guide Google’s attention far more effectively. For businesses looking to put this on autopilot, you can explore automated sitemap management features that keep your sitemaps perfectly structured and updated in real time.

If you’re still manually creating and updating your sitemap, you’re setting yourself up for mistakes and missed opportunities. The real goal here is to build a reliable, 'set it and forget it' system. True automation means your sitemap dynamically updates the very moment you publish a new blog post, tweak a product page, or add a new service.

Thankfully, most modern content management systems (CMS) make this incredibly simple. If you're on WordPress, popular SEO plugins like Yoast or Rank Math handle all the heavy lifting, automatically generating and maintaining your XML sitemap for you. Any time you add or edit content, the sitemap instantly reflects the change with the correct URL and <lastmod> date—no manual work needed.
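If you're on a custom stack without a plugin, the core idea is easy to sketch. Hook into save and delete events, and bump <lastmod> only when the content actually changed, so a re-save with no edits doesn't send a false freshness signal. SitemapRegistry is a hypothetical in-memory class. A real implementation would persist this data and regenerate the XML from it.

```python
import hashlib
from datetime import datetime, timezone
from typing import Optional


class SitemapRegistry:
    """Hypothetical store that CMS save/delete hooks would call."""

    def __init__(self) -> None:
        self._entries: dict[str, tuple[str, datetime]] = {}  # url -> (hash, lastmod)

    def upsert(self, url: str, content: str, now: Optional[datetime] = None) -> bool:
        """Record a publish or edit; return True if lastmod was bumped."""
        digest = hashlib.sha256(content.encode("utf-8")).hexdigest()
        previous = self._entries.get(url)
        if previous is not None and previous[0] == digest:
            return False  # saved without real changes: keep the old lastmod
        stamp = now if now is not None else datetime.now(timezone.utc)
        self._entries[url] = (digest, stamp)
        return True

    def delete(self, url: str) -> None:
        """Drop an unpublished or deleted page from the sitemap."""
        self._entries.pop(url, None)

    def lastmod(self, url: str) -> str:
        # W3C Datetime, e.g. 2024-07-30T09:00:00+00:00 for an aware UTC datetime.
        return self._entries[url][1].isoformat(timespec="seconds")

    def urls(self) -> list[str]:
        return sorted(self._entries)
```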

Platforms like Shopify and Webflow have similar functionality baked right in, ensuring your sitemap is always an accurate mirror of your site’s indexable content. This is the cornerstone of a low-maintenance, high-impact SEO strategy.

Just having a sitemap isn't enough; search engines need to know where to find it. While you can (and should) submit it directly, the very first thing you should do is add it to your robots.txt file. This file is one of the first places crawlers look when they visit your site.

Adding a simple directive in your robots.txt file acts as a clear signpost for all bots, not just Google’s.

It’s as easy as adding this one line:

```
Sitemap: https://www.yourdomain.com/sitemap_index.xml
```

This tells crawlers exactly where to find your map, ensuring they can easily discover all your important URLs. It’s a simple but absolutely critical step.

While robots.txt helps with auto-discovery, you should still proactively submit your sitemap directly to search engine webmaster tools. This puts your sitemap squarely on their radar and, more importantly, gives you access to invaluable performance data.

The two most important places to submit are Google Search Console and Bing Webmaster Tools.

Submitting your sitemap to these tools does more than just tell them where it is; it unlocks a powerful diagnostic dashboard. You can see when it was last read, how many URLs were discovered, and crucially, if Google or Bing ran into any errors.

This direct feedback loop is essential for troubleshooting indexing problems later on.

For anyone who wants their content indexed as fast as humanly possible, there’s a more advanced tactic: the IndexNow API. This is a protocol backed by major search engines like Bing and Yandex that lets you instantly notify them the moment a URL is added, updated, or even deleted.

Instead of waiting around for crawlers to re-check your sitemap on their own schedule, IndexNow lets you push the update to them in real-time. This can slash indexing time from days to mere minutes—a huge advantage for time-sensitive content like news articles or product launches.
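For the curious, an IndexNow submission is just an HTTP POST with a small JSON body. This sketch assumes you have already generated a key and host it as a text file on your domain, as the protocol requires. The endpoint shown is the shared api.indexnow.org one, and the helper names are hypothetical. Under the protocol, a single request carries URLs from one host, up to 10,000 of them.

```python
import json
import urllib.request
from urllib.parse import urlparse

INDEXNOW_ENDPOINT = "https://api.indexnow.org/indexnow"
MAX_URLS_PER_REQUEST = 10_000  # per-request cap in the IndexNow protocol


def build_indexnow_payload(urls, key, key_location=None):
    """Build the JSON body for one IndexNow batch (all URLs on one host)."""
    if not urls:
        raise ValueError("no URLs to submit")
    if len(urls) > MAX_URLS_PER_REQUEST:
        raise ValueError("IndexNow accepts at most 10,000 URLs per request")
    hosts = {urlparse(u).netloc for u in urls}
    if len(hosts) != 1:
        raise ValueError("all URLs in one request must share a host")
    payload = {"host": hosts.pop(), "key": key, "urlList": list(urls)}
    if key_location:  # only needed if the key file isn't at /<key>.txt
        payload["keyLocation"] = key_location
    return payload


def submit_indexnow(urls, key, key_location=None, endpoint=INDEXNOW_ENDPOINT):
    """POST the batch; urlopen raises HTTPError on 4xx/5xx responses."""
    body = json.dumps(build_indexnow_payload(urls, key, key_location)).encode("utf-8")
    request = urllib.request.Request(
        endpoint,
        data=body,
        method="POST",
        headers={"Content-Type": "application/json; charset=utf-8"},
    )
    with urllib.request.urlopen(request, timeout=10) as response:
        return response.status  # 200 or 202 means the submission was accepted
```

Rejected submissions surface as exceptions, for example a 429 when you send too many requests. A production setup would catch these, log them, and retry later rather than let a publish action fail.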

This used to require some technical know-how, but new platforms are making it accessible to everyone. For example, you can see how IndexPilot works to automate this entire process, combining dynamic sitemap monitoring with instant IndexNow pings.

This level of automation ensures your sitemap isn’t just a passive file but an active tool that’s constantly working to get your content seen. By combining automated generation, proper submission, and instant notifications, you create a robust system that maximizes your crawl efficiency and search visibility.

It’s a classic SEO headache. You’ve built a perfect sitemap, submitted it to Google Search Console, and then... nothing. You check the reports only to find a huge chunk of your URLs are stuck in that frustrating limbo: "Discovered - currently not indexed." This gap between what Google knows about and what it actually shows in search results is where many SEO strategies fall flat.

Getting past this hurdle means moving beyond simple submission and into active troubleshooting. You have to learn how to decode the messages Google is sending you through its own tools. More often than not, the issue isn’t the sitemap itself, but a problem with the pages inside it.

Your go-to tool for this investigation is the Page Indexing report in Google Search Console. This report doesn’t just show what is indexed; its real value is in telling you why other pages aren't. Think of it as a direct diagnostic checklist from Google.

Even with a sitemap listing thousands of URLs, you might see only a fraction of them indexed. This is a common challenge. You can spot these gaps by comparing the number of submitted URLs against the indexed pages right in GSC's sitemap report. Common culprits include accidental noindex tags, pages blocked in robots.txt, slow-loading pages, or just plain thin content.
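The comparison GSC performs can also be reproduced from your own data, which helps when you want the exact list of missing URLs. This sketch assumes two hypothetical inputs: the URLs in your sitemap, and a list of indexed URLs obtained however you prefer (for example, from a Search Console export).

```python
def coverage_report(submitted, indexed):
    """Return (share of sitemap URLs indexed, sorted list of those that aren't)."""
    submitted = set(submitted)
    indexed_from_sitemap = submitted & set(indexed)
    not_indexed = sorted(submitted - indexed_from_sitemap)
    ratio = len(indexed_from_sitemap) / len(submitted) if submitted else 0.0
    return ratio, not_indexed


if __name__ == "__main__":
    ratio, missing = coverage_report(
        ["https://ex.com/a", "https://ex.com/b"], ["https://ex.com/a"]
    )
    print(f"{ratio:.0%} indexed; investigate: {missing}")
```

URLs that are indexed but not in the sitemap are ignored here on purpose, because the question is how much of your curated list made it in.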

This is what the report looks like—it clearly separates the good from the bad and gives you a starting point.

The report categorizes everything, giving you specific issues like "Page with redirect" or "Excluded by ‘noindex’ tag" to dig into.

Once you start exploring the "Why pages aren’t indexed" section, you'll probably run into a few usual suspects. Each reason points to a different kind of problem, and knowing how to fix them is the core of effective sitemap optimization.

Here's a breakdown of the most frequent issues I see and how to handle them.

- Excluded by ‘noindex’ tag: A noindex tag in a page's HTML <head> or X-Robots-Tag HTTP header is an explicit command telling Google to keep that page out of its index. If the noindex tag was added by mistake (which happens surprisingly often during site migrations or plugin updates), just remove it. Afterward, use GSC’s "Request Indexing" feature to get Google to recrawl it.
- Blocked by robots.txt: Your robots.txt file can use a "Disallow" directive to stop crawlers from accessing certain pages or entire sections of your site. If an important URL is disallowed there, Googlebot can't crawl it, so it can't read or properly index the page's content. Open your robots.txt file and review it. If a valuable page is being disallowed, remove or tweak the rule. Just be careful not to accidentally unblock private or sensitive areas of your site.

A crucial insight here is that Google is getting much stricter about content quality. Simply having a page exist isn't enough anymore. It has to meet a certain quality threshold to earn its place in the index. Prioritizing content depth and user experience is no longer optional.
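To check a single URL for the two causes above, here's a Python sketch. It assumes you've already fetched the page's HTML, its response headers, and the site's robots.txt. blocking_reasons is a hypothetical name. One detail is worth knowing: if robots.txt blocks a page, Googlebot never fetches it and so never sees a noindex on it. That's why the two problems should be checked and fixed independently.

```python
from html.parser import HTMLParser
from urllib.robotparser import RobotFileParser


class _RobotsMetaParser(HTMLParser):
    """Collect content values from <meta name="robots"> and "googlebot" tags."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        values = {name: (value or "") for name, value in attrs}
        if values.get("name", "").lower() in ("robots", "googlebot"):
            self.directives.append(values.get("content", "").lower())


def blocking_reasons(url, html, headers, robots_txt, agent="Googlebot"):
    """List the reasons (if any) this page can't be crawled or indexed."""
    reasons = []
    robots = RobotFileParser()
    robots.parse(robots_txt.splitlines())
    if not robots.can_fetch(agent, url):
        reasons.append("blocked by robots.txt")
    lowered = {name.lower(): value for name, value in headers.items()}
    if "noindex" in lowered.get("x-robots-tag", "").lower():
        reasons.append("noindex in X-Robots-Tag header")
    meta = _RobotsMetaParser()
    meta.feed(html)
    if any("noindex" in directive for directive in meta.directives):
        reasons.append("noindex in meta robots tag")
    return reasons
```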

For a quick reference, here’s a table of common indexing errors you'll find in Google Search Console and what to do about them.

This table breaks down the most frequent status reports from GSC, what they actually mean, and how you can get things moving again.

Fixing these underlying page problems is the key to turning a "Discovered" URL into an "Indexed" one. This is what a strong website indexing strategy is all about, ensuring your sitemap optimization efforts actually lead to better search visibility.

Even with the best plan, sitemap optimization can bring up some tricky questions. Let's walk through some of the most common ones I hear from clients and in forums. Getting these right will help you make confident, impactful decisions for your site's SEO.

Absolutely not. This is probably the biggest mistake people make. Your XML sitemap should be a highlight reel of your most valuable, SEO-ready pages—not a directory of every single URL on your domain.

When you stuff your sitemap with low-value pages, you dilute the importance of your key content and burn through your crawl budget. Search engines end up wasting time on URLs that have no chance of ranking, pulling focus away from the pages that actually drive traffic and revenue.

Be ruthless about excluding pages like these:

- Paginated URLs like /page/2/, /page/3/, and so on from your blog or category archives. Let crawlers find these naturally.
- Any page that uses a rel="canonical" tag to point somewhere else. Including it in the sitemap sends a confusing, mixed signal.
- Any page blocked by your robots.txt file or marked with a 'noindex' tag. Pages like these have no business being in your sitemap.

Your sitemap is your direct line to search engines. It’s your chance to say, "Hey, out of all the pages on my site, these are the ones that matter." A lean, focused sitemap is a powerful signal of quality.

The real goal here is automation. Ideally, your sitemap should update dynamically the instant you publish, edit, or delete content. Modern CMS plugins, like Yoast SEO for WordPress, handle this beautifully right out of the box.

You only need to submit your sitemap URL to Google Search Console once. After Google knows where your sitemap lives (e.g., yourdomain.com/sitemap_index.xml), its crawlers will check back on their own schedule to look for new updates. The <lastmod> tag is the crucial signal that tells them when a page has fresh content worth re-crawling.

There's no need to manually resubmit your sitemap every time you update a blog post. That's what the automation is for. The only time you might need to resubmit is if you make a huge structural change, like splitting a massive sitemap into a new index file for the first time. Otherwise, trust the process.

This is a great question and a common point of confusion. The easiest way to remember the difference is to think about the audience.

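- HTML sitemap: a regular web page written for human visitors, usually linking to your main sections and pages to help people navigate.
- XML sitemap: a file written for search engine crawlers, listing your URLs along with metadata such as the <lastmod> date.

While an HTML sitemap is a nice-to-have for users, the XML sitemap is the non-negotiable tool for technical SEO. It's how you communicate directly with search engine bots, and it’s the one you need to optimize.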

Honestly? Not really. For years, webmasters used the <priority> tag to try and tell search engines how important one page was relative to others, and <changefreq> to suggest how often it was updated.

But Google has been very clear that they now largely ignore these two tags. Their algorithms have become far more sophisticated. They rely on hundreds of better signals—like the quality and number of backlinks, user engagement, and historical crawl data—to figure out a page's importance and how often to check it.

Your time is much better spent on things that actually move the needle:

- Keeping your <lastmod> tag accurate and up-to-date.

Focusing on a clean, accurate, and strategic sitemap will deliver far greater SEO results than messing around with outdated tags.

Stop waiting for search engines to find your new content. With IndexPilot, you can automate your sitemap monitoring and instantly notify IndexNow-enabled search engines like Bing of every update. Ensure your best pages get discovered and indexed in minutes, not days. Start your free 14-day trial and take control of your indexing at https://www.indexpilot.io.