Think of a website crawl test as a "health checkup" for your site. It’s a simulation that mimics how search engines like Google discover, read, and understand your pages. A specialized software tool systematically browses your website, following every link it can find to map out all your URLs.

More importantly, it uncovers the technical roadblocks that could be tanking your search rankings without you even knowing.

Let's use an analogy. Imagine a search engine is a librarian for the entire internet. Before any book (your webpage) can be put on a shelf (indexed) for people to find, the librarian (in this case, Googlebot) has to discover it exists and read it first. A website crawl test lets you see your site through the eyes of that librarian, showing you exactly what’s stopping your most important content from ever being found.

Without this insight, you're flying blind. You can pour hundreds of hours and thousands of dollars into creating brilliant content, but if search engine crawlers can't easily get to it, all that effort is for nothing.

The entire foundation of SEO rests on one simple principle: if a page can't be crawled, it can't be ranked.

Every website gets an allocated "crawl budget"—that's the number of URLs Googlebot is willing and able to crawl on your site within a certain period. If your site is a mess of broken links, endless redirect chains, and thin, low-value pages, the crawler wastes its limited resources on that digital junk.

This is a huge problem. It means Googlebot might run out of time and energy before it ever reaches your most critical product or service pages. A well-structured site guides crawlers straight to your best content, making every bit of that crawl budget count. A messy site, on the other hand, forces them to navigate a maze, often leaving your most valuable pages undiscovered.
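To put rough numbers on that waste, you can summarize a crawler's export by status class. The sketch below assumes you have a list of `(url, status_code)` pairs (the sample URLs are made up) and treats every non-200 response as budget that didn't land on an indexable page. That is a simplification: a page that returns 200 can still be thin or duplicate.

```python
from collections import Counter


def crawl_waste_report(results):
    """Summarize how much of a crawl went to non-200 responses.

    `results` is a list of (url, status_code) pairs exported from a crawler.
    Redirects (3xx) and errors (4xx/5xx) are counted as "wasted" requests.
    """
    classes = Counter(f"{status // 100}xx" for _, status in results)
    total = len(results)
    wasted = total - classes.get("2xx", 0)
    share = wasted / total if total else 0.0
    return {"total": total, "by_class": dict(classes), "wasted_share": round(share, 3)}


# Hypothetical sample data standing in for a real crawl export.
sample = [
    ("https://example.com/", 200),
    ("https://example.com/old-page", 301),
    ("https://example.com/missing", 404),
    ("https://example.com/products/widget", 200),
]
print(crawl_waste_report(sample))
```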

A proper website crawl test is your first step to fixing this. It helps you find all those inefficiencies. You can learn more about this by exploring the concept of crawl budget optimization to ensure search engines spend their time on what truly matters. In fact, running a thorough crawl is a fundamental part of any comprehensive website health check.

Beyond just budget concerns, a crawl unearths a ton of technical problems that are often completely invisible to the human eye. These issues can directly harm both user experience and your search engine rankings.

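Here are a few of the critical problems a crawl will bring to light: broken links that return 404 errors, long redirect chains, server errors, important pages accidentally blocked by robots.txt, and missing or duplicate on-page elements such as title tags.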

Google even gives you tools to keep an eye on this. The revamped Crawl Stats report in Google Search Console, for example, offers incredibly detailed data on crawl requests, download sizes, and response times. It's a goldmine for diagnosing exactly how Googlebot is interacting with your site. You can discover more insights about the Crawl Stats report to get a better handle on your site's technical performance straight from the source.

Picking the right tool for a website crawl is probably the most critical decision you'll make in this whole process. Seriously. The crawler that works for a solo SEO auditing a small blog is completely different from what an enterprise team needs for a sprawling e-commerce site. Your choice dictates the kind of data you get and, ultimately, the insights you can act on.

The market for these tools is growing fast. In 2023, the web crawling services market was valued at around $579.72 million, and it's only getting bigger. All that growth means you have more options than ever, which is great, but it can also make the decision feel overwhelming.

So, where do you start? The first big fork in the road is choosing between a desktop app and a cloud-based platform. Do you want something that runs on your own machine, or something that runs on someone else's powerful servers?

Desktop crawlers, like the legendary Screaming Frog SEO Spider or Sitebulb, are applications you install right on your computer. They use your machine’s processor, RAM, and internet connection to crawl a site.

On the other hand, cloud-based crawlers are typically part of bigger SEO suites like Ahrefs Site Audit or Semrush Site Audit. They run on remote servers, so they don’t bog down your computer. This one difference has huge implications for cost, power, and convenience.

To help you decide which path is right for you, here’s a quick comparison.

| Feature | Desktop Crawlers (e.g., Screaming Frog) | Cloud-Based Crawlers (e.g., Ahrefs Site Audit) |
|---|---|---|
| Resource Usage | Uses your computer's CPU, RAM, and bandwidth. | Runs on remote servers, no impact on your machine. |
| Cost Structure | Often a per-user license or a freemium model. | Typically a monthly or annual subscription (SaaS). |
| Scalability | Can struggle with very large sites (1M+ pages). | Easily handles massive websites with millions of pages. |
| Automation | Manual setup required for each crawl. Scheduling is limited or non-existent. | Built for scheduled, recurring crawls to monitor health over time. |
| Accessibility | Data is stored on your machine; hard to share with a team. | Data is stored in the cloud, easily accessible by your whole team. |
| Configuration | Highly customizable with deep, sometimes complex, settings. | More user-friendly, guided setup. Less granular control. |

Ultimately, there’s no single "best" option—just the best fit for your specific needs, budget, and the size of the websites you're analyzing.

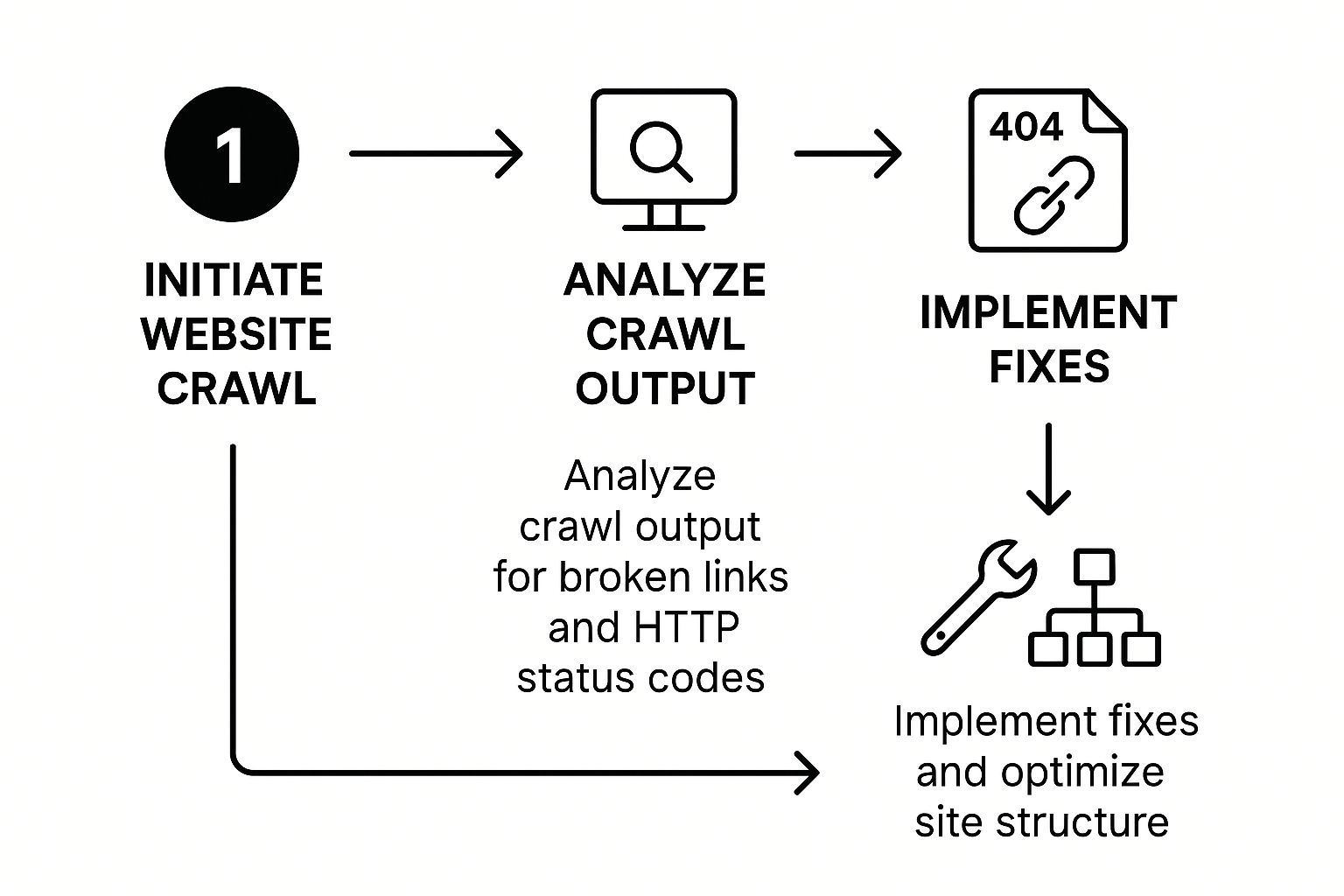

This image shows the general workflow you'll follow, no matter which tool you end up choosing.

As you can see, the process always flows from discovery and crawling to analysis and action. The tool is just the vehicle.

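When you're weighing your options, it really comes down to a few practical questions: How large are the sites you audit? What is your budget? Do you need scheduled, recurring crawls? Does your team need shared access to the data?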

As you explore, you might also find specialized tools that blend traditional crawling with other types of analysis. For example, a tool like the Lighthouse Crawler can run performance checks on every URL it finds, giving you both SEO and Core Web Vitals data in one go. It’s a great example of how different tools can solve specific problems.

Alright, you've picked your crawling tool. Now it's time to put it to work.

Getting your first crawl test running is about more than just plugging in a URL and hitting "Go." The real magic happens in the setup—that’s where you fine-tune the crawl to pull back the exact data you need to make smart decisions.

The first step is always the easiest: pop in your website’s starting URL. Think of this as the "seed" where the crawler begins its journey, following every link it can find to map out your entire site.
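Under the hood, that journey is essentially a breadth-first search over links. Here is a minimal sketch using only Python's standard library. It assumes a `fetch(url)` function that returns a page's HTML (or `None` on failure), and it stays on the seed URL's host. A real crawler adds HTTP handling, robots.txt checks, and rate limiting on top of this.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin, urlparse


class LinkExtractor(HTMLParser):
    """Collects href values from <a> tags."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def crawl(seed, fetch, max_pages=500):
    """Breadth-first crawl from `seed`, staying on the seed's host.

    Returns the set of internal URLs discovered (including the seed).
    """
    host = urlparse(seed).netloc
    seen = {seed}
    queue = deque([seed])
    while queue:
        url = queue.popleft()
        html = fetch(url)
        if html is None:
            continue  # fetch failed; a real tool would record the error
        parser = LinkExtractor()
        parser.feed(html)
        for href in parser.links:
            # Resolve relative links and drop #fragments so one page = one URL.
            absolute, _ = urldefrag(urljoin(url, href))
            if urlparse(absolute).netloc == host and absolute not in seen and len(seen) < max_pages:
                seen.add(absolute)
                queue.append(absolute)
    return seen


# Hypothetical in-memory "site" so the sketch runs without a network.
site = {
    "https://example.com/": '<a href="/about">About</a> <a href="/blog/">Blog</a>',
    "https://example.com/about": '<a href="/">Home</a>',
    "https://example.com/blog/": '<a href="post-1#comments">Post</a> <a href="https://other.com/">Out</a>',
    "https://example.com/blog/post-1": "",
}
found = crawl("https://example.com/", site.get)
print(sorted(found))
```

Passing `site.get` as `fetch` lets you try the logic safely; the external link to other.com is ignored because it sits on a different host.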

Before you let the crawler loose, a few key settings can mean the difference between a goldmine of insights and a complete waste of time. Or worse, a crashed server.

First up is crawl speed. Depending on the tool, you'll see it labeled as "threads" (how many requests the crawler runs in parallel) or "rate limit" (how many requests per second it sends). Set it too high, and you can easily overwhelm your server, slowing down the site for actual human visitors or even knocking it offline entirely.

Pro Tip: For your very first website crawl, always start with a low crawl speed. I’m talking 1-2 threads. It’s far better to have a slow, successful crawl than a fast, destructive one. You can slowly crank up the speed on later crawls once you get a feel for what your server can handle.
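If you ever script your own checks, the same principle applies. This hypothetical `RateLimiter` class enforces a minimum gap between requests, roughly what a single-thread crawl with a rate limit does. The clock and sleep functions are injectable, which is an assumption made here so the logic can be tested without real waiting.

```python
import time


class RateLimiter:
    """Enforces a minimum interval between requests (a requests-per-second cap)."""

    def __init__(self, max_per_second, clock=time.monotonic, sleep=time.sleep):
        self.interval = 1.0 / max_per_second
        self.clock = clock
        self.sleep = sleep
        self.next_allowed = 0.0

    def wait(self):
        """Block until the next request is allowed, then reserve the following slot."""
        now = self.clock()
        if now < self.next_allowed:
            self.sleep(self.next_allowed - now)
            now = self.next_allowed
        self.next_allowed = now + self.interval


def fetch_politely(urls, fetch, limiter):
    """Fetch URLs one at a time, waiting on the limiter before each request."""
    results = []
    for url in urls:
        limiter.wait()
        results.append((url, fetch(url)))
    return results
```

With `max_per_second=1`, three URLs take about two seconds: slow, but your server barely notices.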

Next, you need to decide what to do about your robots.txt file. By default, most crawlers will respect the rules in your robots.txt—just like Googlebot does. This is perfect for seeing what search engines are allowed to see.

But what if you’re accidentally hiding important pages? To get a truly complete picture of every single URL on your site, you can configure the crawler to ignore robots.txt. This is a fantastic way to find pages that were blocked by mistake and get them back in front of search engines.
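Python's standard library ships `urllib.robotparser`, which you can use to double-check which of your URLs a robots.txt file blocks. The robots.txt rules and URLs below are made up. Note that this parser does not implement every Google extension (such as `*` and `$` wildcards or Google's longest-match precedence), so treat its verdicts as a first pass.

```python
from urllib.robotparser import RobotFileParser


def blocked_urls(robots_txt, urls, user_agent="Googlebot"):
    """Return the URLs that robots.txt disallows for `user_agent`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [u for u in urls if not parser.can_fetch(user_agent, u)]


# Hypothetical robots.txt where /products/ was blocked by mistake.
ROBOTS_TXT = """User-agent: *
Disallow: /checkout/
Disallow: /products/
"""
urls = [
    "https://example.com/",
    "https://example.com/products/widget",
    "https://example.com/checkout/cart",
]
print(blocked_urls(ROBOTS_TXT, urls))
```

If a URL you want ranked shows up in that list, the robots.txt rule is the first place to look.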

Once you've got the basics down, you can start refining your crawl to uncover more specific, nuanced insights. These advanced configurations help you see your site exactly the way a search engine does.

A powerful technique is to change the user-agent. A user-agent is just a string of text that identifies the bot to the server. By switching your crawler's user-agent to Googlebot, you can check if your server is treating Google differently than it treats regular users—a sneaky practice known as cloaking.
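A rough way to run that comparison yourself is to fetch the same URL twice with different `User-Agent` headers and compare the responses. In this sketch the browser user-agent string is a simplified placeholder. A mismatch is only a prompt to investigate: timestamps, session tokens, or rotating ads can make two honest responses differ, and servers that verify Googlebot by reverse DNS will still recognize that the request isn't really from Google.

```python
import hashlib
import urllib.request

GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
BROWSER_UA = "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"  # simplified placeholder


def fetch_with_ua(url, user_agent):
    """Download a URL's raw bytes while sending the given User-Agent header."""
    req = urllib.request.Request(url, headers={"User-Agent": user_agent})
    with urllib.request.urlopen(req, timeout=10) as resp:
        return resp.read()


def looks_cloaked(url, fetch=fetch_with_ua):
    """True if the "bot" and "browser" responses differ byte-for-byte."""
    as_bot = hashlib.sha256(fetch(url, GOOGLEBOT_UA)).hexdigest()
    as_user = hashlib.sha256(fetch(url, BROWSER_UA)).hexdigest()
    return as_bot != as_user
```

The `fetch` parameter is there so you can swap in a stub for testing or a different HTTP client.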

Another absolutely critical setting is JavaScript rendering. So many modern sites use JavaScript to load content, from e-commerce product listings to entire blog posts. If your crawler can’t render JavaScript, it might just see a blank page, completely missing huge chunks of your website. Toggling this feature on ensures the crawler sees the final, fully-rendered version of your pages, just like a user would.
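Before you turn rendering on, a quick way to see whether a page depends on JavaScript is to check whether its key content appears in the raw, unrendered HTML. This sketch ignores `<script>` and `<style>` contents and looks for phrases you choose; the sample HTML and phrases are invented for illustration.

```python
from html.parser import HTMLParser


class TextExtractor(HTMLParser):
    """Collects text that sits outside <script> and <style> tags."""

    def __init__(self):
        super().__init__()
        self.parts = []
        self._skip = 0

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip:
            self._skip -= 1

    def handle_data(self, data):
        if not self._skip:
            self.parts.append(data)


def missing_without_js(raw_html, expected_phrases):
    """Return the phrases that do not appear in the raw HTML's visible text."""
    parser = TextExtractor()
    parser.feed(raw_html)
    parser.close()
    text = " ".join(" ".join(parser.parts).split()).lower()
    return [p for p in expected_phrases if p.lower() not in text]


# Hypothetical pages: one is an empty JS "shell", one is server-rendered.
js_shell = '<html><body><div id="app"></div><script>renderProduct("Blue Widget")</script></body></html>'
server_rendered = "<html><body><h1>Blue Widget</h1><button>Add to cart</button></body></html>"
print(missing_without_js(js_shell, ["Blue Widget", "Add to cart"]))
```

If important phrases come back as missing, your crawler needs JavaScript rendering enabled to see that page properly.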

Finally, think about the scope of the crawl itself. Depending on your tool, you can manage how aggressively it hunts for new pages. For example, do you want to include subdomains (like blog.yoursite.com) in the audit?

Getting the configuration right is everything. It focuses your crawl on the data that matters most while keeping your server happy. For anyone managing a large or frequently updated site, it’s also worth learning how to increase your Google crawl rate, which helps ensure search engines see your changes as quickly as possible.
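If you script your own crawls, scope rules like these boil down to a URL filter. Here is a minimal sketch with a hypothetical `in_scope` helper: it keeps URLs on the root host, optionally includes subdomains, and optionally skips parameterized URLs (such as sort or filter variants), which often create near-endless crawl paths.

```python
from urllib.parse import urlparse


def in_scope(url, root_host, include_subdomains=False, skip_query_urls=True):
    """Decide whether a discovered URL belongs in this crawl."""
    parts = urlparse(url)
    host = parts.hostname or ""
    same_site = host == root_host or (include_subdomains and host.endswith("." + root_host))
    if not same_site:
        return False
    # Optionally skip parameterized URLs such as ?sort=price.
    return not (skip_query_urls and parts.query)


print(in_scope("https://blog.yoursite.com/post", "yoursite.com", include_subdomains=True))
```

The `"." + root_host` check matters: without the dot, a domain like notyoursite.com would wrongly count as a subdomain.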

Once your website crawl finishes, you're looking at a mountain of data. This is the exact moment where most people freeze, totally unsure of where to even start.

The secret isn’t to try and fix everything at once. You have to think like an emergency room doctor and triage the issues based on severity. Address the life-threatening problems first.

In the world of SEO, the most critical issues are the ones that stop search engine bots and users dead in their tracks. These are your absolute top priority because they directly block pages from being crawled and indexed. If you only fix one thing, make it one of these.

The first thing you should do is filter your crawl report by HTTP status code. Your initial targets are the real “showstoppers”: anything that signals a complete failure to access a page.

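Here’s where to focus your energy first: server errors (5xx status codes) and client errors (4xx status codes, such as 404 Not Found). Both mean that neither users nor crawlers can reach the page.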
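Once you've exported results as `(url, status_code)` pairs, the triage itself is a simple grouping. A sketch with invented sample data:

```python
def showstoppers(results):
    """Group URLs that failed outright, server errors first."""
    buckets = {"5xx": [], "4xx": []}
    for url, status in results:
        if 500 <= status < 600:
            buckets["5xx"].append(url)
        elif 400 <= status < 500:
            buckets["4xx"].append(url)
    return buckets


# Hypothetical crawl export.
crawl = [("/", 200), ("/old-promo", 404), ("/api/cart", 503), ("/moved", 301), ("/gone", 410)]
print(showstoppers(crawl))
```

Redirects (3xx) are deliberately left out here; they get their own pass once the outright failures are handled.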

After you’ve handled those, turn your attention to redirect issues. A single 301 redirect is perfectly fine, but redirect chains are a major headache. A chain occurs when a page redirects to another page, which then redirects again. Every extra hop adds a round trip that slows the page down for users and spends crawl budget, long chains can dilute the signals passed between pages, and Googlebot eventually stops following a chain that gets too long.
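Given a map of each redirecting URL to its immediate target (most crawlers can export this), you can reconstruct every chain and spot loops. This is a minimal sketch with hypothetical paths. The usual fix for any chain it reports is to point the first URL, and your internal links, straight at the final destination.

```python
def redirect_chains(redirects, min_hops=2):
    """Follow each redirect to its final destination.

    `redirects` maps a source URL to the URL it redirects to (one hop).
    Returns (start, path, is_loop) for every chain with at least `min_hops`
    hops, plus every loop regardless of length.
    """
    chains = []
    for start in redirects:
        path = [start]
        seen = {start}
        current = start
        is_loop = False
        while current in redirects:
            current = redirects[current]
            path.append(current)
            if current in seen:
                is_loop = True  # we've come back to a URL already in this chain
                break
            seen.add(current)
        if len(path) - 1 >= min_hops or is_loop:
            chains.append((start, path, is_loop))
    return chains


# Hypothetical redirect export: one 2-hop chain, one loop, one harmless single hop.
redirects = {
    "/old": "/older-new",
    "/older-new": "/new",
    "/a": "/b",
    "/b": "/a",
    "/single": "/target",
}
for start, path, is_loop in redirect_chains(redirects):
    print(" -> ".join(path), "(loop)" if is_loop else "")
```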

A classic mistake is getting bogged down in low-priority details like missing alt text while hundreds of pages are throwing 404 errors. Always stabilize the patient first. Fix what's fundamentally broken before you start optimizing what's already working.

This priority-based approach turns a daunting spreadsheet into a clear, actionable to-do list. Once you've tackled these foundational problems, you can confidently move on to other important issues, like pages that might be hurting your indexing efforts. If you're looking for ways to speed things up, our guide on achieving instant indexing for new and updated content is a great next step.

With the critical access issues out of the way, you can now shift your focus to the on-page elements that directly influence rankings and click-through rates. These are the details that help search engines understand your content and, just as importantly, persuade users to actually click.

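Start by sorting your crawl data to hunt for common on-page mistakes, such as missing, duplicate, or overly long title tags and meta descriptions, and images without alt text.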
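Title checks like these are easy to script against a crawl export. The sketch assumes a dict mapping each URL to its title text. The 60-character cutoff is a common rule of thumb rather than an official limit, since search results truncate titles based on display width.

```python
from collections import defaultdict

MAX_TITLE_CHARS = 60  # assumption: a rule of thumb, not an official limit


def title_issues(pages):
    """Flag missing, overly long, and duplicate <title> tags.

    `pages` maps URL -> title string (empty or None if the page has no title).
    Duplicates are compared case-insensitively.
    """
    issues = {"missing": [], "too_long": [], "duplicate": []}
    by_title = defaultdict(list)
    for url, title in pages.items():
        title = (title or "").strip()
        if not title:
            issues["missing"].append(url)
            continue
        if len(title) > MAX_TITLE_CHARS:
            issues["too_long"].append(url)
        by_title[title.lower()].append(url)
    issues["duplicate"] = [urls for urls in by_title.values() if len(urls) > 1]
    return issues


# Hypothetical titles pulled from a crawl.
pages = {"/": "Home | Acme", "/a": "", "/b": "Widgets", "/c": "widgets", "/d": "x" * 70}
print(title_issues(pages))
```

The same pattern works for meta descriptions; only the length threshold changes.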

Your analysis also needs to account for the growing diversity of web crawlers hitting your site. A recent report highlighted an 18% global spike in crawler traffic, with bot traffic from newcomers like GPTBot exploding by 305%. This means your server is getting hit by a lot more than just Googlebot, which impacts everything from your server load to data privacy. You can discover more insights about the rise of AI crawlers and see how it affects your analysis.
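Your own server logs show who is actually crawling. The sketch below counts requests per crawler in the common "combined" log format, where the user-agent is the last quoted field. The token list and log lines are illustrative, and because user-agent strings can be spoofed, a count here is not proof that a request really came from that company.

```python
import re
from collections import Counter

# Hypothetical list of crawler tokens to look for in the User-Agent.
BOT_TOKENS = ["Googlebot", "bingbot", "GPTBot"]

# In the combined log format, the User-Agent is the last quoted field.
UA_PATTERN = re.compile(r'"([^"]*)"\s*$')


def count_bots(log_lines):
    """Count requests per crawler token; everything else lands in "other"."""
    counts = Counter()
    for line in log_lines:
        match = UA_PATTERN.search(line)
        if not match:
            continue  # malformed line
        ua = match.group(1).lower()
        for token in BOT_TOKENS:
            if token.lower() in ua:
                counts[token] += 1
                break
        else:
            counts["other"] += 1  # browsers and unlisted bots
    return counts


# Invented sample lines.
lines = [
    '1.2.3.4 - - [01/Jan/2024:00:00:00 +0000] "GET / HTTP/1.1" 200 512 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '5.6.7.8 - - [01/Jan/2024:00:00:01 +0000] "GET /blog/ HTTP/1.1" 200 900 "-" "Mozilla/5.0 (compatible; GPTBot/1.0)"',
    '9.9.9.9 - - [01/Jan/2024:00:00:02 +0000] "GET / HTTP/1.1" 200 512 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"',
]
print(count_bots(lines))
```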

Once you get past fixing basic 404s and redirect chains, a standard, one-size-fits-all website crawl starts to lose its edge. The real, game-changing insights don't come from a full site audit. They come from tailoring your crawls to answer specific, strategic questions about your site's performance.

This is where you graduate from simple audits to using your crawler as a powerful diagnostic tool.

Instead of crawling your entire domain every single time, start running segmented crawls. This just means telling the crawler to focus on one specific part of your site—a subdirectory, a subdomain, or even a list of URLs that share a common pattern. For instance, you could run a crawl only on your /blog/ subdirectory to see how your content marketing is holding up.

This focused approach makes your analysis faster and more effective because it lets you zero in on issues unique to certain site sections. For example, you can spot widespread hreflang errors in your German /de-de/ folder without noise from the rest of the site getting in the way.
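One check that benefits from a segmented crawl is hreflang reciprocity: each alternate-language page should link back to the page that references it, or search engines may ignore the annotation. A sketch, assuming you've extracted each page's hreflang tags into a dict; the URLs are hypothetical.

```python
def missing_return_links(hreflang):
    """Find hreflang annotations that are not reciprocated.

    `hreflang` maps each page URL to {language_code: alternate_url} as found
    on that page. Returns (page, language, alternate) for each missing return link.
    """
    problems = []
    for page, alternates in hreflang.items():
        for lang, alt_url in alternates.items():
            if alt_url == page:
                continue  # self-referencing tag, nothing to check
            back_links = hreflang.get(alt_url, {})
            if page not in back_links.values():
                problems.append((page, lang, alt_url))
    return problems


# Hypothetical data: the German page forgot to point back to the US page.
data = {
    "https://example.com/en-us/": {
        "en-us": "https://example.com/en-us/",
        "de-de": "https://example.com/de-de/",
    },
    "https://example.com/de-de/": {"de-de": "https://example.com/de-de/"},
}
print(missing_return_links(data))
```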

A list of technical errors is just that—a list. It's not valuable until you connect it to what actually matters: performance. This is where integrating your crawl data with other platforms like Google Analytics (GA4) and Google Search Console (GSC) becomes so critical.

Most advanced crawlers have API connections or allow you to import data from these sources. By layering GSC performance data over your crawl results, you can instantly see which broken pages are actually losing organic clicks. Combine that with GA4 data, and you’ll know which redirect chains are wrecking the user journey on your most important conversion paths.
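The join itself is straightforward once both datasets share a URL key. A sketch, assuming a crawl export of `(url, status_code)` pairs and a clicks-per-URL dict built from a Search Console performance export. Column names in real exports vary, and URLs must be normalized the same way on both sides.

```python
def prioritize_broken_pages(crawl_results, clicks_by_url):
    """Rank broken URLs (4xx/5xx) by the organic clicks they were getting.

    Returns (url, status, clicks) tuples, highest-click pages first.
    """
    broken = [(url, status) for url, status in crawl_results if status >= 400]
    ranked = sorted(broken, key=lambda item: clicks_by_url.get(item[0], 0), reverse=True)
    return [(url, status, clicks_by_url.get(url, 0)) for url, status in ranked]


# Hypothetical inputs.
crawl = [("/a", 404), ("/b", 200), ("/c", 500), ("/d", 404)]
clicks = {"/a": 12, "/b": 999, "/c": 340}
print(prioritize_broken_pages(crawl, clicks))
```

A broken page with hundreds of clicks jumps to the top of the list, while one with no search traffic can wait.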

This integration shifts your perspective from just "fixing errors" to "prioritizing fixes that will recover the most traffic and revenue." It’s the difference between being a technician and being a strategist.

The most effective SEOs don't just perform a one-off website crawl test; they build a system for continuous monitoring. Setting up automated, scheduled crawls (say, weekly) is the best way to catch new issues before they can drag down your rankings. This turns your crawler into a standing watchdog for your site's technical health.
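Scheduled crawls pay off when you compare each run with the last one. This sketch diffs two snapshots of `url -> status_code` and reports URLs that have started failing. How you schedule the crawl itself (your tool's built-in scheduler, cron, or similar) is up to you.

```python
def new_issues(previous, current):
    """Report URLs that return 4xx/5xx now but were OK (or absent) last time.

    Each snapshot maps url -> status_code. Returns sorted (url, old, new) tuples,
    where `old` is None for URLs that did not exist in the previous crawl.
    """
    report = []
    for url, status in current.items():
        old = previous.get(url)
        if status >= 400 and (old is None or old < 400):
            report.append((url, old, status))
    return sorted(report)


# Hypothetical snapshots from two weekly crawls.
last_week = {"/a": 200, "/b": 404}
this_week = {"/a": 404, "/b": 404, "/c": 500, "/d": 200}
print(new_issues(last_week, this_week))
```

Errors that were already known ("/b" here) are left out, so the report only surfaces what changed.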

After you've pushed your fixes live, you can also ask Google to recrawl the affected pages, which may speed up validation and let you see the impact of your changes sooner.

Finally, do not underestimate the power of crawl visualizations. Many tools can generate interactive diagrams of your site’s internal linking structure. These force-directed graphs are one of the clearest ways to understand your site architecture. You can spot orphaned page clusters and see exactly where link equity is flowing and where it isn't.
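The numbers behind those diagrams are simple to compute. This sketch counts internal inlinks from a crawl's link graph and flags orphans, which here means URLs you expect to exist (for example, from your XML sitemap) that no crawled page links to. The paths are made up.

```python
from collections import Counter


def link_report(link_graph, known_urls):
    """Count internal inlinks per URL and list orphaned URLs.

    `link_graph` maps each crawled page to the internal URLs it links to.
    `known_urls` is every URL you expect to exist, e.g. from a sitemap.
    """
    inlinks = Counter()
    for source, targets in link_graph.items():
        for target in set(targets):  # count each linking page once
            if target != source:  # ignore self-links
                inlinks[target] += 1
    orphans = sorted(url for url in known_urls if inlinks[url] == 0)
    return inlinks, orphans


# Hypothetical link graph and sitemap.
graph = {
    "/": ["/blog/", "/about"],
    "/blog/": ["/", "/blog/post-1"],
    "/about": ["/"],
}
sitemap = ["/", "/blog/", "/about", "/blog/post-1", "/landing-2019"]
inlinks, orphans = link_report(graph, sitemap)
print(inlinks.most_common(), orphans)
```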

Mastering crawl tests is a huge piece of the puzzle, but it's smart to explore other essential website testing techniques for a complete picture of your site's health. These advanced methods give you the deeper understanding you need to be truly proactive with your SEO.

Even with the best plan in place, a few questions always pop up once you start running your own website crawl tests. Let's walk through some of the most common ones I hear to clear up any confusion and get you crawling with confidence.

How often should you run a website crawl test? The honest answer: it depends entirely on how dynamic your website is.

For a large e-commerce site with thousands of products and daily inventory updates, a weekly crawl is a smart move. This rhythm helps you spot problems like new 404s from removed product pages almost as soon as they happen.

On the other hand, for a smaller, more static site like a local business brochure or a personal blog, a monthly or quarterly crawl is usually plenty.

But here’s the non-negotiable rule: always run a fresh website crawl test after any significant change. This means a site redesign, a platform migration (like moving from Shopify to WooCommerce), or a huge content rollout. That immediate checkup is your best insurance against introducing critical errors during an update.

Can a crawl test harm your website? Yes, it’s possible, but only if you get careless with your settings.

An aggressive crawl hammers your server with a high volume of requests in a very short time. This can easily overwhelm your hosting resources, leading to a painfully slow site for real users or, in a worst-case scenario, taking it offline completely.

This is exactly why adjusting the crawl speed is so critical. You'll often see this setting labeled as "threads" or "rate limit" in your tool. While most modern crawlers have safe default settings, if you're ever in doubt, always start slow. Kicking off a crawl with just one or two concurrent threads is the way to go.

It's much better to have a slow, successful crawl than a fast, destructive one.

What's the difference between crawling and indexing? This is a fundamental concept that trips up a surprising number of people, but it’s actually pretty simple when you break it down.

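Think of it like a librarian managing a massive library. Crawling is the librarian discovering a book and reading it. Indexing is cataloging that book and putting it on a shelf where people can find it.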

A page must be crawled before it can even be considered for indexing. To really get a handle on this crucial relationship, you should learn more about how search engine indexing works and see how it’s directly tied to your site's crawlability.

Are you tired of waiting for search engines to find your new content? IndexPilot automates the indexing process by instantly notifying Google and Bing the moment you publish or update a page. Ensure your latest content gets seen faster, improve your rankings, and drive more traffic with zero manual effort. Start your free 14-day trial at https://www.indexpilot.io today.